AlertManager is an open-source alerting system that works with the Prometheus Monitoring system. This blog is part of the Prometheus Kubernetes tutorial series.

In our previous posts, we have looked at the following.

In this guide, I will cover the Alert Manager setup and its integration with Prometheus.

Note: In this guide, all the Alert Manager Kubernetes objects will be created inside a namespace called monitoring. If you use a different namespace, you can replace it in the YAML files.

Alertmanager on Kubernetes

The Alert Manager setup consists of the following Kubernetes objects.

- A config map for the AlertManager configuration

- A config map for the AlertManager alert templates

- An Alert Manager Kubernetes Deployment

- An Alert Manager Service to access the web UI

Important Setup Notes

You should have a working Prometheus setup.

Prometheus should have the correct alert manager service endpoint in its config.yaml as shown below to send the alert to Alert Manager.

Note: If you are following my tutorial on Prometheus Setup On Kubernetes, you don’t have to add the following configuration because it is part of the Prometheus configmap.

    alerting:
      alertmanagers:
      - scheme: http
        static_configs:
        - targets:
          - "alertmanager.monitoring.svc:9093"

All the alerting rules have to be present on Prometheus config based on your needs. It should be created as part of the Prometheus config map with a file named prometheus.rules and added to the config.yaml in the following way.

    rule_files:
      - /etc/prometheus/prometheus.rules

Alert manager alerts can be written based on the metrics you receive on Prometheus.

To receive emails for alerts, you need a valid SMTP host in the Alert Manager config.yml (the smarthost parameter). You can customize the email template as per your needs in the Alert Template config map. We have given a generic template in this guide.
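Because email delivery depends entirely on the SMTP host being reachable, it can help to test the smarthost value before putting it into the config map. The following is a minimal Python sketch using only the standard library. The function names `parse_smarthost` and `smtp_reachable` are hypothetical helpers for illustration, and the check assumes a plain (non-TLS) SMTP connection, matching `require_tls: false` in this guide.

```python
import smtplib
from typing import Tuple


def parse_smarthost(smarthost: str) -> Tuple[str, int]:
    """Split a 'host:port' smarthost string into (host, port)."""
    host, sep, port = smarthost.rpartition(":")
    if not sep or not host or not port.isdigit():
        raise ValueError(f"expected 'host:port', got {smarthost!r}")
    return host, int(port)


def smtp_reachable(smarthost: str, timeout: float = 5.0) -> bool:
    """Return True if an SMTP server on the smarthost answers a NOOP command."""
    host, port = parse_smarthost(smarthost)
    try:
        # Opens a plain SMTP connection; no mail is sent.
        with smtplib.SMTP(host, port, timeout=timeout) as server:
            code, _ = server.noop()
            return code == 250
    except (OSError, smtplib.SMTPException):
        # Covers DNS failures, refused connections, timeouts and SMTP errors.
        return False
```

Calling `smtp_reachable("your-smtp-host:25")` from a machine that has the same network access as the cluster gives a quick yes or no. It does not verify authentication or that the server will accept mail from your sender address.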

Let’s get started with the setup.

Alertmanager Kubernetes Manifests

All the Kubernetes manifests used in this tutorial can be found in the GitHub repository below.

Clone the GitHub repository using the following command.

    git clone https://github.com/bibinwilson/kubernetes-alert-manager.git

Config Map for Alert Manager Configuration

Alert Manager reads its configuration from a config.yml file. It contains the alert template path, the email settings, and the other alert receiver configurations.

In this setup, we are using email and Slack webhook receivers. You can find all the supported alert receivers in the Alertmanager configuration documentation.
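To make the routing behavior concrete, here is a small Python sketch of how a routing tree with a default receiver and one child route picks a receiver. It mirrors the receivers used in this setup: `alert-emailer` as the default and `slack_demo` for alerts labeled `severity: slack`. It is a simplified model, not Alertmanager’s implementation. It handles only exact `match` labels and ignores options such as `continue` and regex matchers. The `Route` class and `pick_receiver` function are hypothetical names used for illustration.

```python
from typing import Dict, List, Optional


class Route:
    """A node in a simplified routing tree (exact label matching only)."""

    def __init__(self, receiver: str,
                 match: Optional[Dict[str, str]] = None,
                 routes: Optional[List["Route"]] = None):
        self.receiver = receiver
        self.match = match or {}
        self.routes = routes or []

    def matches(self, labels: Dict[str, str]) -> bool:
        # Every label in `match` must be present with the same value.
        return all(labels.get(k) == v for k, v in self.match.items())


def pick_receiver(route: Route, labels: Dict[str, str]) -> str:
    """First matching child route wins; otherwise the current node handles it."""
    for child in route.routes:
        if child.matches(labels):
            return pick_receiver(child, labels)
    return route.receiver


# Routing tree equivalent to the one used in this guide.
ROOT = Route(
    "alert-emailer",
    routes=[Route("slack_demo", match={"severity": "slack"})],
)

print(pick_receiver(ROOT, {"alertname": "HighPodMemory", "severity": "slack"}))
print(pick_receiver(ROOT, {"alertname": "InstanceDown", "severity": "page"}))
```

The first call resolves to `slack_demo` and the second falls back to `alert-emailer`, because no child route matches `severity: page`.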

Create a file named AlertManagerConfigmap.yaml and copy the following contents.

    kind: ConfigMap
    apiVersion: v1
    metadata:
      name: alertmanager-config
      namespace: monitoring
    data:
      config.yml: |-
        global:
        templates:
        - '/etc/alertmanager/*.tmpl'
        route:
          receiver: alert-emailer
          group_by: ['alertname', 'priority']
          group_wait: 10s
          repeat_interval: 30m
          routes:
            - receiver: slack_demo
              # Send severity=slack alerts to slack.
              match:
                severity: slack
              group_wait: 10s
              repeat_interval: 1m
        receivers:
        - name: alert-emailer
          email_configs:
          - to: [email protected]
            send_resolved: false
            from: [email protected]
            smarthost: smtp.example.com:25
            require_tls: false
        - name: slack_demo
          slack_configs:
          - api_url: https://hooks.slack.com/services/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sz4Qk
            channel: '#devopscube-demo'

Let’s create the config map using kubectl.

    kubectl create -f AlertManagerConfigmap.yaml

Config Map for Alert Template

We need alert templates for all the receivers we use (email, Slack, etc). Alert manager will dynamically substitute the values and deliver alerts to the receivers based on the template. You can customize these templates based on your needs.

Create a file named AlertTemplateConfigMap.yaml and copy the contents of the Alert Manager Template YAML from the GitHub repository cloned above.

Create the configmap using kubectl.

    kubectl create -f AlertTemplateConfigMap.yaml

Create a Deployment

In this deployment, we will mount the two config maps we created.

Create a file called Deployment.yaml with the following contents.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: alertmanager
      namespace: monitoring
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: alertmanager
      template:
        metadata:
          name: alertmanager
          labels:
            app: alertmanager
        spec:
          containers:
          - name: alertmanager
            image: prom/alertmanager:latest
            args:
              - "--config.file=/etc/alertmanager/config.yml"
              - "--storage.path=/alertmanager"
            ports:
            - name: alertmanager
              containerPort: 9093
            resources:
              requests:
                cpu: 500m
                memory: 500M
              limits:
                cpu: 1
                memory: 1Gi
            volumeMounts:
            - name: config-volume
              mountPath: /etc/alertmanager
            - name: templates-volume
              mountPath: /etc/alertmanager-templates
            - name: alertmanager
              mountPath: /alertmanager
          volumes:
          - name: config-volume
            configMap:
              name: alertmanager-config
          - name: templates-volume
            configMap:
              name: alertmanager-templates
          - name: alertmanager
            emptyDir: {}

Create the alert manager deployment using kubectl.

    kubectl create -f Deployment.yaml

Create the Alert Manager Service Endpoint

We need to expose the alert manager using NodePort or Load Balancer just to access the Web UI. Prometheus will talk to the alert manager using the internal service endpoint.

Create a Service.yaml file with the following contents.

    apiVersion: v1
    kind: Service
    metadata:
      name: alertmanager
      namespace: monitoring
      annotations:
        prometheus.io/scrape: 'true'
        prometheus.io/port: '9093'
    spec:
      selector:
        app: alertmanager
      type: NodePort
      ports:
        - port: 9093
          targetPort: 9093
          nodePort: 31000

Create the service using kubectl.

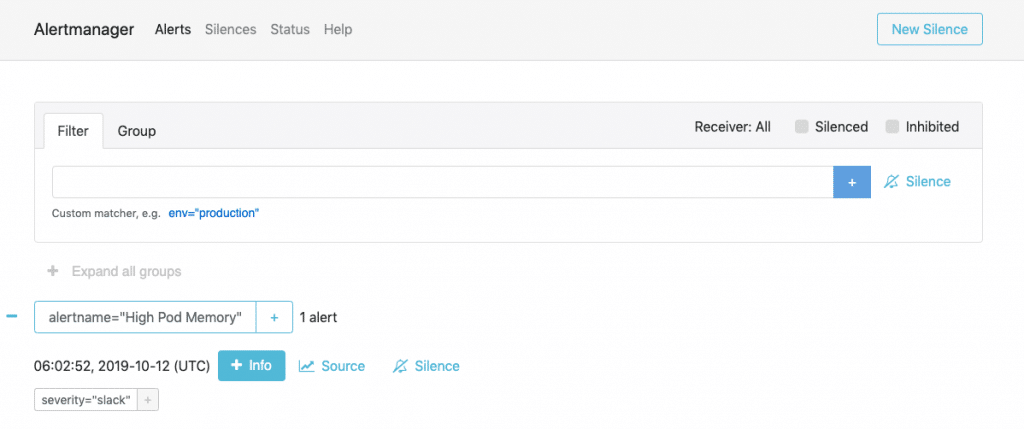

    kubectl create -f Service.yaml

Now, you will be able to access Alert Manager on Node Port 31000. For example,

http://35.114.150.153:31000
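Once the service is up, a quick programmatic check can confirm two things: that Alert Manager answers on its health endpoint, and that Prometheus lists it as an active Alertmanager. The sketch below is one way to do this in Python with only the standard library. It assumes Alertmanager’s `/-/healthy` endpoint and the Prometheus HTTP API endpoint `/api/v1/alertmanagers`. The function names, and the Prometheus URL you pass in, are illustrative and not part of the manifests in this guide.

```python
import json
import urllib.request
from typing import Any, Dict, List


def fetch_json(url: str, timeout: float = 5.0) -> Dict[str, Any]:
    """GET a URL and decode the JSON response body."""
    with urllib.request.urlopen(url, timeout=timeout) as resp:
        return json.load(resp)


def alertmanager_healthy(base_url: str, timeout: float = 5.0) -> bool:
    """Return True if <base_url>/-/healthy answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/-/healthy", timeout=timeout) as resp:
            return resp.status == 200
    except OSError:
        # URLError and HTTPError are subclasses of OSError.
        return False


def active_alertmanager_urls(payload: Dict[str, Any]) -> List[str]:
    """Extract active Alertmanager URLs from a /api/v1/alertmanagers response."""
    if payload.get("status") != "success":
        return []
    active = payload.get("data", {}).get("activeAlertmanagers", [])
    return [entry["url"] for entry in active if "url" in entry]
```

For example, `alertmanager_healthy("http://35.114.150.153:31000")` checks the NodePort address shown above. Passing the result of `fetch_json("<your-prometheus-url>/api/v1/alertmanagers")` to `active_alertmanager_urls` should return an entry containing `alertmanager.monitoring.svc:9093` if Prometheus picked up the alerting configuration. An empty list usually points to a wrong service name or namespace in the Prometheus config.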

27 comments

Hi,

I have followed this tutorial step by step, but the alertmanager pod is down.

    NAME                                READY   STATUS    RESTARTS   AGE
    pod/alertmanager-65c55cb747-5nz4z   0/1     Pending   0          21m

Can you guide me on what went wrong here?

When you describe the pod, what does it say?

    Name:             alertmanager-65c55cb747-hc5wl
    Namespace:        monitoring
    Priority:         0
    Service Account:  default
    Node:
    Labels:           app=alertmanager
                      pod-template-hash=65c55cb747
    Annotations:
    Status:           Pending
    IP:
    IPs:
    Controlled By:    ReplicaSet/alertmanager-65c55cb747
    Containers:
      alertmanager:
        Image:      prom/alertmanager:latest
        Port:       9093/TCP
        Host Port:  0/TCP
        Args:
          --config.file=/etc/alertmanager/config.yml
          --storage.path=/alertmanager
        Limits:
          cpu:     1
          memory:  1Gi
        Requests:
          cpu:     500m
          memory:  500M
        Environment:
        Mounts:
          /alertmanager from alertmanager (rw)
          /etc/alertmanager from config-volume (rw)
          /etc/alertmanager-templates from templates-volume (rw)
          /var/run/secrets/kubernetes.io/serviceaccount from kube-api-access-tctqj (ro)
    Conditions:
      Type          Status
      PodScheduled  False
    Volumes:
      config-volume:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      alertmanager-config
        Optional:  false
      templates-volume:
        Type:      ConfigMap (a volume populated by a ConfigMap)
        Name:      alertmanager-templates
        Optional:  false
      alertmanager:
        Type:       EmptyDir (a temporary directory that shares a pod's lifetime)
        Medium:
        SizeLimit:
      kube-api-access-tctqj:
        Type:                    Projected (a volume that contains injected data from multiple sources)
        TokenExpirationSeconds:  3607
        ConfigMapName:           kube-root-ca.crt
        ConfigMapOptional:
        DownwardAPI:             true
    QoS Class:       Burstable
    Node-Selectors:
    Tolerations:     node.kubernetes.io/not-ready:NoExecute op=Exists for 300s
                     node.kubernetes.io/unreachable:NoExecute op=Exists for 300s
    Events:
      Type     Reason            Age   From               Message
      ----     ------            ----  ----               -------
      Warning  FailedScheduling  2m3s  default-scheduler  0/1 nodes are available: 1 Insufficient cpu. preemption: 0/1 nodes are available: 1 No preemption victims found for incoming pod.

Hi,

The output shows that your cluster doesn’t have enough CPU to schedule the pod (the event reports "Insufficient cpu"). Consider adding nodes.

I added Prometheus and AlertManager. My rule is:

    groups:
    - name: example
      rules:
      - alert: InstanceDown
        expr: sum(up{ApolloService="eliminatorcomponent"}) == 0
        for: 5m
        labels:
          severity: page
        annotations:
          summary: "EliminatorComponent service down"
          description: "EliminatorComponent service instance has been down for more than 5 minutes."

When I stop the service, I see a Pending alert in Prometheus. However, I do not see it in AlertManager. What should I check for this?

    alerting:
      alertmanagers:
      - scheme: http
        static_configs:
        - targets:
          - "alertmanager:9093"

Hello there! Thanks for the great tutorial! I have one question regarding the alert for “High Pod Memory” in prometheus.rules. I have been receiving those alerts and I’m trying to set a way for it to tell me which pod exactly is triggering the alert, but I haven’t been successful… Is there a way to add that in the alert? I tried using $labels.pod in the description, but it appears empty…

Very nice tutorial. Can you explain how to extend this to deploy Alertmanager for high availability in Kubernetes? How do we create multiple Alertmanagers and connect them as peers?

Is there a way to configure the prometheus endpoint to use in Alertmanager? All of the links Alertmanager shows link to the service endpoint which is not routable outside of the cluster.

Hi Peter,

If you want routable endpoints, you need to use ingress.

Very clear. Just waiting for the Prometheus Operator tutorial 🙂

Hi Enzo

Thank you. I will be publishing the operator version soon 🙂

Hello, thank you for the clear tutorial. But my deployment is not running due to a “Back-off restarting failed container” issue. How could I fix this?

Hi Jerry,

What do the pod events and logs say? Do you have enough node CPU and memory to run the pods?

Hi, I am facing the same issue. How do I fix it? I have used the same YAML as shown in the link.

Hi Prasad,

Can you elaborate on the issue?

This is the error I am facing. Whereas the alert is reflected in my alert manager, but I am not getting emails in my inbox

In Prometheus, it is showing down because of the wrong port in the Prometheus annotation in the Alert manager service manifest. I have corrected it in Github as well as the post.

It was 8080:

    annotations:
      prometheus.io/scrape: 'true'
      prometheus.io/port: '8080'

Now I have changed it to the correct port.

    annotations:
      prometheus.io/scrape: 'true'
      prometheus.io/port: '9093'

Please re-apply the service, and the service status will be up.

For email, please check if the SMTP configurations are correct. You need a valid SMTP host for sending emails from the alert manager.

Hi

Amazing tutorial, very well explained.

I have one question: to get node and service details, do I need to deploy anything else? I have configured a rule for the kubelet service but have not received an alert by mail, while I do get your high memory demo mail. Please let me know.

thanks

Thank you for a very good blog. I have a few challenges with the setup. I see that in the targets section, the alert manager seems to be down. How do I fix it?

    http://172.17.0.6:8080/
    DOWN  instance="172.17.0.6:8080" job="kubernetes-service-endpoints" kubernetes_name="alertmanager" kubernetes_namespace="monitoring"  1.228s ago  873.8us  Get "http://172.17.0.6:8080/": dial tcp 172.17.0.6:8080: connect: connection refused

Hi, please check the Prometheus SD configs from here. https://raw.githubusercontent.com/bibinwilson/kubernetes-prometheus/master/config-map.yaml

Nicely explained. Thank you for your descriptive blog.

I have set up Prometheus, Grafana, kube-metrics, and Alertmanager.

But I am not able to see alerts on the Alertmanager page.

Could you guide me on that?

Sure, Deep. Are the alerts triggering? You can set up an alert with a very low threshold and see if the alert is triggering.

I can see the alerts are triggering.

Initially, I set up two alerts: 1) instance showing status down, and 2) High Pod Memory (which is also in your config). Moreover, on the Prometheus web page under the targets section, the Alertmanager endpoint is showing as down.

However, on the Alertmanager page, I am not able to see the alerts for instance down and High Pod Memory.

Did you check if the alert manager URL is reachable from Prometheus?

Now it’s working fine. I was using a different namespace for my env.

Thanks again for your support.

You are welcome!

nice tutorial! there’s one more question:

how to verify Prometheus and Alertmanager are connected?