In this Kubernetes tutorial, you'll learn how to set up the EFK stack on a Kubernetes cluster for log streaming, log analysis, and log monitoring.

Check out part 1 of this Kubernetes logging series, where we covered Kubernetes logging fundamentals and patterns for beginners.

When running multiple applications and services on a Kubernetes cluster, it makes more sense to stream all of your application and Kubernetes cluster logs to one centralized logging infrastructure for easy log analysis.

This beginner's guide aims to walk you through the important technical aspects of Kubernetes logging through the EFK stack.

What is the EFK Stack?

EFK stands for Elasticsearch, Fluentd, and Kibana. EFK is a popular open-source choice for Kubernetes log aggregation and analysis.

- Elasticsearch is a distributed and scalable search engine commonly used to sift through large volumes of log data. It is a NoSQL database built on Apache Lucene, a search library. Its primary job is to store the logs shipped by Fluentd and make them available for search and retrieval.

- Fluentd is a log shipper. It is an open-source log collection agent that supports multiple data sources and output formats. It can also forward logs to solutions such as Stackdriver, CloudWatch, Elasticsearch, Splunk, BigQuery, and many more. In short, it is a unifying layer between the systems that generate log data and the systems that store it.

- Kibana is a UI tool for querying, data visualization, and dashboards. It lets you explore your log data through a web interface, build visualizations for event logs, and run queries that filter information to detect issues. You can build virtually any type of dashboard with Kibana. Kibana Query Language (KQL) is used to query Elasticsearch data. Here, we use Kibana to query the data indexed in Elasticsearch.

Elasticsearch helps make sense of huge amounts of unstructured data and is used by many organizations. It is commonly deployed alongside Kibana.

Note: For Kubernetes, Fluentd is a better fit than Logstash because Fluentd can parse container logs without any extra configuration. Moreover, it is a CNCF project.

Setup EFK Stack on Kubernetes

We will walk through the step-by-step process of setting up EFK using Kubernetes manifests. You can find all the manifests used in this blog in the Kubernetes EFK GitHub repo. The manifests for each EFK component are kept in a separate folder.

You can clone the repo and use the manifests while you follow along with the article.

```shell
git clone https://github.com/scriptcamp/kubernetes-efk
```

Note: All the EFK components are deployed in the default namespace.

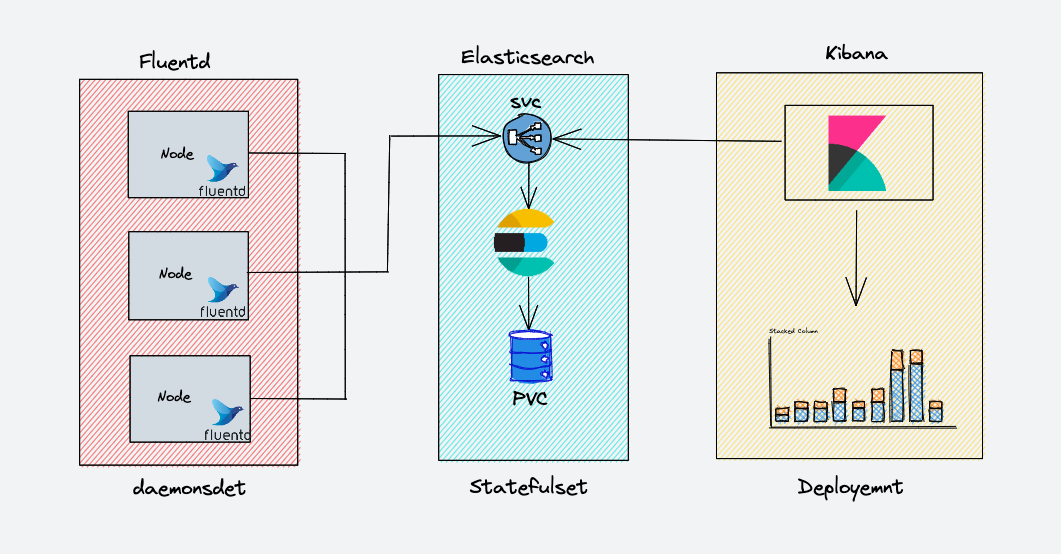

EFK Architecture

The following diagram shows the high level architecture of EFK stack that we are going to build.

The EFK components are deployed as follows:

- Fluentd: Deployed as a DaemonSet because it needs to collect the container logs from all the nodes. It connects to the Elasticsearch service endpoint to forward the logs.

- Elasticsearch: Deployed as a StatefulSet because it holds the log data. We also expose a service endpoint for Fluentd and Kibana to connect to it.

- Kibana: Deployed as a Deployment and connects to the Elasticsearch service endpoint.

Deploy Elasticsearch Statefulset

Elasticsearch is deployed as a StatefulSet, and its replicas discover each other through a headless service. The headless service gives each pod a stable DNS name.

Save the following manifest as es-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: elasticsearch
  labels:
    app: elasticsearch
spec:
  selector:
    app: elasticsearch
  clusterIP: None   # headless: DNS returns the individual pod addresses
  ports:
    - port: 9200
      name: rest
    - port: 9300
      name: inter-node
```

Let's create it now.

```shell
kubectl create -f es-svc.yaml
```

Before we create the Elasticsearch StatefulSet, recall that a StatefulSet requires a storage class to be defined beforehand, which it uses to create volumes whenever they are required.

Note: In a production environment you may need 400-500 GB volumes for Elasticsearch, but here we deploy with 3 GiB PVCs for demonstration.

Let's create the Elasticsearch statefulset now. Save the following manifest as es-sts.yaml

Note: The StatefulSet creates the PVCs with the cluster's default storage class. If you have a custom storage class for PVCs, you can set it in the volumeClaimTemplates section by uncommenting the storageClassName parameter.

```yaml
apiVersion: apps/v1
kind: StatefulSet
metadata:
  name: es-cluster
spec:
  serviceName: elasticsearch   # headless service that provides per-pod DNS names
  replicas: 3
  selector:
    matchLabels:
      app: elasticsearch
  template:
    metadata:
      labels:
        app: elasticsearch
    spec:
      containers:
        - name: elasticsearch
          image: docker.elastic.co/elasticsearch/elasticsearch:7.5.0
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          ports:
            - containerPort: 9200
              name: rest
              protocol: TCP
            - containerPort: 9300
              name: inter-node
              protocol: TCP
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
          env:
            - name: cluster.name
              value: k8s-logs
            # Node name = pod name (es-cluster-0, es-cluster-1, ...)
            - name: node.name
              valueFrom:
                fieldRef:
                  fieldPath: metadata.name
            - name: discovery.seed_hosts
              value: "es-cluster-0.elasticsearch,es-cluster-1.elasticsearch,es-cluster-2.elasticsearch"
            - name: cluster.initial_master_nodes
              value: "es-cluster-0,es-cluster-1,es-cluster-2"
            - name: ES_JAVA_OPTS
              value: "-Xms512m -Xmx512m"
      initContainers:
        # Give the Elasticsearch user (UID/GID 1000) ownership of the data volume
        - name: fix-permissions
          image: busybox
          command: ["sh", "-c", "chown -R 1000:1000 /usr/share/elasticsearch/data"]
          securityContext:
            privileged: true
          volumeMounts:
            - name: data
              mountPath: /usr/share/elasticsearch/data
        # Raise the node-level vm.max_map_count kernel setting Elasticsearch requires
        - name: increase-vm-max-map
          image: busybox
          command: ["sysctl", "-w", "vm.max_map_count=262144"]
          securityContext:
            privileged: true
        # Note: ulimit only changes the limit of this init container's own shell;
        # it does not raise the file descriptor limit of the Elasticsearch container.
        - name: increase-fd-ulimit
          image: busybox
          command: ["sh", "-c", "ulimit -n 65536"]
          securityContext:
            privileged: true
  volumeClaimTemplates:
    - metadata:
        name: data
        labels:
          app: elasticsearch
      spec:
        accessModes: [ "ReadWriteOnce" ]
        # storageClassName: ""
        resources:
          requests:
            storage: 3Gi
```

Let's create the StatefulSet.

```shell
kubectl create -f es-sts.yaml
```

Verify Elasticsearch Deployment

After the Elasticsearch pods are in the Running state, let's verify the Elasticsearch StatefulSet. The easiest way to do this is to check the health of the cluster. To do so, port-forward the Elasticsearch pod's port 9200.

```shell
kubectl port-forward es-cluster-0 9200:9200
```

To check the health of the Elasticsearch cluster, run the following command in another terminal (the port-forward keeps running in the first one).

```shell
curl "http://localhost:9200/_cluster/health?pretty"
```

The output shows the status of the Elasticsearch cluster. If all the steps were followed correctly, the status should be 'green'.

```json
{
  "cluster_name" : "k8s-logs",
  "status" : "green",
  "timed_out" : false,
  "number_of_nodes" : 3,
  "number_of_data_nodes" : 3,
  "active_primary_shards" : 8,
  "active_shards" : 16,
  "relocating_shards" : 0,
  "initializing_shards" : 0,
  "unassigned_shards" : 0,
  "delayed_unassigned_shards" : 0,
  "number_of_pending_tasks" : 0,
  "number_of_in_flight_fetch" : 0,
  "task_max_waiting_in_queue_millis" : 0,
  "active_shards_percent_as_number" : 100.0
}
```

Tip on Elasticsearch Headless Service

As you know, a headless service does not act as a load balancer; it is used to address a group of pods together. Headless services have another use case as well.

We can use them to get the address of individual pods. Let's take an example.

We have three pods running as part of the Elasticsearch StatefulSet.

| Pod name | Pod address |
|---|---|
| es-cluster-0 | 172.20.20.134 |
| es-cluster-1 | 172.20.10.134 |
| es-cluster-2 | 172.20.30.89 |

Elasticsearch pods and their addresses.

A headless service named "elasticsearch" points to these pods.

If you run nslookup from a pod in the same namespace, you can resolve the address of each of these pods through the headless service.

```text
$ nslookup es-cluster-0.elasticsearch.default.svc.cluster.local
Server:    10.100.0.10
Address:   10.100.0.10#53

Name:      es-cluster-0.elasticsearch.default.svc.cluster.local
Address:   172.20.20.134
```

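This concept is used very commonly in Kubernetes, so it is worth understanding clearly. In fact, the StatefulSet's environment variables rely on it: "discovery.seed_hosts" lists each pod's DNS name through the headless service (for example, es-cluster-0.elasticsearch), and "cluster.initial_master_nodes" lists the matching node names, which are the pod names set through node.name.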
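Because StatefulSet pods get predictable ordinal names, both discovery values can be derived from the StatefulSet name, the headless service name, and the replica count. The following is a minimal Python sketch of that derivation, useful for keeping the two env vars consistent if you change the replica count. The helper names (pod_names, seed_hosts, initial_master_nodes, fqdn) are hypothetical, and the fully qualified form assumes the default cluster domain cluster.local.

```python
"""Derive Elasticsearch discovery settings from StatefulSet naming rules.

Sketch under assumptions: pods are named <statefulset>-<ordinal> (the
StatefulSet naming scheme), the headless service is in the same namespace so
the short <pod>.<service> form resolves, and the cluster domain is cluster.local.
"""
from typing import List


def pod_names(statefulset: str, replicas: int) -> List[str]:
    """StatefulSet pods are named <name>-0, <name>-1, ..., <name>-(replicas-1)."""
    return [f"{statefulset}-{i}" for i in range(replicas)]


def seed_hosts(statefulset: str, service: str, replicas: int) -> str:
    """Value for discovery.seed_hosts: one <pod>.<service> DNS name per replica."""
    return ",".join(f"{pod}.{service}" for pod in pod_names(statefulset, replicas))


def initial_master_nodes(statefulset: str, replicas: int) -> str:
    """Value for cluster.initial_master_nodes: node.name is the pod name here."""
    return ",".join(pod_names(statefulset, replicas))


def fqdn(pod: str, service: str, namespace: str = "default",
         cluster_domain: str = "cluster.local") -> str:
    """Fully qualified per-pod name, the form used in the nslookup example."""
    return f"{pod}.{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    print(seed_hosts("es-cluster", "elasticsearch", 3))
    print(initial_master_nodes("es-cluster", 3))
    print(fqdn("es-cluster-0", "elasticsearch"))
```

With the values from es-sts.yaml, these functions reproduce the two env var strings in the manifest; scaling to more replicas only means changing the count in one place.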

Now that we have a running Elasticsearch cluster, let's move on to Kibana.

Deploy Kibana Deployment & Service

Kibana can be deployed as a simple Kubernetes Deployment. The following Kibana manifest defines the environment variable ELASTICSEARCH_HOSTS to configure the Elasticsearch cluster endpoint, which Kibana uses to connect to Elasticsearch. (Kibana 7.x reads ELASTICSEARCH_HOSTS; the older ELASTICSEARCH_URL variable only applies to Kibana 6.x images.)

Create the Kibana deployment manifest as kibana-deployment.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: kibana
  labels:
    app: kibana
spec:
  replicas: 1
  selector:
    matchLabels:
      app: kibana
  template:
    metadata:
      labels:
        app: kibana
    spec:
      containers:
        - name: kibana
          image: docker.elastic.co/kibana/kibana:7.5.0
          resources:
            limits:
              cpu: 1000m
            requests:
              cpu: 100m
          env:
            # Maps to the Kibana 7.x setting elasticsearch.hosts
            - name: ELASTICSEARCH_HOSTS
              value: http://elasticsearch:9200
          ports:
            - containerPort: 5601
```

Create the deployment now.

```shell
kubectl create -f kibana-deployment.yaml
```

Next, let's create a Service of type NodePort to access the Kibana UI through a node's IP address. We use a NodePort here for demonstration purposes; in a real project, you would typically expose Kibana through a Kubernetes Ingress backed by a ClusterIP service.

Save the following manifest as kibana-svc.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: kibana-np
spec:
  selector:
    app: kibana
  type: NodePort
  ports:
    - port: 8080        # service port inside the cluster
      targetPort: 5601  # Kibana container port
      nodePort: 30000   # port opened on every node
```

Create the kibana-svc now.

```shell
kubectl create -f kibana-svc.yaml
```

Now you will be able to access Kibana at http://<node-ip>:30000

Verify Kibana Deployment

After the Kibana pod is in the Running state, let's verify the Kibana deployment. The easiest way to do this is to access the Kibana UI.

To check the status, port-forward the Kibana pod's port 5601. If you have created the NodePort service, you can use that instead.

```shell
kubectl port-forward <kibana-pod-name> 5601:5601
```

After this, access the UI through a web browser or make a request using curl:

```shell
curl http://localhost:5601/app/kibana
```

If the Kibana UI loads or curl returns a valid response, Kibana is running correctly.
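Kibana can take a minute or two to start, so a scripted check that retries is often handier than a single curl. Below is a minimal Python sketch, assuming Kibana is reachable on localhost:5601 through the port-forward and that GET /api/status returns the Kibana 7.x JSON shape {"status": {"overall": {"state": "green"}}}; treat that response shape, and the helper names overall_state and wait_for_kibana, as assumptions rather than a documented contract.

```python
"""Poll Kibana until it reports a green overall status (sketch).

Assumptions: Kibana is port-forwarded to localhost:5601, and /api/status
returns the Kibana 7.x shape {"status": {"overall": {"state": "green"}}}.
"""
import json
import time
import urllib.request

KIBANA_URL = "http://localhost:5601"  # assumption: kubectl port-forward on 5601


def overall_state(status_doc: dict) -> str:
    """Extract the overall state ("green", "yellow", "red") or "unknown"."""
    return status_doc.get("status", {}).get("overall", {}).get("state", "unknown")


def wait_for_kibana(url: str = KIBANA_URL, attempts: int = 30, delay: float = 5.0) -> bool:
    """Return True once /api/status reports green, False after all attempts."""
    for attempt in range(attempts):
        try:
            with urllib.request.urlopen(f"{url}/api/status", timeout=5) as resp:
                if overall_state(json.load(resp)) == "green":
                    return True
        except (OSError, ValueError):
            # OSError covers refused connections and HTTP errors (e.g. 503 while
            # starting); ValueError covers a non-JSON body.
            pass
        if attempt < attempts - 1:
            time.sleep(delay)
    return False
```

For example, calling wait_for_kibana() right after kubectl create -f kibana-deployment.yaml (with the port-forward running) blocks until Kibana is ready or about two and a half minutes have passed.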

Let's move on to the Fluentd component now.

Deploy Fluentd Kubernetes Manifests

Fluentd is deployed as a DaemonSet because it has to stream logs from all the nodes in the cluster. In addition, it needs special permissions to list pods and read their metadata across all namespaces.

In Kubernetes, a component gets permissions through a service account combined with cluster roles and cluster role bindings. Let's go ahead and create the required service account and roles.

Create Fluentd Cluster Role

A ClusterRole in Kubernetes contains rules that represent a set of permissions. For Fluentd, we grant get, list, and watch permissions on pods and namespaces.

Create a manifest fluentd-role.yaml

```yaml
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: fluentd
  labels:
    app: fluentd
rules:
  - apiGroups:
      - ""
    resources:
      - pods
      - namespaces
    verbs:
      - get
      - list
      - watch
```

Apply the manifest

```shell
kubectl create -f fluentd-role.yaml
```

Create Fluentd Service Account

A service account in Kubernetes provides an identity for the processes running in a pod. Here, we create a service account for the Fluentd pods.

Create a manifest fluentd-sa.yaml

```yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: fluentd
  labels:
    app: fluentd
```

Apply the manifest

```shell
kubectl create -f fluentd-sa.yaml
```

Create Fluentd Cluster Role Binding

A ClusterRoleBinding in Kubernetes grants the permissions defined in a ClusterRole to subjects such as a service account. Here, we bind the fluentd ClusterRole to the fluentd service account created above.
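To make the role, binding, and service account relationship concrete, here is a deliberately simplified Python model of the decision. It is not the Kubernetes RBAC authorizer: it ignores resourceNames, non-resource URLs, aggregated roles, and namespaced Roles/RoleBindings, and treats "*" as a wildcard. The dictionaries mirror fluentd-role.yaml and fluentd-rb.yaml; the function names are hypothetical.

```python
"""Simplified model of ClusterRole + ClusterRoleBinding authorization.

Sketch only, NOT the real Kubernetes authorizer: ignores resourceNames,
non-resource URLs, aggregation, and namespaced Roles/RoleBindings.
"""
from typing import Dict, List

Rule = Dict[str, List[str]]

# Mirrors the rules in fluentd-role.yaml
FLUENTD_ROLE_RULES: List[Rule] = [
    {"apiGroups": [""], "resources": ["pods", "namespaces"],
     "verbs": ["get", "list", "watch"]},
]

# Mirrors fluentd-rb.yaml
FLUENTD_BINDING = {
    "roleRef": {"kind": "ClusterRole", "name": "fluentd"},
    "subjects": [{"kind": "ServiceAccount", "name": "fluentd", "namespace": "default"}],
}


def _matches(allowed: List[str], value: str) -> bool:
    """A rule field matches if it lists the value or the "*" wildcard."""
    return "*" in allowed or value in allowed


def rule_allows(rule: Rule, api_group: str, resource: str, verb: str) -> bool:
    """A rule allows a request only if API group, resource and verb all match."""
    return (_matches(rule["apiGroups"], api_group)
            and _matches(rule["resources"], resource)
            and _matches(rule["verbs"], verb))


def sa_allowed(binding: dict, roles: Dict[str, List[Rule]], namespace: str,
               sa_name: str, api_group: str, resource: str, verb: str) -> bool:
    """True if the binding names this service account and its role allows the request."""
    bound = any(s["kind"] == "ServiceAccount" and s["name"] == sa_name
                and s["namespace"] == namespace for s in binding["subjects"])
    rules = roles.get(binding["roleRef"]["name"], [])
    return bound and any(rule_allows(r, api_group, resource, verb) for r in rules)
```

In this model the fluentd service account in default may list pods but not delete them. On a live cluster, the authoritative check is kubectl auth can-i list pods --as=system:serviceaccount:default:fluentd.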

Create a manifest fluentd-rb.yaml

```yaml
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: fluentd
roleRef:
  kind: ClusterRole
  name: fluentd
  apiGroup: rbac.authorization.k8s.io
subjects:
  - kind: ServiceAccount
    name: fluentd
    namespace: default
```

Apply the manifest

```shell
kubectl create -f fluentd-rb.yaml
```

Deploy Fluentd DaemonSet

Let us deploy the daemonset now.

Save the following as fluentd-ds.yaml

```yaml
apiVersion: apps/v1
kind: DaemonSet
metadata:
  name: fluentd
  labels:
    app: fluentd
spec:
  selector:
    matchLabels:
      app: fluentd
  template:
    metadata:
      labels:
        app: fluentd
    spec:
      serviceAccount: fluentd
      serviceAccountName: fluentd
      containers:
        - name: fluentd
          image: fluent/fluentd-kubernetes-daemonset:v1.4.2-debian-elasticsearch-1.1
          env:
            # Elasticsearch endpoint that Fluentd ships logs to
            - name: FLUENT_ELASTICSEARCH_HOST
              value: "elasticsearch.default.svc.cluster.local"
            - name: FLUENT_ELASTICSEARCH_PORT
              value: "9200"
            - name: FLUENT_ELASTICSEARCH_SCHEME
              value: "http"
            # Disable the image's systemd journal input
            - name: FLUENTD_SYSTEMD_CONF
              value: disable
          resources:
            limits:
              memory: 512Mi
            requests:
              cpu: 100m
              memory: 200Mi
          volumeMounts:
            - name: varlog
              mountPath: /var/log
            - name: varlibdockercontainers
              mountPath: /var/lib/docker/containers
              readOnly: true
      terminationGracePeriodSeconds: 30
      volumes:
        # Host log directories that Fluentd tails on every node
        - name: varlog
          hostPath:
            path: /var/log
        - name: varlibdockercontainers
          hostPath:
            path: /var/lib/docker/containers
```

Note: In the DaemonSet manifest, we use two environment variables, "FLUENT_ELASTICSEARCH_HOST" and "FLUENT_ELASTICSEARCH_PORT". Fluentd uses these Elasticsearch values to ship the collected logs.

Let's apply the Fluentd manifest

```shell
kubectl create -f fluentd-ds.yaml
```

Verify Fluentd Setup

To verify the Fluentd installation, let's start a pod that generates logs continuously. We will then look for these logs in Kibana.

Save the following as test-pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: counter
spec:
  containers:
    - name: count
      image: busybox
      # Print an incrementing counter to stdout once per second
      args: [/bin/sh, -c, 'i=0; while true; do echo "Thanks for visiting devopscube! $i"; i=$((i+1)); sleep 1; done']
```

Apply the manifest

```shell
kubectl create -f test-pod.yaml
```

Now, let's head to Kibana to check whether Fluentd is picking up the logs from this pod and storing them in Elasticsearch. Follow the steps below:

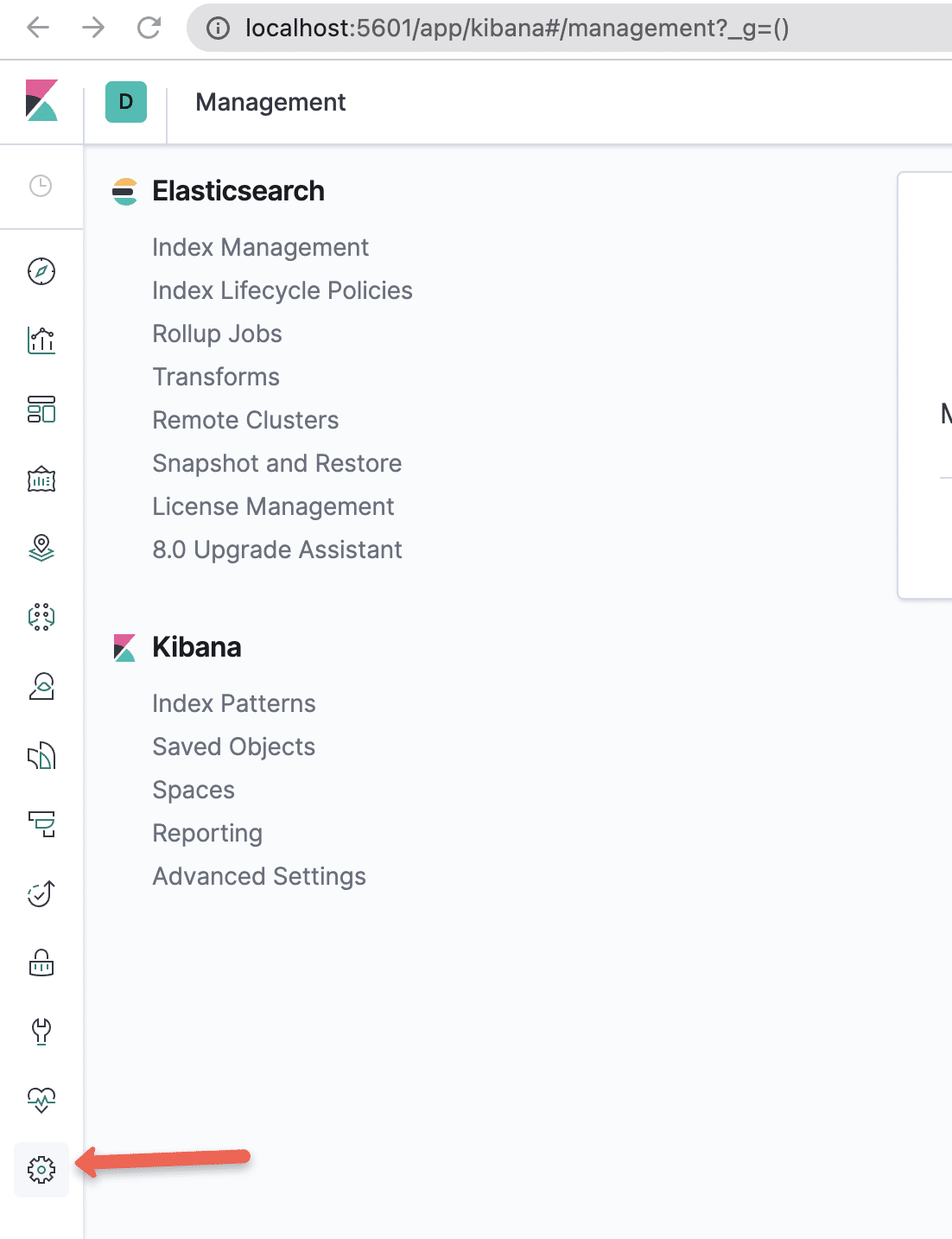

Step 1: Open the Kibana UI using the port-forward or the NodePort service endpoint, and head to the Management console.

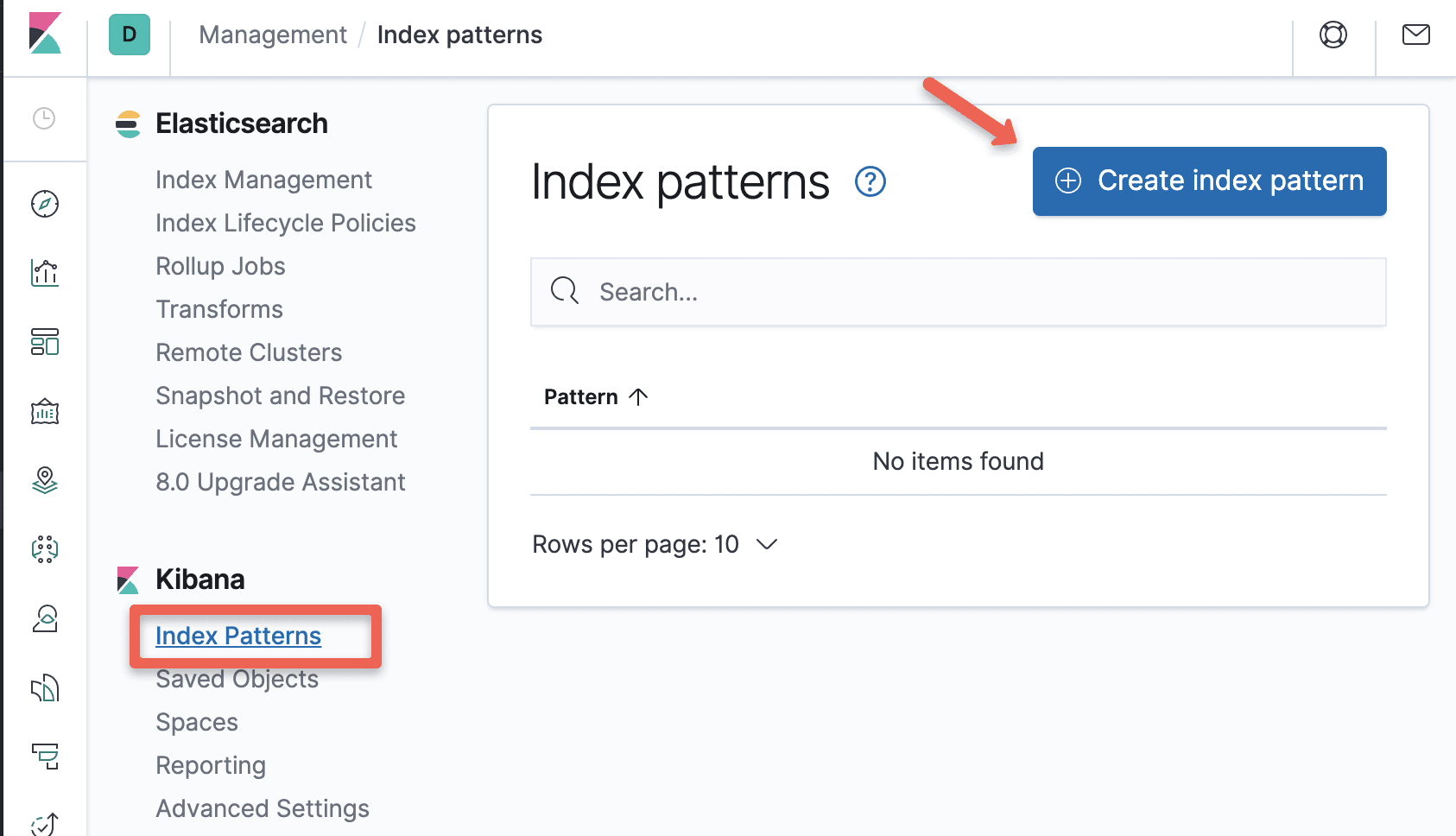

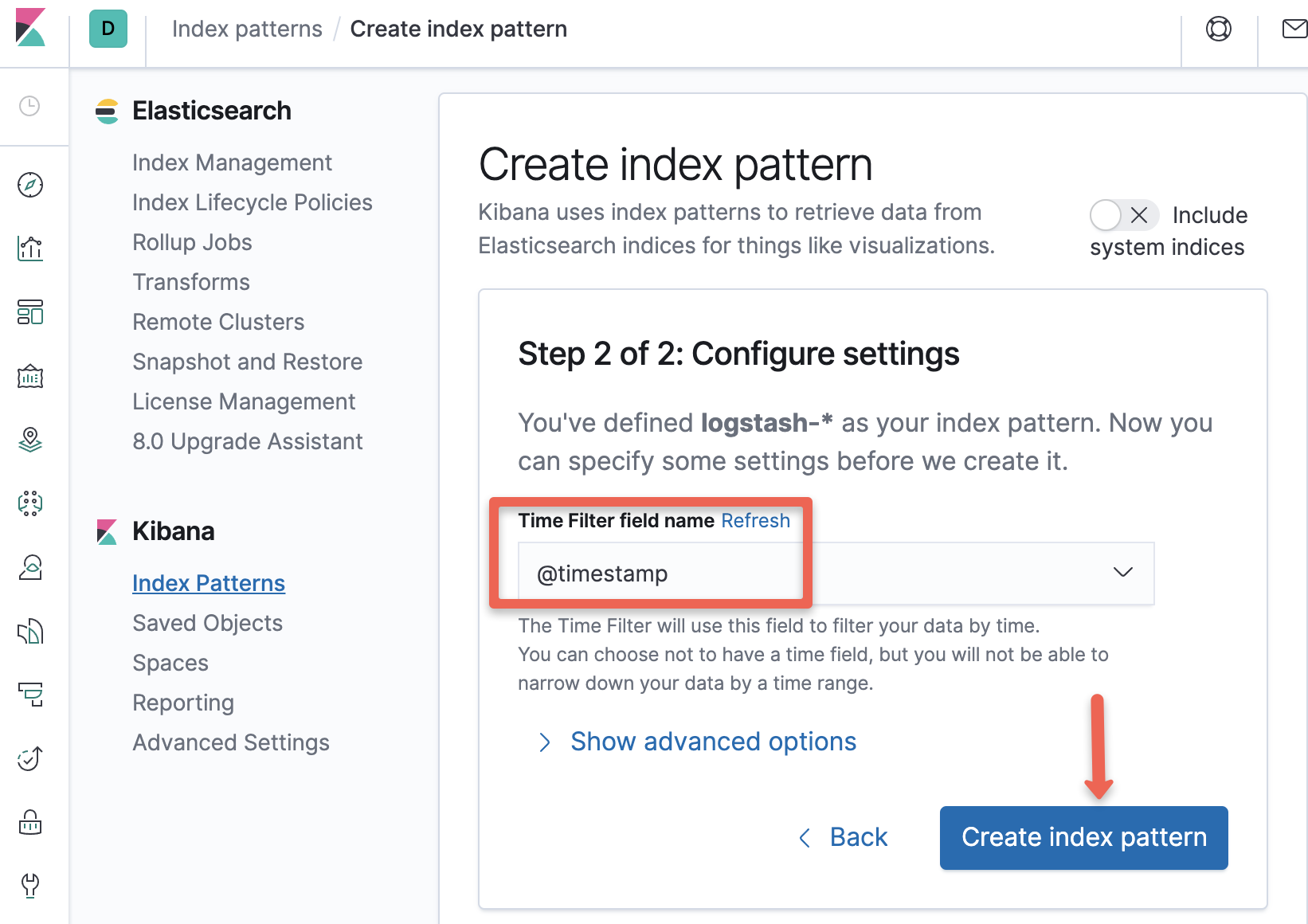

Step 2: Select the "Index Patterns" option under the Kibana section.

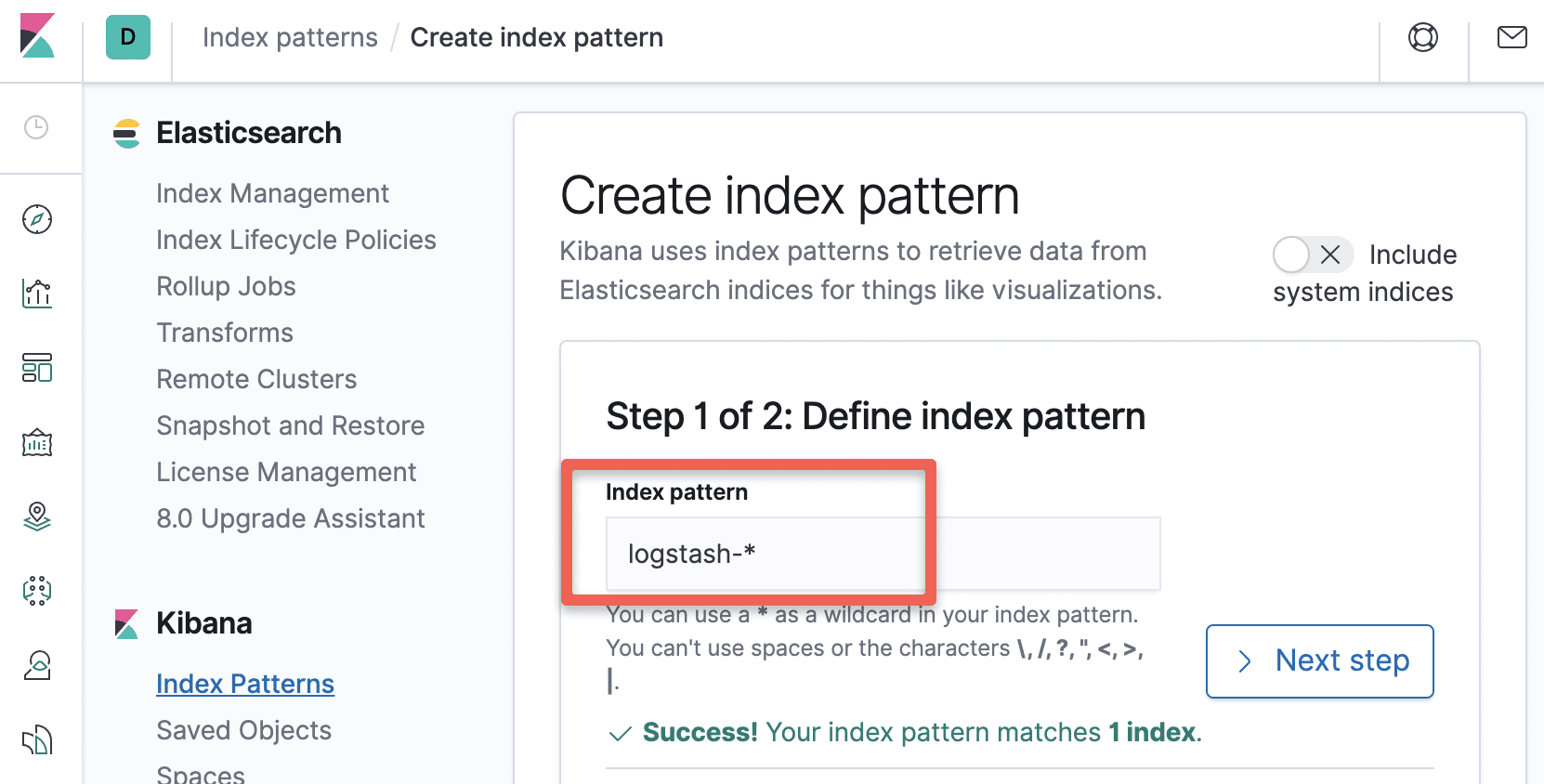

Step 3: Create a new index pattern using the pattern "logstash-*".

Step 4: Select "@timestamp" as the time filter field.

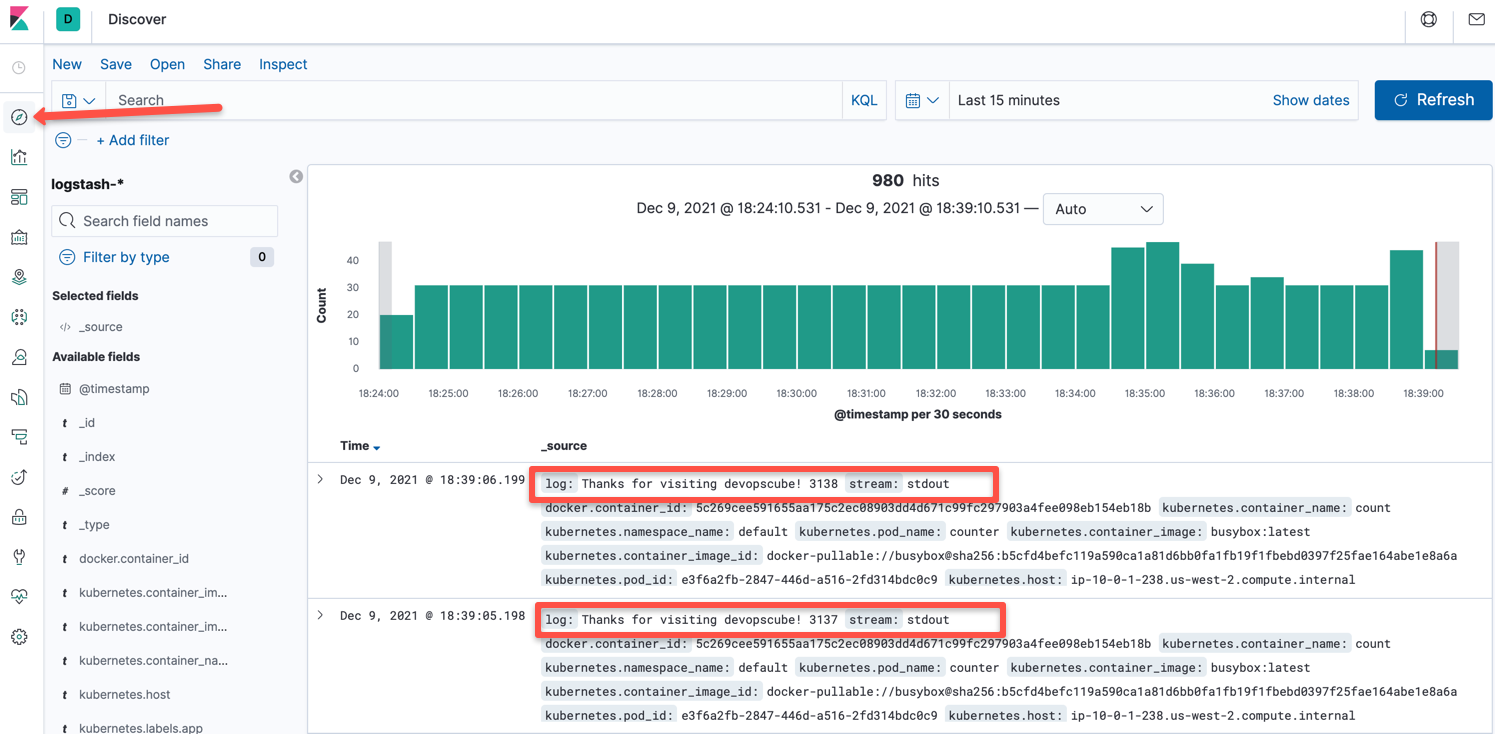

Step 5: With the index pattern created, head to the Discover console. Here, you can see all the logs exported by Fluentd, including the logs from our test pod, as shown in the image.
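You can also confirm the pipeline without the UI by querying Elasticsearch directly. The following Python sketch assumes Elasticsearch is port-forwarded to localhost:9200, that Fluentd writes to the logstash-* indices used for the Kibana index pattern, and that each record carries the pod name in kubernetes.pod_name and the raw line in log (fields added by Fluentd's Kubernetes metadata handling). Those field names are assumptions; check one document in Discover if your records differ. The helper names are hypothetical.

```python
"""Look up the counter pod's logs in Elasticsearch (sketch).

Assumptions: Elasticsearch is port-forwarded to localhost:9200, Fluentd
writes to logstash-* indices, and records have "kubernetes.pod_name" and
"log" fields.
"""
import json
import urllib.request
from typing import List

ES_URL = "http://localhost:9200"  # assumption: kubectl port-forward es-cluster-0 9200:9200


def counter_query(pod_name: str = "counter", size: int = 5) -> dict:
    """Query DSL body: the newest `size` documents from the given pod."""
    return {
        "size": size,
        "sort": [{"@timestamp": {"order": "desc"}}],
        "query": {"match": {"kubernetes.pod_name": pod_name}},
    }


def search(index_pattern: str, body: dict, url: str = ES_URL) -> dict:
    """POST the body to <url>/<index_pattern>/_search and return the parsed JSON."""
    req = urllib.request.Request(
        f"{url}/{index_pattern}/_search",
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(req, timeout=10) as resp:
        return json.load(resp)


def log_lines(result: dict) -> List[str]:
    """Extract the raw log text from each search hit (assumed "log" field)."""
    return [hit["_source"].get("log", "") for hit in result["hits"]["hits"]]
```

For example, printing log_lines(search("logstash-*", counter_query())) should show the most recent "Thanks for visiting devopscube!" lines if Fluentd is shipping logs.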

That's it!

We have covered all the components required for a logging solution in Kubernetes and verified each of them separately. Let's go through some best practices for using the EFK stack.

Kubernetes EFK Best Practices

- Elasticsearch uses heap memory extensively for filtering and caching to improve query performance, so ample memory should be available to it. However, giving more than half of the total memory to the Elasticsearch heap can leave too little memory for the operating system (which Lucene relies on for file system caching), which in turn hampers Elasticsearch's performance. So be mindful of this: allocating 40-50% of the total memory to the Elasticsearch heap is good enough.

- Elasticsearch indices can fill up quickly, so it's important to clean up old indices regularly. Kubernetes CronJobs can automate this.

- Replicating data across multiple nodes helps with disaster recovery and can also improve query performance. By default, Elasticsearch creates one replica of each primary shard (number_of_replicas is 1). Tune this value for your use case; having at least 2 is a good practice.

- Data that is accessed more frequently can be placed on separate nodes with more resources allocated. This can be achieved by running a CronJob that moves indices to different nodes at regular intervals. Though this is an advanced use case, it is good for a beginner to at least know that it can be done.

- In Elasticsearch, you can archive indices to low-cost cloud storage such as AWS S3 and restore them when you need data from those indices. This is a best practice if you need to retain logs for audit and compliance.

- Running dedicated master, data, and client nodes is good for high availability and fault tolerance.
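For the index cleanup practice, the core of such a CronJob is deciding which indices have aged out. Below is a minimal Python sketch of that selection step, assuming Fluentd writes daily indices named logstash-YYYY.MM.DD (the logstash-* pattern used in Kibana) and that index dates are in UTC; the prefix, date format, and function names are assumptions for illustration. The actual deletion would be a DELETE /<index> request per name, left out here so the logic stays testable.

```python
"""Select daily logstash-* indices older than a retention window (sketch).

Assumptions: indices are named logstash-%Y.%m.%d and dated in UTC; a
Kubernetes CronJob would pass today's UTC date and delete the returned names.
"""
from datetime import date, datetime, timedelta
from typing import Iterable, List, Optional

PREFIX = "logstash-"       # assumption: default index prefix
DATE_FORMAT = "%Y.%m.%d"   # assumption: default daily date suffix


def index_date(name: str) -> Optional[date]:
    """Return the date encoded in a daily index name, or None if it doesn't match."""
    if not name.startswith(PREFIX):
        return None
    try:
        return datetime.strptime(name[len(PREFIX):], DATE_FORMAT).date()
    except ValueError:
        return None


def expired_indices(names: Iterable[str], today: date, keep_days: int) -> List[str]:
    """Sorted names of daily indices dated strictly before today - keep_days."""
    cutoff = today - timedelta(days=keep_days)
    expired = []
    for name in names:
        day = index_date(name)
        if day is not None and day < cutoff:
            expired.append(name)
    return sorted(expired)
```

Non-matching names such as Kibana's own system indices are skipped, so the cleanup cannot touch them. Elasticsearch's index lifecycle management (ILM) is an alternative that enforces retention inside the cluster without a CronJob.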

Beyond EFK - Further Research

This guide was just a small use case of setting up the Elastic stack on Kubernetes. Elastic stack has tons of other features which help in logging and monitoring solutions.

For example, it can ship logs from virtual machines and managed services of various cloud providers. You can even ship logs from data engineering tools like Kafka into the Elastic Stack.

The Elastic Stack has other powerful components worth looking into, such as:

- Elastic Metrics: Ships metrics from multiple sources across your entire infrastructure and makes them available in Elasticsearch and Kibana.

- APM: Extends the Elastic Stack's capabilities and lets you analyze exactly where an application is spending time, so you can quickly fix issues in production.

- Uptime: Helps you monitor and analyze availability issues across your apps and services before they start affecting production.

Explore and research them!

Conclusion

In this Kubernetes EFK setup guide, we have learned how to set up logging infrastructure on Kubernetes.

If you want to become a DevOps engineer, it is very important to understand all the concepts involved in Kubernetes logging.

In the next part of this series, we are going to explore Kibana dashboards and visualization options.

In Kibana, it is a good practice to visualize data through graphs wherever possible, as graphs give a much clearer picture of your application state. So don't forget to check out the next part of this series.

Till then, keep learning and exploring.