In this Kubernetes tutorial, I cover a step-by-step guide to setting up a Kubernetes cluster on Vagrant. It is a multi-node Kubernetes setup using kubeadm.

Vagrant is a great utility for setting up virtual machines on your local workstation. I use Vagrant for most of my testing and learning. If you are new to Vagrant, see my beginner's Vagrant guide.

This guide primarily focuses on the Kubernetes automated setup using Vagrantfile and shell scripts.

Automated Kubernetes Cluster Setup on Vagrant

I have written a basic Vagrantfile and scripts so that anyone can understand and make changes as per their requirements.

Here is the summary of the setup.

- A single vagrant up command creates three VMs and configures all essential Kubernetes components using kubeadm.

- Calico Network Plugin, Metrics server, and Kubernetes dashboard get installed as part of the setup.

- The kubeconfig file gets added to all the nodes in the cluster so that you can execute kubectl commands from any node.

- The kubeconfig file and the kubernetes dashboard access token get added to the configs folder where you have the Vagrantfile. You can use the kubeconfig file to connect the cluster from your workstation.

- You can shut down the VMs when not in use and start them again whenever needed. All the cluster configurations remain intact without any issues. The nodes get connected automatically to the master during the startup.

- You can delete all the VMs with one command and recreate the setup with a vagrant up command any time you need.

Here is a high-level overview of the setup.

CKA/CKAD/CKS Certification Practice Environment

If you are preparing for any of the Kubernetes certifications, you need a cluster to practice all the exam scenarios.

You can use these Vagrant scripts to set up your local practice environment.

Specifically, for CKA Certification, you can expect Kubeadm-related exam questions like bootstrapping and upgrading the kubernetes cluster using kubeadm. You can check out the following guides.

The setup script deploys the latest version of kubernetes that is required for Kubernetes certification exams.

Kubernetes-Kubeadm Vagrant Github Repository

The Kubeadm Vagrantfile and scripts are hosted on the Vagrant Kubernetes Github repository.

Clone the repository to follow along with the guide.

git clone https://github.com/techiescamp/vagrant-kubeadm-kubernetes

Prerequisite For MAC Users

If you have upgraded your Mac to macOS Monterey, you might face issues with Vagrant when creating private networks. Vagrant won't be able to create a network outside the 192.168.56.0/21 range that VirtualBox allows for host-only networks by default.

To resolve the issue, open /etc/vbox/networks.conf and add the following.

* 0.0.0.0/0 ::/0

Setup Kubernetes Cluster on Vagrant

Follow the steps given below to spin up the cluster and validate all the Kubernetes cluster configurations.

Step 1: To create the cluster, first cd into the cloned directory.

cd vagrant-kubeadm-kubernetes

Step 2: Execute the vagrant up command. It spins up three nodes: one control plane (master) and two worker nodes. Kubernetes installation and configuration happen through the shell scripts in the scripts folder.

vagrant up

Step 3: Log in to the master node to verify the cluster configurations.

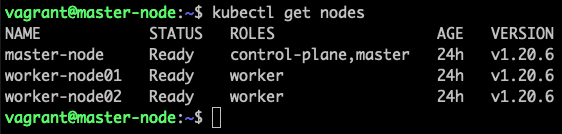

vagrant ssh controlplane

Step 4: List all the cluster nodes to ensure the worker nodes are connected to the master and in a ready state.

kubectl top nodes

You should see the output as shown below.

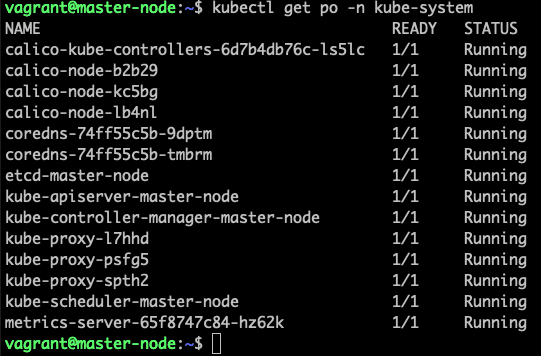

Step 5: List all the pods in the kube-system namespace and ensure they are in a running state.

kubectl get po -n kube-system

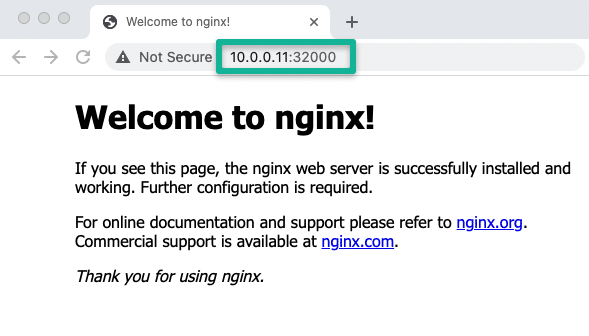

Step 6: Deploy a sample Nginx app and see if you can access it over the nodePort.

kubectl apply -f https://raw.githubusercontent.com/scriptcamp/kubeadm-scripts/main/manifests/sample-app.yaml

You should be able to access Nginx on any of the node IPs on port 32000. For example, http://10.0.0.11:32000
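If you prefer to check the NodePort from a terminal instead of a browser, a small helper like the one below can probe each node. This is only a sketch: the function name check_nodeport is made up for illustration, and the IPs in the usage note assume the default addresses from the Vagrantfile in this guide.

```shell
# Hypothetical helper: probe a NodePort on each of the given node IPs.
# Usage: check_nodeport <port> <ip> [<ip> ...]
check_nodeport() {
  local port="$1"
  shift
  local ip
  for ip in "$@"; do
    # --fail makes curl return non-zero on HTTP errors; --max-time avoids hanging
    if curl --silent --fail --max-time 5 --output /dev/null "http://${ip}:${port}"; then
      echo "${ip}:${port} reachable"
    else
      echo "${ip}:${port} NOT reachable"
    fi
  done
}
```

For example, run check_nodeport 32000 10.0.0.10 10.0.0.11 10.0.0.12 from your workstation once the sample app is deployed.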

That's it! You can start deploying and testing other applications.

To shut down the Kubernetes VMs, execute the halt command.

vagrant halt

Whenever you need the cluster, just execute the following.

vagrant up

To destroy the VMs, execute the following.

vagrant destroy

Access Kubernetes Cluster From Workstation Terminal

Once Vagrant execution is successful, you will see a configs folder with a few files (config, join.sh, and token) inside the cloned repo. These are generated during the run time.

Copy the config file to your $HOME/.kube folder if you want to interact with the cluster from your workstation terminal. You should have kubectl installed on your workstation.

For example, I did the following on my Mac keeping vagrant-kubeadm-kubernetes folder as the current directory.

mkdir -p $HOME/.kube

cp configs/config $HOME/.kube

Alternatively, you can set the KUBECONFIG environment variable as shown below. Make sure you execute the command from the vagrant-kubeadm-kubernetes folder where you have the Vagrantfile.

export KUBECONFIG=$(pwd)/configs/config

Once the kubeconfig (config) file is in your local $HOME/.kube directory, or KUBECONFIG points to it, you can run kubectl commands against the cluster.
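Note that copying the file into $HOME/.kube replaces any kubeconfig you already have there. If that is a concern, a helper along these lines keeps a backup first. It is a minimal sketch, not part of the repository; the name install_kubeconfig and the .bak suffix are my own choices for illustration.

```shell
# Hypothetical helper: copy a kubeconfig into place, backing up any existing one.
# Usage: install_kubeconfig <source-file> [<target-dir>]
install_kubeconfig() {
  local src="$1"
  local dest_dir="${2:-$HOME/.kube}"
  local dest="${dest_dir}/config"

  if [ ! -f "$src" ]; then
    echo "kubeconfig not found: $src" >&2
    return 1
  fi

  mkdir -p "$dest_dir"
  # Keep the previous config so it can be restored later
  if [ -f "$dest" ]; then
    cp "$dest" "${dest}.bak"
  fi
  cp "$src" "$dest"
  # kubeconfig holds credentials, so restrict it to the current user
  chmod 600 "$dest"
}
```

From the vagrant-kubeadm-kubernetes folder, you would call it as install_kubeconfig configs/config.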

Verify the config by listing the cluster nodes.

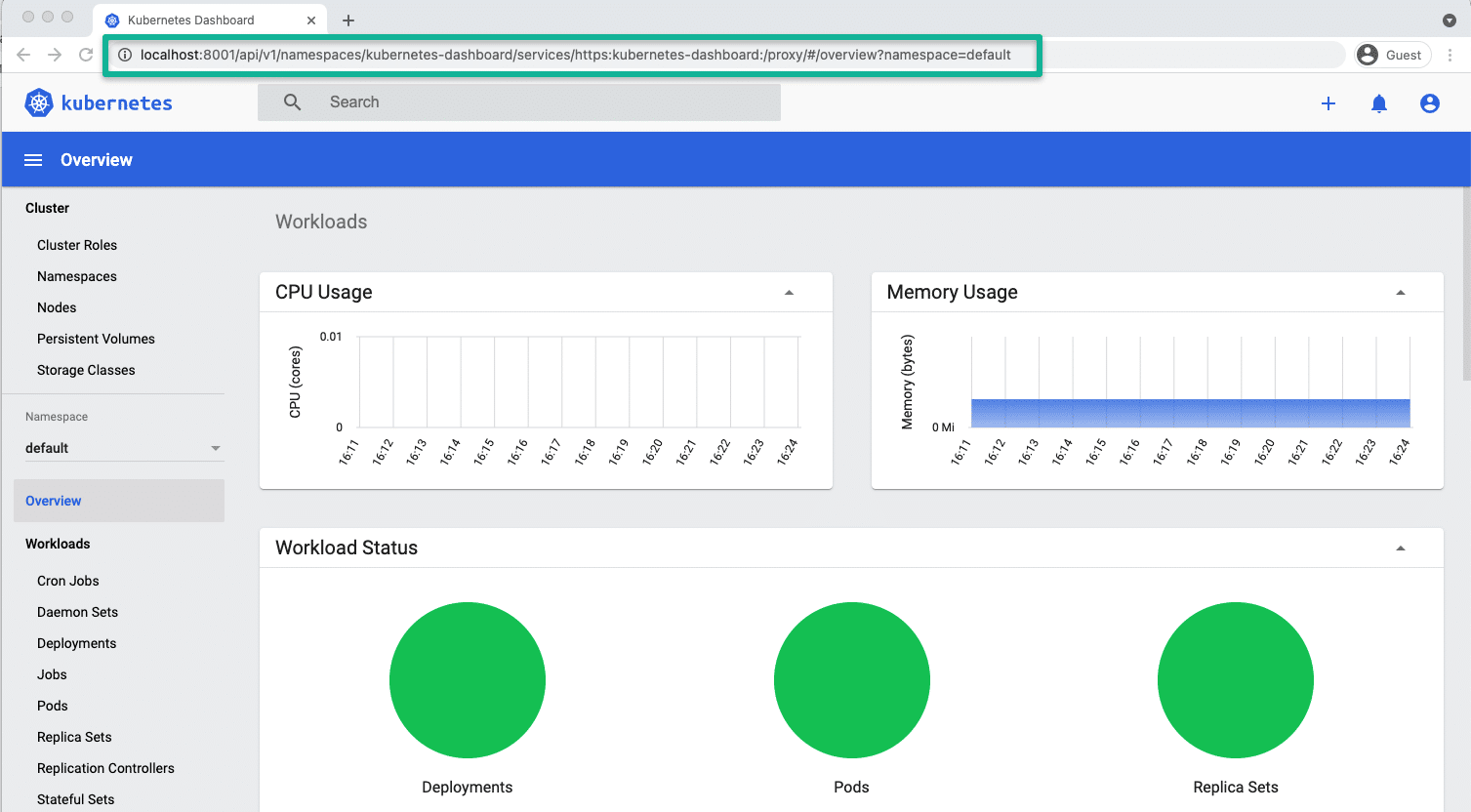

kubectl get nodes

To access the Kubernetes dashboard, run kubectl proxy.

kubectl proxy

The token file inside the configs folder contains the sign-in token for the Kubernetes dashboard. To use the dashboard, log in with the token at the following URL.

http://localhost:8001/api/v1/namespaces/kubernetes-dashboard/services/https:kubernetes-dashboard:/proxy/#/login

Kubeadm Vagrantfile & Scripts Explanation

Here is the file tree for the Vagrant repo.

├── Vagrantfile
├── configs
│   ├── config
│   ├── join.sh
│   └── token
└── scripts
    ├── common.sh
    ├── master.sh
    └── node.sh

The configs folder and files get generated only after the first run.

As I explained earlier, the configs folder contains the config, token, and join.sh files.

In the previous section, I have already explained config and token. The join.sh file has the worker node join command with the token created during kubeadm master node initialization.

Since all the nodes share the folder containing the Vagrantfile, the worker nodes can read the join.sh file and join the master automatically during the first run. It is a one-time task.
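To make the join mechanism concrete, here is a rough sketch of what a worker-side step could look like. It is not the actual node.sh from the repository. The function name wait_and_join, the timeout, and the defensive wait loop are assumptions for illustration; only the /vagrant/configs/join.sh location follows from the shared folder layout described here.

```shell
# Hypothetical sketch: wait for the join script in the shared folder, then run it.
# Usage: wait_and_join [<join-script>] [<timeout-seconds>]
wait_and_join() {
  local join_script="${1:-/vagrant/configs/join.sh}"
  local timeout="${2:-300}"
  local waited=0

  # The master writes join.sh during its own provisioning, so it should
  # normally exist already; the wait only guards against a slow shared folder.
  until [ -f "$join_script" ]; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $join_script" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done

  # join.sh contains the kubeadm join command generated on the master
  /bin/bash "$join_script"
}
```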

If you log in to any node and open the /vagrant folder, you will see the Vagrantfile and scripts, because the folder is shared between the VMs.

Let's have a look at the Vagrantfile.

NUM_WORKER_NODES=2   # number of worker VMs to create
IP_NW="10.0.0."      # network prefix shared by all nodes
IP_START=10          # last octet of the master IP; workers follow it

Vagrant.configure("2") do |config|
  # Runs on every VM: refresh apt and add all node names to /etc/hosts
  config.vm.provision "shell", env: {"IP_NW" => IP_NW, "IP_START" => IP_START}, inline: <<-SHELL
      apt-get update -y
      echo "$IP_NW$((IP_START)) master-node" >> /etc/hosts
      echo "$IP_NW$((IP_START+1)) worker-node01" >> /etc/hosts
      echo "$IP_NW$((IP_START+2)) worker-node02" >> /etc/hosts
  SHELL

  config.vm.box = "bento/ubuntu-22.04"
  config.vm.box_check_update = true

  config.vm.define "master" do |master|
    # master.vm.box = "bento/ubuntu-18.04"
    master.vm.hostname = "master-node"
    master.vm.network "private_network", ip: IP_NW + "#{IP_START}"
    master.vm.provider "virtualbox" do |vb|
        vb.memory = 4048
        vb.cpus = 2
    end
    # common.sh runs on every node; master.sh initializes the control plane
    master.vm.provision "shell", path: "scripts/common.sh"
    master.vm.provision "shell", path: "scripts/master.sh"
  end

  (1..NUM_WORKER_NODES).each do |i|
    config.vm.define "node0#{i}" do |node|
      node.vm.hostname = "worker-node0#{i}"
      node.vm.network "private_network", ip: IP_NW + "#{IP_START + i}"
      node.vm.provider "virtualbox" do |vb|
          vb.memory = 2048
          vb.cpus = 1
      end
      # node.sh joins the worker to the cluster
      node.vm.provision "shell", path: "scripts/common.sh"
      node.vm.provision "shell", path: "scripts/node.sh"
    end
  end
end

As you can see, I have assigned the following IPs to the nodes. A common shell block that runs on all the VMs adds each IP and its hostname to the hosts file of every node.

- 10.0.0.10 (master)

- 10.0.0.11 (node01)

- 10.0.0.12 (node02)
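Each address is the IP_NW prefix plus an offset from IP_START, which is the same arithmetic the inline shell block uses. The sketch below generates the hosts entries for any number of workers using that scheme. The function name hosts_entries is hypothetical and is not part of the repository scripts.

```shell
# Hypothetical helper: print /etc/hosts entries for the master and N workers.
# Usage: hosts_entries <ip-prefix> <start-octet> <worker-count>
hosts_entries() {
  local ip_nw="$1"
  local ip_start="$2"
  local workers="$3"
  local i=1

  # The master takes the first address in the range
  echo "${ip_nw}${ip_start} master-node"

  # Worker i gets start + i, matching IP_START + i in the Vagrantfile loop
  while [ "$i" -le "$workers" ]; do
    echo "${ip_nw}$((ip_start + i)) worker-node0${i}"
    i=$((i + 1))
  done
}
```

Calling hosts_entries 10.0.0. 10 2 prints the master entry followed by worker-node01 at 10.0.0.11 and worker-node02 at 10.0.0.12.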

Also, the worker node block is in a loop. So if you want more than two worker nodes, or only one, replace 2 with the desired number in the NUM_WORKER_NODES variable. If you add more nodes, ensure you also add their IPs to the hosts file entries.

For example, for 3 worker nodes, you need to have,

NUM_WORKER_NODES=3

master.sh, node.sh and common.sh Scripts

The three shell scripts get called as provisioners during the Vagrant run to configure the cluster.

- common.sh: A self-explanatory list of commands that installs and configures a specific version of the cri-o runtime, kubeadm, kubectl, and kubelet on all the nodes. It also disables swap.

- master.sh: Contains commands to initialize the master and install the Calico plugin, metrics server, and Kubernetes dashboard. It also copies the kubeconfig, join.sh, and token files to the configs directory.

- node.sh: Reads the join command from join.sh in the shared configs folder and joins the master node. It also copies the kubeconfig file to /home/vagrant/.kube so that you can execute kubectl commands.

common.sh installs Kubernetes version 1.20.6-00 so that you have the same cluster version for CKA, CKAD, and CKS preparation. If you would like the latest version, remove the version number from the install command.
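To illustrate the idea of pinning versus not pinning, here is a small sketch that builds the package arguments either way. It assumes the packages are installed with apt-get using the package=version syntax, which fits the Ubuntu box used here but may not match common.sh line for line. The function name apt_package_specs is hypothetical.

```shell
# Hypothetical helper: print apt package specs, pinned when a version is given.
# Usage: apt_package_specs <version-or-empty> <package> [<package> ...]
apt_package_specs() {
  local version="$1"
  shift
  local pkg
  for pkg in "$@"; do
    if [ -n "$version" ]; then
      # apt accepts package=version to install an exact version
      printf '%s=%s\n' "$pkg" "$version"
    else
      # No version given: let apt pick the latest available
      printf '%s\n' "$pkg"
    fi
  done
}
```

For example, sudo apt-get install -y $(apt_package_specs 1.20.6-00 kubelet kubeadm kubectl) would pin all three packages, while passing an empty string as the first argument installs the latest versions.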

Video Documentation For Vagrant Setup

I have documented the whole process in a YouTube video. Check out the video if you want to see the live setup.

Conclusion

It is good to have a local Kubernetes cluster setup that you can spin up and tear down whenever you need without spending much time.

To set up the Kubernetes cluster on Vagrant, all you have to do is clone the repo and run the vagrant up command.

Moreover, if you are a DevOps engineer and work on the Kubernetes cluster, you can have a production-like setup locally for development and testing.

If you want to have a simple single-node Kubernetes setup, you can try minikube. Here is a minikube tutorial for beginners.

You can add more tools and utilities like Helm, an ingress controller, Prometheus, etc. to the existing scripts and customize them as per your requirements.

Please feel free to contribute to the repo with enhancements!