In this blog, we will look at the important Kubernetes configurations every DevOps engineer or Kubernetes administrator should know.

Toward the end of the guide, I added the configurations involved in a real-world Kubernetes cluster setup.

Note: All the config locations referred to in this guide are based on the default Kubernetes installation using kubeadm.

Kubernetes Cluster Configurations

Whether you are preparing for Kubernetes certifications or plan to work on Kubernetes projects, it is very important to know the key configurations of a Kubernetes cluster.

Also, when it comes to CKA certification, you will get scenarios to rectify issues in the cluster. So understanding the cluster configurations will make it easier for you to troubleshoot and find issues in the cluster the right way.

Let's start with the configurations related to control plane components.

Static Pod Manifests

As we discussed in the Kubernetes architecture, all the control plane components are started by the kubelet from the static pod manifests present in the /etc/kubernetes/manifests directory. The kubelet manages the lifecycle of all the pods created from the static pod manifests.

The following components are deployed from the static pod manifests.

- etcd

- API server

- Kube controller manager

- Kube scheduler

manifests
├── etcd.yaml
├── kube-apiserver.yaml
├── kube-controller-manager.yaml
└── kube-scheduler.yaml

You can get all the configuration locations of these components from these pod manifests.
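As a quick sanity check on a control plane node, the directory listing above can be verified with a small shell helper. This is only a sketch: `check_static_manifests` is a hypothetical function name, and the default path assumes the kubeadm layout described in this guide.

```shell
# Report which of the four kubeadm control plane manifests exist in a directory.
# Usage: check_static_manifests [manifest-dir]
check_static_manifests() {
  local dir="${1:-/etc/kubernetes/manifests}"   # kubeadm default (assumption)
  local name
  for name in etcd kube-apiserver kube-controller-manager kube-scheduler; do
    if [ -f "$dir/$name.yaml" ]; then
      echo "found   $name.yaml"
    else
      echo "missing $name.yaml"
    fi
  done
}
```

Calling `check_static_manifests` without arguments checks the default directory. A missing file usually means the node is not a control plane node or the cluster was not installed with kubeadm.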

API Server Configurations

If you look at the kube-apiserver.yaml, under the container spec you can see all the parameters that point to TLS certs and other required parameters for the API server to work and communicate with other cluster components.

apiVersion: v1
kind: Pod
metadata:
  annotations:
    kubeadm.kubernetes.io/kube-apiserver.advertise-address.endpoint: 172.31.42.106:6443
  creationTimestamp: null
  labels:
    component: kube-apiserver
    tier: control-plane
  name: kube-apiserver
  namespace: kube-system
spec:
  containers:
  - command:
    - kube-apiserver
    - --advertise-address=172.31.42.106
    - --allow-privileged=true
    - --authorization-mode=Node,RBAC
    - --client-ca-file=/etc/kubernetes/pki/ca.crt
    - --enable-admission-plugins=NodeRestriction
    - --enable-bootstrap-token-auth=true
    - --etcd-cafile=/etc/kubernetes/pki/etcd/ca.crt
    - --etcd-certfile=/etc/kubernetes/pki/apiserver-etcd-client.crt
    - --etcd-keyfile=/etc/kubernetes/pki/apiserver-etcd-client.key
    - --etcd-servers=https://127.0.0.1:2379
    - --kubelet-client-certificate=/etc/kubernetes/pki/apiserver-kubelet-client.crt
    - --kubelet-client-key=/etc/kubernetes/pki/apiserver-kubelet-client.key
    - --kubelet-preferred-address-types=InternalIP,ExternalIP,Hostname
    - --proxy-client-cert-file=/etc/kubernetes/pki/front-proxy-client.crt
    - --proxy-client-key-file=/etc/kubernetes/pki/front-proxy-client.key
    - --requestheader-allowed-names=front-proxy-client
    - --requestheader-client-ca-file=/etc/kubernetes/pki/front-proxy-ca.crt
    - --requestheader-extra-headers-prefix=X-Remote-Extra-
    - --requestheader-group-headers=X-Remote-Group
    - --requestheader-username-headers=X-Remote-User
    - --secure-port=6443
    - --service-account-issuer=https://kubernetes.default.svc.cluster.local
    - --service-account-key-file=/etc/kubernetes/pki/sa.pub
    - --service-account-signing-key-file=/etc/kubernetes/pki/sa.key
    - --service-cluster-ip-range=10.96.0.0/12
    - --tls-cert-file=/etc/kubernetes/pki/apiserver.crt
    - --tls-private-key-file=/etc/kubernetes/pki/apiserver.key
    image: registry.k8s.io/kube-apiserver:v1.26.3

So if you want to troubleshoot or verify the cluster component configurations, the static pod manifests are the first place to look.
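When troubleshooting, it can help to pull just the flags out of a manifest instead of reading the whole file. The following is a minimal sketch that assumes the flags are written as YAML list items of the form `- --name=value`, as in the manifest above. The function names `manifest_flags` and `manifest_pki_flags` are hypothetical helpers, not part of any Kubernetes tool.

```shell
# Print the command-line flags declared in a static pod manifest, one per line.
# Usage: manifest_flags [path-to-manifest]
manifest_flags() {
  local manifest="${1:-/etc/kubernetes/manifests/kube-apiserver.yaml}"
  # Keep only list items that start with "--" and strip the leading "- ".
  grep -E '^[[:space:]]*-[[:space:]]+--' "$manifest" \
    | sed -E 's/^[[:space:]]*-[[:space:]]+//'
}

# Print only the flags that point at files under the kubeadm PKI directory.
# Usage: manifest_pki_flags [path-to-manifest]
manifest_pki_flags() {
  manifest_flags "$1" | grep '/etc/kubernetes/pki/'
}
```

Running `manifest_pki_flags` on the API server manifest gives a short list of every certificate and key the API server depends on, which is a useful starting point when a TLS error shows up in the logs.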

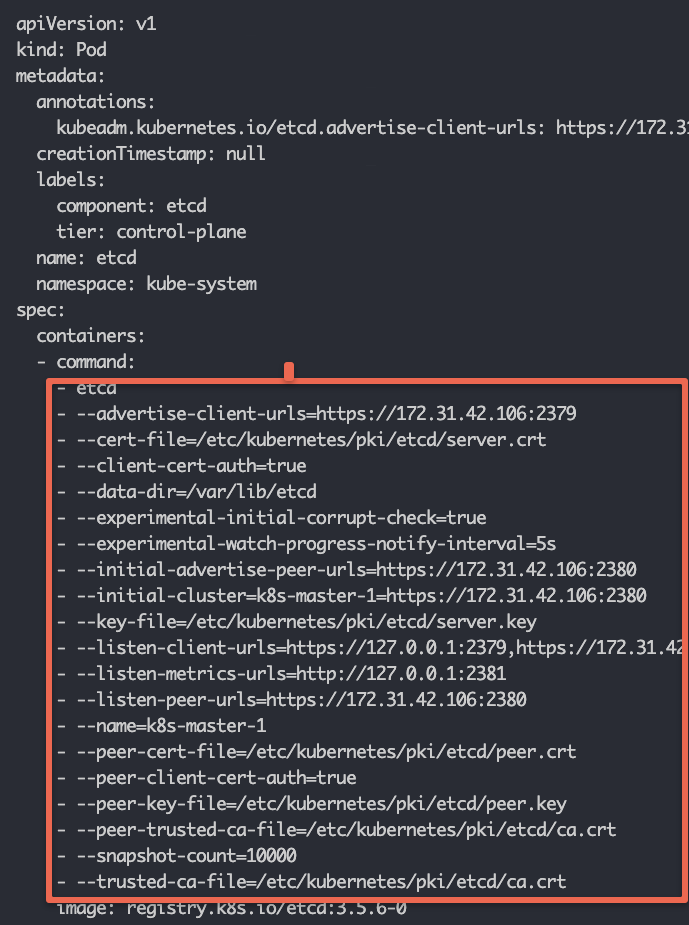

ETCD Configurations

If you want to interact with the etcd component, you can use the details from the static pod YAML.

For example, if you want to back up etcd, you need to know the etcd service endpoint and the related certificates to authenticate against etcd and create a backup.

If you open the etcd.yaml manifest you can view all the etcd-related configurations as shown below.

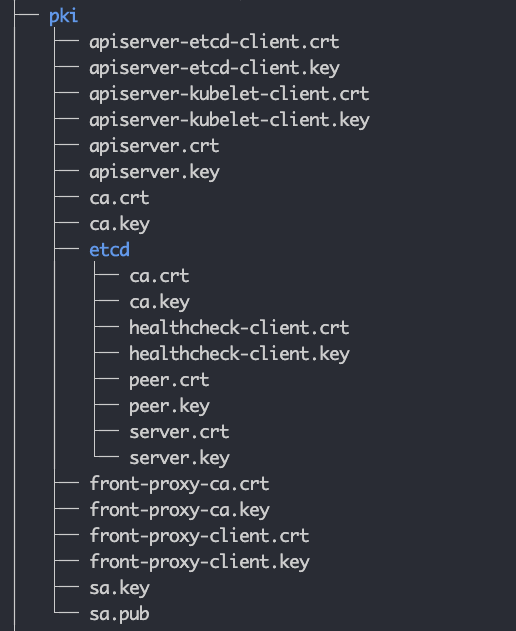
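To illustrate how the manifest feeds an etcd backup, here is a hedged sketch that reads the certificate flags from etcd.yaml and prints the matching `etcdctl snapshot save` command. It assumes the manifest uses the `--trusted-ca-file`, `--cert-file`, and `--key-file` flags and that etcd listens on https://127.0.0.1:2379, as in the API server manifest earlier. The helper names and the default backup path are hypothetical.

```shell
# Read the value of a "--name=value" flag from a static pod manifest.
# $1 = flag name without the leading dashes, $2 = manifest path
manifest_flag_value() {
  sed -n -E "s/^[[:space:]]*-[[:space:]]+--$1=(.*)\$/\1/p" "$2"
}

# Print (do not run) an etcdctl snapshot command built from the etcd manifest.
# Usage: etcd_snapshot_cmd [path-to-etcd.yaml] [snapshot-file]
etcd_snapshot_cmd() {
  local manifest="${1:-/etc/kubernetes/manifests/etcd.yaml}"
  local out="${2:-/var/backups/etcd-snapshot.db}"   # hypothetical backup path
  local cacert cert key
  cacert="$(manifest_flag_value trusted-ca-file "$manifest")"
  cert="$(manifest_flag_value cert-file "$manifest")"
  key="$(manifest_flag_value key-file "$manifest")"
  echo "ETCDCTL_API=3 etcdctl --endpoints=https://127.0.0.1:2379" \
       "--cacert=$cacert --cert=$cert --key=$key snapshot save $out"
}
```

The function only prints the command so that you can review the paths before running it on the control plane node.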

TLS Certificates

In Kubernetes, all the components talk to each other over mTLS. Under the PKI folder (/etc/kubernetes/pki), you will find all the TLS certificates and keys. Kubernetes control plane components use these certificates to authenticate and communicate with each other.

Also, there is an etcd subdirectory that contains the etcd-specific certificates and private keys. These are used to secure communication between etcd nodes and between the API server and etcd nodes.

The following image shows the file structure of the PKI folder.

The static pod manifests refer to the required TLS certificates and keys from this folder.

When you work on a self-hosted cluster using tools like kubeadm, these certificates are automatically generated by the tool. In managed Kubernetes clusters, the cloud provider takes care of all the TLS requirements, as it is their responsibility to manage the control plane components.

However, if you are setting up a self-hosted cluster for production use, these certificates typically have to be requested from the organization's network or security team. They generate the certificates, signed by the organization's internal certificate authority, and provide them to you.
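Whichever way the certificates are issued, it is worth checking when they expire. On kubeadm clusters, `kubeadm certs check-expiration` reports this directly. As a tool-independent sketch, the helper below walks a PKI directory and prints the expiry date of each certificate using `openssl`. The function name `cert_expiry` is hypothetical, and the default path assumes the kubeadm layout.

```shell
# Print the expiry date of every certificate under a PKI directory.
# Usage: cert_expiry [pki-dir]
cert_expiry() {
  local dir="${1:-/etc/kubernetes/pki}"   # kubeadm default (assumption)
  find "$dir" -type f -name '*.crt' | sort | while read -r crt; do
    # "openssl x509 -enddate" prints a line of the form notAfter=<date>
    echo "$crt: $(openssl x509 -in "$crt" -noout -enddate)"
  done
}
```

Because the search is recursive, the certificates in the etcd subdirectory are included in the output as well.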

Kubeconfig Files

Any components that need to authenticate to the API server need the kubeconfig file.

All the cluster kubeconfig files are present in the /etc/kubernetes folder as .conf files. You will find the following files.

- admin.conf

- controller-manager.conf

- kubelet.conf

- scheduler.conf

Each file contains the API server endpoint, the cluster CA certificate, the client certificate, and other information.

The admin.conf file is the admin kubeconfig that end users use to access the API server and manage the cluster. You can use this file to connect to the cluster from a remote workstation.

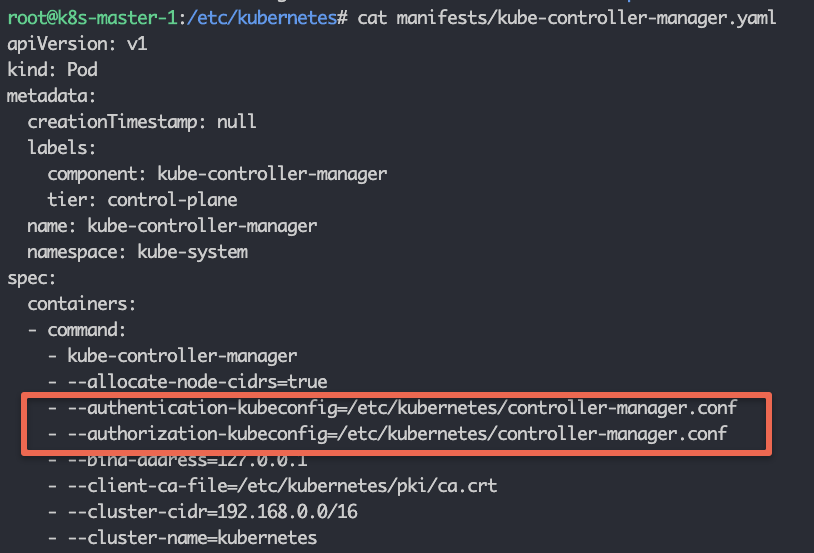

The kubeconfig files for the controller manager, scheduler, and kubelet are used for API server authentication and authorization.

For example, if you check the controller manager static pod manifest file, you can see controller-manager.conf passed to the --kubeconfig, --authentication-kubeconfig, and --authorization-kubeconfig flags.
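To see which API server endpoint each component is configured to talk to, you can read the `server:` field from each kubeconfig. This is a minimal sketch using plain text matching rather than a YAML parser. The helper names `kubeconfig_server` and `list_kubeconfigs` are hypothetical.

```shell
# Print the API server endpoint recorded in a kubeconfig file.
# Usage: kubeconfig_server <kubeconfig-path>
kubeconfig_server() {
  awk '$1 == "server:" { print $2 }' "$1"
}

# Print the endpoint for every kubeconfig (*.conf) in a directory.
# Usage: list_kubeconfigs [dir]
list_kubeconfigs() {
  local dir="${1:-/etc/kubernetes}"
  local conf
  for conf in "$dir"/*.conf; do
    [ -f "$conf" ] || continue
    echo "$(basename "$conf") -> $(kubeconfig_server "$conf")"
  done
}
```

If kubectl is installed, the same file can be used directly, for example `kubectl --kubeconfig /etc/kubernetes/admin.conf get nodes`.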

Kubelet Configurations

The kubelet runs as a systemd service on all the cluster nodes.

You can view the kubelet systemd drop-in configuration under the /etc/systemd/system/kubelet.service.d directory.

Here are the systemd file contents.

[Service]
Environment="KUBELET_KUBECONFIG_ARGS=--bootstrap-kubeconfig=/etc/kubernetes/bootstrap-kubelet.conf --kubeconfig=/etc/kubernetes/kubelet.conf"
Environment="KUBELET_CONFIG_ARGS=--config=/var/lib/kubelet/config.yaml"
EnvironmentFile=-/var/lib/kubelet/kubeadm-flags.env
EnvironmentFile=-/etc/default/kubelet
ExecStart=
ExecStart=/usr/bin/kubelet $KUBELET_KUBECONFIG_ARGS $KUBELET_CONFIG_ARGS $KUBELET_KUBEADM_ARGS $KUBELET_EXTRA_ARGS

The important kubelet configurations in this file are:

- kubelet kubeconfig file: /etc/kubernetes/kubelet.conf

- kubelet config file: /var/lib/kubelet/config.yaml

- kubeadm flags environment file: /var/lib/kubelet/kubeadm-flags.env

The kubeconfig file will be used for API server authentication and authorization.

The /var/lib/kubelet/config.yaml contains all the kubelet-related configurations. The static pod manifest location is added as part of the staticPodPath parameter.

staticPodPath: /etc/kubernetes/manifests

The /var/lib/kubelet/kubeadm-flags.env file contains the container runtime's Unix socket endpoint and the infra container (pause container) image.

For example, here is the kubelet config that is using the CRI-O container runtime, as indicated by the Unix socket and the pause container image.

KUBELET_KUBEADM_ARGS="--container-runtime-endpoint=unix:///var/run/crio/crio.sock --pod-infra-container-image=registry.k8s.io/pa

A pause container is a minimal container that is the first to be started within a Kubernetes Pod. Its role is to hold the network namespace and other shared resources for all the other containers in the same Pod.
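To confirm these two settings on a node without opening the files by hand, a sketch like the following can help. It assumes the kubeadm file locations described above and that the runtime endpoint is set through the `--container-runtime-endpoint` flag in kubeadm-flags.env. The function names are hypothetical.

```shell
# Print the static pod directory configured in the kubelet config file.
# Usage: kubelet_static_pod_path [config.yaml]
kubelet_static_pod_path() {
  local config="${1:-/var/lib/kubelet/config.yaml}"
  awk '$1 == "staticPodPath:" { print $2 }' "$config"
}

# Print the container runtime socket configured in kubeadm-flags.env.
# Usage: kubelet_runtime_endpoint [kubeadm-flags.env]
kubelet_runtime_endpoint() {
  local env_file="${1:-/var/lib/kubelet/kubeadm-flags.env}"
  # Match the flag up to the next space or quote, then keep the part after "=".
  grep -o -e '--container-runtime-endpoint=[^ "]*' "$env_file" | cut -d= -f2-
}
```

If `kubelet_runtime_endpoint` prints nothing, the endpoint is probably configured somewhere else on that node, for example in the kubelet config file or directly in the service file.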

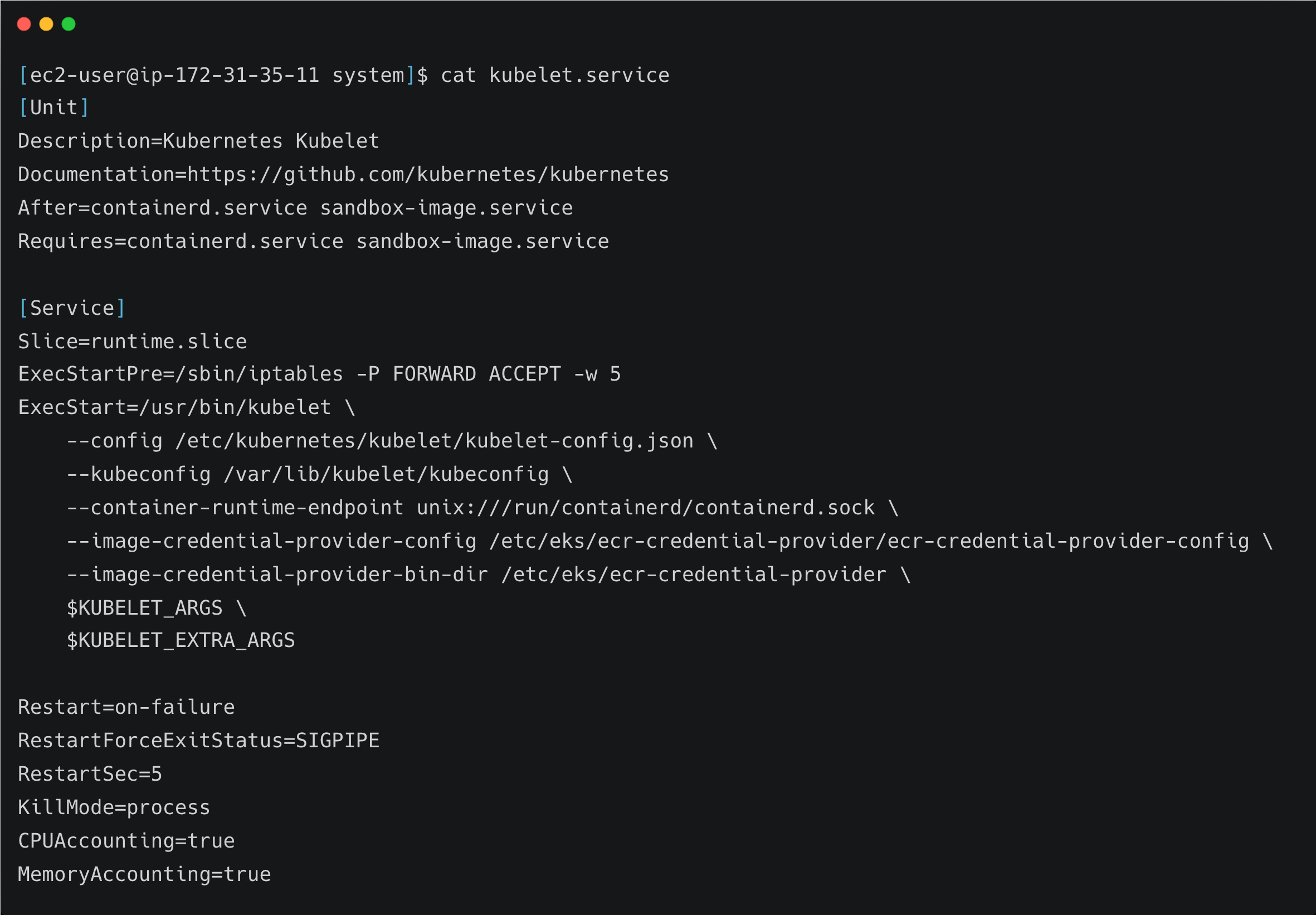

If you look at the kubelet configuration in a managed Kubernetes cluster, it looks a little different from the kubeadm setup.

For example, here is a kubelet service file for an AWS EKS cluster.

Here you can see the container runtime is containerd, and its Unix socket flag is added directly to the service file.

The kubelet kubeconfig file is also in a different directory compared to the kubeadm setup.

CoreDNS Configurations

CoreDNS addon components deal with the cluster DNS configurations.

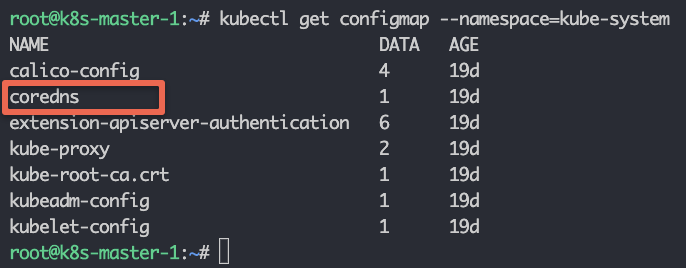

All the CoreDNS configurations are part of a ConfigMap named coredns in the kube-system namespace.

If you list the ConfigMaps in the kube-system namespace, you can see the coredns ConfigMap.

kubectl get configmap --namespace=kube-system

Use the following command to open the CoreDNS ConfigMap in an editor and view its contents.

kubectl edit configmap coredns --namespace=kube-system

You will see the following contents.

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }

When it comes to DNS connectivity, applications may need to connect to:

- Internal services using Kubernetes service endpoints.

- Publicly available services using public DNS endpoints.

- Services hosted in on-premise environments using private DNS endpoints (in hybrid cloud environments).

If you have a use case that needs custom DNS servers, for example, applications in the cluster that need to connect to private DNS endpoints in the on-premise data center, you can add the custom DNS server to the CoreDNS ConfigMap.

For example, let's say the custom DNS server IP is 10.45.45.34 and your DNS suffix is dns-onprem.com. We have to add a block as shown below so that all the DNS requests for that domain are forwarded to the 10.45.45.34 DNS server.

dns-onprem.com:53 {
    errors
    cache 30
    forward . 10.45.45.34
}

Here is the full ConfigMap configuration with the custom block added at the end.

apiVersion: v1
data:
  Corefile: |
    .:53 {
        errors
        health {
            lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
            pods insecure
            fallthrough in-addr.arpa ip6.arpa
            ttl 30
        }
        prometheus :9153
        forward . /etc/resolv.conf {
            max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
    dns-onprem.com:53 {
        errors
        cache 30
        forward . 10.45.45.34
    }

Audit Logging Configuration

When it comes to production clusters, audit logging is a must-have feature.

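Audit logging is enabled in the kube-apiserver.yaml static pod manifest.

The command arguments should include the following two parameters. The file paths are arbitrary and are chosen by the cluster administrator. Because the API server runs as a static pod, both paths also have to be mounted into the pod as hostPath volumes.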

--audit-policy-file=/etc/kubernetes/audit/audit-policy.yaml

--audit-log-path=/var/log/kubernetes/audit.log

The audit-policy.yaml file contains all the audit policies, and the audit.log file contains the audit logs generated by Kubernetes.
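To give an idea of what the policy file looks like, the sketch below writes a minimal audit policy that logs request metadata for every request. This is only a starting point, not a recommended production policy. The function name `write_audit_policy` is hypothetical, and the default path matches the flag shown above.

```shell
# Write a minimal audit policy that records metadata for all requests.
# Usage: write_audit_policy [destination-file]
write_audit_policy() {
  local dest="${1:-/etc/kubernetes/audit/audit-policy.yaml}"
  mkdir -p "$(dirname "$dest")"
  cat > "$dest" <<'EOF'
apiVersion: audit.k8s.io/v1
kind: Policy
rules:
  # Log who did what and when, without request or response bodies.
  - level: Metadata
EOF
}
```

After the policy file is in place and the flags and volume mounts are added to the API server manifest, the kubelet restarts the API server pod on its own because the static pod manifest has changed.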

Kubernetes Configurations Video

I have made a video explaining these Kubernetes configurations. You can watch the video on YouTube.

Kubernetes Configurations Involved in Production Setup

It is very important to know the Kubernetes cluster configurations when working on real-world projects.

When it comes to self-hosted Kubernetes clusters, which primarily happen in on-premise environments, you need to know each and every configuration of the cluster control plane and the worker nodes.

Although there are automation tools available to set up the whole cluster, you need to work on the following.

- Request cluster certificates from the organization's network or security teams.

- Ensure the cluster control plane components use the correct TLS certificates.

- Configure the cluster DNS with the custom DNS servers.

When it comes to managed Kubernetes clusters, you will not have access to the control plane components.

However, having good knowledge of the cluster configuration would help during implementation and discussions with the cloud support teams to fix cluster-related issues.

Conclusion

In this guide, we have looked at the important Kubernetes cluster configurations that would help you in Kubernetes cluster administration activities.

Also, you can check out the detailed blog on Production Kubernetes cluster activities.

If you want to level up your Kubernetes skills, check out my 40+ comprehensive Kubernetes tutorials.