In this guide, you will learn how to mount OCI container images as volumes in Kubernetes Pods using the ImageVolume feature.

This feature is especially useful for ML projects that work with LLMs, as it allows you to package model data in OCI images and easily switch between different models.

ImageVolume (beta feature)

Kubernetes 1.31 introduced ImageVolume as an alpha feature that lets you mount OCI images as volumes directly in Kubernetes pods. In Kubernetes 1.33, it was promoted to beta.

So what are OCI images?

OCI images are images that follow the Open Container Initiative specifications. For example, Docker, Podman, containerd, and CRI-O all use the OCI image specification for their images.

You can use the ImageVolume feature to store binary artifacts in images and mount them into pods. A key point about this feature is that the volume is read-only.

Now, let's get started with the practical example.

Step 1: Enable the ImageVolume Feature Gate

The ImageVolume feature is not enabled by default.

So, to mount an OCI image as a pod volume, you first need to enable the ImageVolume feature gate in the Kubernetes cluster.

To enable this feature gate, modify the API server manifest and the kubelet config file as shown below.

To edit the API server manifest, open it using the following command.

    sudo vi /etc/kubernetes/manifests/kube-apiserver.yaml

Then add the following line to the kube-apiserver command arguments.

    - --feature-gates=ImageVolume=true

Once you save the file, the API server will restart automatically.
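
If you want to confirm that the API server picked up the gate, one option is to look at its metrics. The sketch below is only an illustration: it assumes the API server exposes the `kubernetes_feature_enabled` metric and that you have saved the metrics text to a file first (for example with `kubectl get --raw /metrics > metrics.txt`). The helper name `feature_gate_enabled` is hypothetical.

```python
import re
from typing import Optional

# Matches lines such as:
#   kubernetes_feature_enabled{name="ImageVolume",stage="BETA"} 1
# The metric name and label layout are assumptions about the API server output.
_FEATURE_LINE = re.compile(
    r'^kubernetes_feature_enabled\{(?P<labels>[^}]*)\}\s+(?P<value>\S+)\s*$'
)


def feature_gate_enabled(metrics_text: str, gate: str = "ImageVolume") -> Optional[bool]:
    """Return True/False if the gate is listed in the metrics text, else None."""
    for line in metrics_text.splitlines():
        match = _FEATURE_LINE.match(line.strip())
        if match is None:
            continue
        if f'name="{gate}"' in match.group("labels"):
            return float(match.group("value")) == 1.0
    return None
```

For example, `feature_gate_enabled(open("metrics.txt").read())` returns `True` when the gate is reported as enabled, `False` when it is reported as disabled, and `None` when the metric is not present at all.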

Now, modify the kubelet config file.

    sudo vi /var/lib/kubelet/config.yaml

And add the following block inside the config file.

    featureGates:
      ImageVolume: true

Then restart the kubelet.

    sudo systemctl daemon-reload
    sudo systemctl restart kubelet

Testing in Kind Cluster (Optional)

If you just want to try this feature and you don't have an active cluster, you can create a Kind cluster with the ImageVolume feature gate enabled at creation time.

    kind: Cluster
    apiVersion: kind.x-k8s.io/v1alpha4
    name: multi-node-cluster
    featureGates:
      ImageVolume: true
    nodes:
    - role: control-plane
      image: kindest/node:v1.33.1
      extraPortMappings:
      - containerPort: 30000
        hostPort: 30000
        protocol: TCP
      - containerPort: 31000
        hostPort: 31000
        protocol: TCP
      - containerPort: 32000
        hostPort: 32000
        protocol: TCP
      - containerPort: 80
        hostPort: 80
        protocol: TCP
      - containerPort: 443
        hostPort: 443
        protocol: TCP
    - role: worker
      image: kindest/node:v1.33.1
    - role: worker
      image: kindest/node:v1.33.1

Step 2: Build an OCI Image

The next step is to build an OCI image.

For this example, I am using a prediction model that I have locally. You can replace the model file with any file for testing.

Here is the Dockerfile.

    FROM scratch
    COPY model.pkl /models/model.pkl

The resulting image is referenced in this guide as devopscube/oci-image:1.0. You can use it directly for testing.

Step 3: Deploy a Predictor Application

To test the ImageVolume, we will deploy a simple Python predictor application. This application loads the model.pkl file directly from the mounted image volume.

The application image is devopscube/predictor:1.0, which you can use for testing.

Here is the Python code that is part of the predictor image.

    import os

    import joblib
    import numpy as np
    from fastapi import FastAPI
    from pydantic import BaseModel

    # Path to the model file; overridden through the MODEL_PATH env variable.
    MODEL_PATH = os.environ.get("MODEL_PATH", "/models/model.pkl")
    model = joblib.load(MODEL_PATH)

    app = FastAPI()


    class PredictIn(BaseModel):
        instances: list


    @app.get("/healthz")
    def healthz():
        return {"ok": True, "model_path": MODEL_PATH}


    @app.post("/v1/models/model:predict")
    def predict(p: PredictIn):
        X = p.instances
        try:
            # Numeric inputs are passed to the model as a float array.
            X_arr = np.array(X, dtype=float)
            preds = model.predict(X_arr).tolist()
        except Exception:
            # Non-numeric inputs (for example strings) are passed as-is.
            preds = model.predict(X).tolist()
        return {"predictions": preds}

Step 4: Test the ImageVolume

Now, let's deploy the predictor image with the OCI image mounted as a volume to test the image volume.

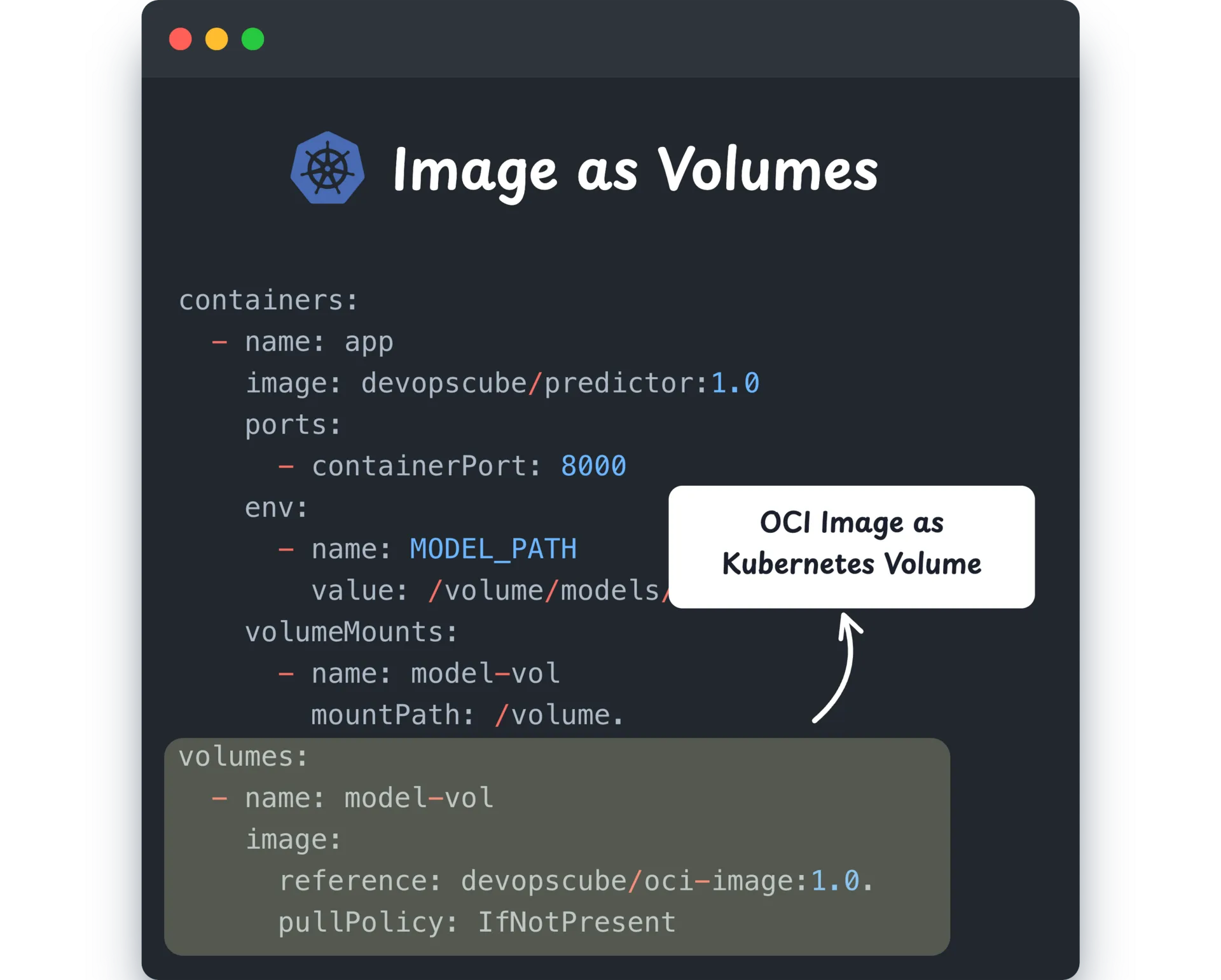

Here are the Deployment and Service manifests.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: predictor
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: predictor
      template:
        metadata:
          labels:
            app: predictor
        spec:
          containers:
          - name: app
            image: devopscube/predictor:1.0
            ports:
            - containerPort: 8000
            env:
            - name: MODEL_PATH
              value: /volume/models/model.pkl
            volumeMounts:
            - name: model-vol
              mountPath: /volume
          volumes:
          - name: model-vol
            image:
              reference: devopscube/oci-image:1.0
              pullPolicy: IfNotPresent
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: predictor-svc
    spec:
      selector:
        app: predictor
      ports:
      - port: 80
        targetPort: 8000

Deploy the above manifest. Once the pod is running without issues, check that the model.pkl file is inside the volume (use your own pod name in the command).

    $ kubectl exec -it predictor-7f7ff66689-gwg5w -- ls /volume/models
    model.pkl

Now run the following command to port-forward the predictor service so that we can test the prediction endpoint.

    kubectl port-forward svc/predictor-svc 8080:80

Now, use the following curl command from your workstation to send a prediction request to the predictor application. This will validate whether the application is able to access the model.pkl file from the ImageVolume.

    curl -X POST \
      -H "Content-Type: application/json" \
      -d '{
        "instances": [
          "sparrow",
          "elephant",
          "rose"
        ]
      }' \
      "http://127.0.0.1:8080/v1/models/model:predict"

The response is a JSON object with a predictions list containing one class label per input. In the expected output, 0 means animal, 1 means bird, and 2 means plant.
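
If you prefer to send the same request from Python instead of curl, here is a minimal sketch using only the standard library. It assumes the port-forward from the previous step is still running on 127.0.0.1:8080. The function names `build_predict_request` and `predict` are hypothetical helpers for this example and are not part of the predictor image.

```python
import json
import urllib.request

# Assumes `kubectl port-forward svc/predictor-svc 8080:80` is running locally.
DEFAULT_BASE_URL = "http://127.0.0.1:8080"


def build_predict_request(instances, base_url=DEFAULT_BASE_URL):
    """Build the POST request for the predictor's predict endpoint."""
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url=f"{base_url}/v1/models/model:predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def predict(instances, base_url=DEFAULT_BASE_URL, timeout=10.0):
    """Send the request and return the list under the "predictions" key."""
    request = build_predict_request(instances, base_url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        payload = json.loads(response.read().decode("utf-8"))
    return payload["predictions"]
```

Calling `predict(["sparrow", "elephant", "rose"])` sends the same payload as the curl command and returns the predictions list from the response.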

That’s a wrap! 🎉

Conclusion

In this post, you learned how to enable the ImageVolume feature in Kubernetes, build an OCI image, and test it with a sample predictor ML application.

This feature makes it easy to package data, tools, or models and switch between models without managing complex storage setups.
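
As a rough sketch of what switching models could look like, the snippet below builds a patch that points the model-vol volume of the predictor Deployment at a different image. The tag devopscube/oci-image:2.0 is a hypothetical example and does not exist in this guide, and the helper name `image_volume_patch` is made up for illustration. It also assumes the patch is applied as a strategic merge patch, where volumes are matched by name.

```python
import json


def image_volume_patch(volume_name, reference):
    """Build a Deployment patch that changes the image behind an image volume."""
    return {
        "spec": {
            "template": {
                "spec": {
                    "volumes": [
                        # The volume is matched by name; only the reference changes.
                        {"name": volume_name, "image": {"reference": reference}}
                    ]
                }
            }
        }
    }


# Hypothetical new model image; replace with a tag you have actually pushed.
patch = image_volume_patch("model-vol", "devopscube/oci-image:2.0")
print(json.dumps(patch, indent=2))
```

You could save the printed JSON to a file and apply it with something like `kubectl patch deployment predictor --patch-file patch.json`. Because the pod template changes, the Deployment rolls out a new pod that mounts the new image.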

Refer to the Kubernetes ML features blog to learn more about native ML support in Kubernetes.

If you want to learn more Kubernetes concepts, look at our Kubernetes tutorial blog.

Try this feature and let me know how it goes!