In this comprehensive guide, I explain what Docker is, how it evolved, the core Linux concepts underneath it, and how it works.

Docker has become the de facto standard for container-based implementations. It underpins container-based deployments ranging from small-scale setups to large-scale enterprise applications.

Docker gained popularity and adoption in the DevOps community quickly because it was built for portability and suits modern microservice architectures.

In this blog, you will learn:

- What is Docker?

- Why Docker is beneficial and how it differs from other container technologies

- Docker core architecture and its key components

- Container evolution and the underlying concept of Linux Containers

- What is a container, and what Linux features make it work?

- The difference between a process, container, and a VM

The idea is to get the basics right so that you understand what Docker really is and how it works.

What is Docker?

Docker is a popular open-source project written in Go and developed by dotCloud (a PaaS company).

It is a container engine that uses Linux kernel features such as namespaces and control groups (cgroups) to create containers on top of an operating system, which is why it is often described as OS-level virtualization.

Docker was initially built on top of Linux Containers (LXC). Later, Docker replaced LXC with its own container runtime, libcontainer (now part of runc). I explain the core LXC and container concepts towards the end of the article.

You might ask how Docker differs from Linux Containers (LXC), since the concepts and implementation look similar.
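If you want to see namespaces for yourself, Linux exposes them under `/proc/<pid>/ns`, where each entry is a symlink naming the namespace type and its inode number. The following is a minimal Python sketch (the function names are my own, not part of Docker or any library) that reads those links and reports which namespaces two processes share. It assumes a Linux host; reading another user's process, such as a container's PID seen from the host, generally requires root.

```python
import os


def read_namespaces(pid="self", proc_root="/proc"):
    """Map namespace type -> identifier for a process, e.g. {"pid": "pid:[4026531836]"}.

    Each entry in /proc/<pid>/ns is a symlink whose target names the namespace
    type and its inode number; equal targets mean the same namespace.
    """
    ns_dir = os.path.join(proc_root, str(pid), "ns")
    return {name: os.readlink(os.path.join(ns_dir, name)) for name in sorted(os.listdir(ns_dir))}


def shared_namespaces(pid_a, pid_b, proc_root="/proc"):
    """Return, sorted, the namespace types that two processes have in common."""
    a = read_namespaces(pid_a, proc_root)
    b = read_namespaces(pid_b, proc_root)
    return sorted(name for name in a.keys() & b.keys() if a[name] == b[name])
```

On the host, you can get a container's main PID with `docker inspect --format '{{.State.Pid}}' <container>` and, as root, pass it to `shared_namespaces("self", pid)`. For a default `docker run`, expect the pid, net, mnt, uts, and ipc namespaces to differ from the host's, while the user namespace is usually shared unless user-namespace remapping is enabled.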

Besides being a container technology, Docker has well-defined wrapper components that make packaging applications easy. Before Docker, running containers was not easy. Docker does the work of decoupling your application from the infrastructure by packaging all of the application's system requirements into a container.

For example, if you have a Java JAR file, you can run it on any server that has Java installed. In the same way, once you package the required applications into a container with Docker, you can run it on any other host that has Docker installed.

You can get containers up and running with just a few Docker commands and parameters.

Difference Between Docker & Container

Docker is a technology or a tool developed to manage containers efficiently.

So, can I run a container without Docker?

Yes, of course. You can use LXC to run containers on Linux servers. Newer tools such as Podman also offer Docker-like workflows.

Things you should know about Docker:

- Docker is not LXC.

- Docker is not a virtual machine solution.

- Docker is not a configuration management system and is not a replacement for Chef, Puppet, Ansible, etc.

- Docker is not a platform-as-a-service (PaaS) technology.

- Docker is not a container.

What Makes Docker So Great?

Docker has an efficient workflow for moving an application from the developer's laptop to the test environment and then to production. You will understand more about it when you look at a practical example of packaging an application into a Docker image.

Did you know that starting a Docker container typically takes less than a second?

It is fast because there is no guest operating system to boot, and a container can run on any host with a compatible Linux kernel. (Docker supports Windows as well.)

Docker uses a copy-on-write (CoW) union file system for image storage. When a container modifies data, only the changes are written to disk, in the container's own layer, while the underlying image layers stay unchanged.

With copy-on-write, all containers created from the same image share its read-only layers, which optimizes storage.
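To make the copy-on-write idea concrete, here is a toy, in-memory model in Python. It is an illustration only: real Docker delegates this to a storage driver such as overlay2, and the `UnionFS` class and `WHITEOUT` marker below are hypothetical names. Read-only image layers are stacked with `collections.ChainMap`, every write lands in the container's own top layer, modifying an existing file first copies it up, and deleting a file from a lower layer records a whiteout instead of touching that layer.

```python
from collections import ChainMap

WHITEOUT = object()  # marker recorded in the upper layer when a lower file is deleted


class UnionFS:
    """Toy union filesystem: shared read-only image layers plus one writable layer."""

    def __init__(self, *image_layers):
        # image_layers are listed base-first; ChainMap searches its maps in order,
        # so the writable layer goes first and the image layers are reversed.
        self.upper = {}
        self.view = ChainMap(self.upper, *reversed(image_layers))

    def read(self, path):
        data = self.view.get(path, WHITEOUT)  # a missing path behaves like a deleted one
        if data is WHITEOUT:
            raise FileNotFoundError(path)
        return data

    def write(self, path, data):
        # New or fully replaced files go straight into the writable layer.
        self.upper[path] = data

    def append(self, path, data):
        # Copy-up: the first modification copies the whole file from the lower
        # layer into the writable layer, and only that copy is changed.
        self.upper[path] = self.read(path) + data

    def delete(self, path):
        # Lower layers are read-only, so deletion is recorded as a whiteout.
        self.read(path)  # raises FileNotFoundError if the file is not visible
        self.upper[path] = WHITEOUT
```

Two `UnionFS` objects built from the same layer dicts share those layers and differ only in their small `upper` dicts, which is where the storage savings come from.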

Docker Adoption Statistics

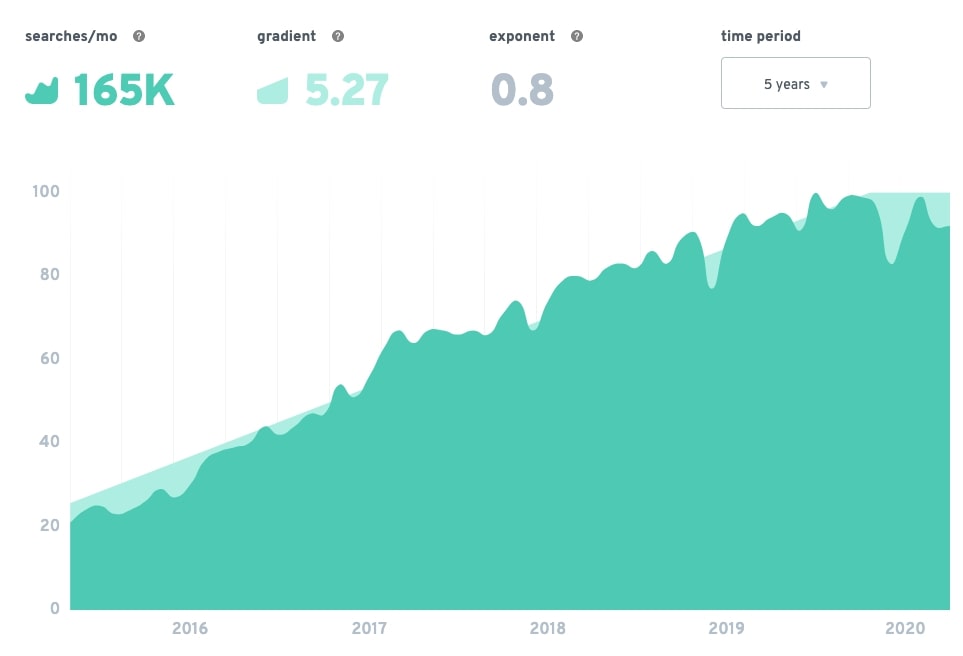

Here is the Google Trends data on Docker. You can see it has been an exploding topic for the last five years.

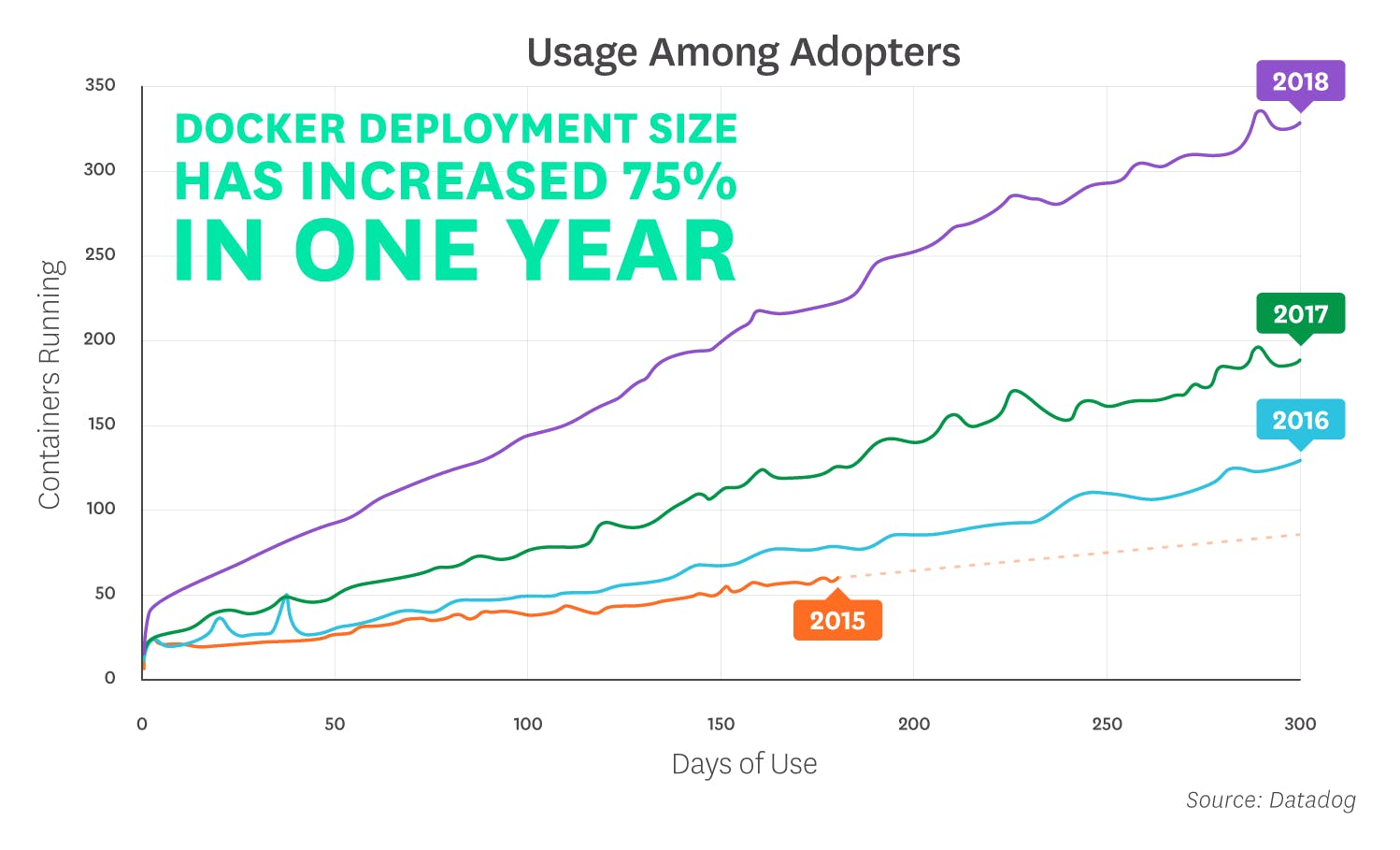

Here is a survey result from Datadog, which shows the rise in Docker adoption.

Docker Core Architecture

The following sections will look at the Docker architecture and its associated components. We will also look at how each component works together to make Docker work.

Docker architecture has changed a few times since its inception. When I published the first version of this article, Docker was built on top of LXC.

Here are some notable changes to Docker's architecture:

- Docker moved from LXC to libcontainer in 2014.

- runc - a CLI tool for spawning and running containers according to the OCI specification.

- containerd - Docker split its container management component out into containerd in 2016.

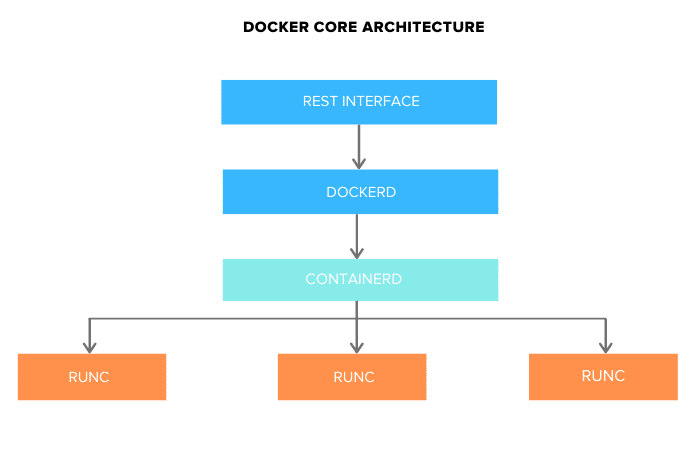

When Docker was initially launched, it had a monolithic architecture. Now it is split into the following three components:

- Docker Engine (dockerd)

- docker-containerd (containerd)

- docker-runc (runc)

Docker and other large organizations contributed to a standard container runtime and management layer. As a result, containerd is now a Cloud Native Computing Foundation (CNCF) project and runc is maintained under the Open Container Initiative (OCI), both with contributors from many organizations.

Now let's look at each Docker component.

Docker Engine

Docker Engine comprises the Docker daemon, a REST API, and the Docker CLI. The Docker daemon (dockerd) runs continuously, typically as the docker systemd service. It is responsible for building Docker images.

To manage images and run containers, dockerd calls the docker-containerd APIs.

docker-containerd (containerd)

containerd is another system daemon that is responsible for downloading Docker images and running them as containers. It exposes an API through which it receives instructions from dockerd.

docker-runc

runc is the container runtime responsible for creating the namespaces and cgroups required for a container. It then runs the container's commands inside those namespaces. runc implements the OCI runtime specification.
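runc places each container's processes in a cgroup, and Linux records every process's cgroup membership in `/proc/<pid>/cgroup` as lines of the form `hierarchy-ID:controller-list:cgroup-path` (a single `0::<path>` line on cgroup v2). The Python sketch below parses that format and, as a hypothetical helper, tries to recover a Docker container ID from the path. The path patterns it looks for (`docker-<id>.scope` with the systemd cgroup driver, `/docker/<id>` with cgroupfs) are common defaults rather than guarantees, and inside a container with its own cgroup namespace the path is often just `/`, so the helper is meant for PIDs inspected from the host.

```python
HEX_DIGITS = set("0123456789abcdef")


def parse_proc_cgroup(text):
    """Parse /proc/<pid>/cgroup contents into (hierarchy_id, controllers, path) tuples."""
    entries = []
    for line in text.splitlines():
        if not line.strip():
            continue
        # maxsplit=2 keeps any ':' that appears inside the cgroup path itself
        hierarchy_id, controllers, path = line.split(":", 2)
        entries.append((int(hierarchy_id), controllers.split(",") if controllers else [], path))
    return entries


def docker_container_id(text):
    """Best-effort guess at a 64-hex-digit Docker container ID (hypothetical helper)."""
    for _, _, path in parse_proc_cgroup(text):
        for part in path.split("/"):
            # systemd driver: "docker-<id>.scope"; cgroupfs driver: "/docker/<id>"
            candidate = part.removeprefix("docker-").removesuffix(".scope")
            if len(candidate) == 64 and set(candidate) <= HEX_DIGITS:
                return candidate
    return None
```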

Read this excellent 3 part blog post series to understand more about container runtimes.

How Does Docker Work?

We have seen the core building blocks of Docker.

Now let's understand the Docker workflow using the Docker components.

Docker Components

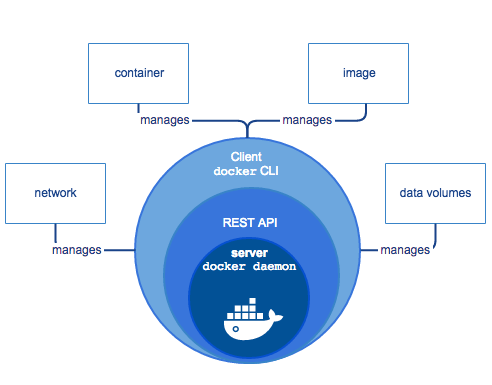

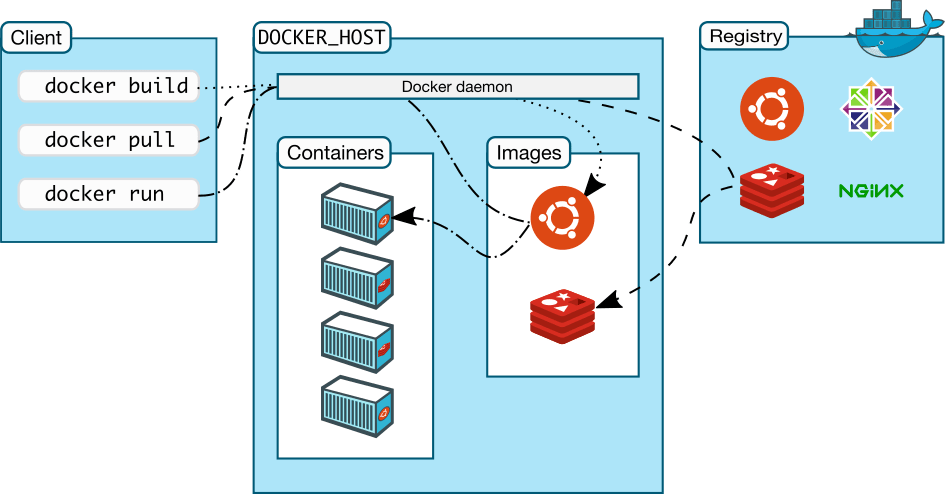

The following official high-level docker architecture diagram shows the common Docker workflow.

The Docker ecosystem is composed of the following five components:

- Docker Daemon (dockerd)

- Docker Client

- Docker Images

- Docker Registries

- Docker Containers

What is a Docker Daemon?

Docker has a client-server architecture. The Docker daemon (dockerd), or server, is responsible for all container-related actions.

The daemon receives commands from the Docker client through the CLI or the REST API. The Docker client can run on the same host as the daemon or on a different host.

By default, the Docker daemon listens on the /var/run/docker.sock UNIX socket. If you need to access the Docker API remotely, you have to expose it over a host port. One such use case is running Docker as Jenkins agents.

If you want to run Docker inside Docker, you can mount the host machine's docker.sock into the container.
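Because the Docker Engine API is plain HTTP served over that UNIX socket, you can talk to the daemon without the docker CLI. Below is a minimal Python sketch using only the standard library. It assumes the default Linux socket path `/var/run/docker.sock` and a user with access to it (typically root or a member of the docker group); `/_ping` and `/version` are Engine API endpoints, while the class and function names are my own.

```python
import http.client
import socket

DEFAULT_DOCKER_SOCKET = "/var/run/docker.sock"  # default dockerd socket on Linux


class UnixHTTPConnection(http.client.HTTPConnection):
    """An HTTPConnection that talks to a UNIX domain socket instead of TCP."""

    def __init__(self, socket_path, timeout=5.0):
        # "localhost" only fills the Host header; socket_path decides where we connect.
        super().__init__("localhost", timeout=timeout)
        self.socket_path = socket_path

    def connect(self):
        sock = socket.socket(socket.AF_UNIX, socket.SOCK_STREAM)
        sock.settimeout(self.timeout)
        try:
            sock.connect(self.socket_path)
        except OSError:
            sock.close()
            raise
        self.sock = sock


def docker_api_get(path, socket_path=DEFAULT_DOCKER_SOCKET):
    """Send GET <path> to the Docker Engine API and return (status, body bytes)."""
    conn = UnixHTTPConnection(socket_path)
    try:
        conn.request("GET", path)
        response = conn.getresponse()
        return response.status, response.read()
    finally:
        conn.close()
```

Against a running daemon, `docker_api_get("/_ping")` should return a 200 status with the body `b"OK"`, and decoding the body of `docker_api_get("/version")` with `json.loads` gives roughly the server information that `docker version` prints.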

What is a Docker Image?

Images are the basic building blocks of Docker. They contain the OS libraries, dependencies, and tools needed to run an application.

Images can be prebuilt with application dependencies for creating containers. For example, if you want to run an Nginx web server as an Ubuntu container, you need to create a Docker image with the Nginx binary and all the OS libraries required to run Nginx.

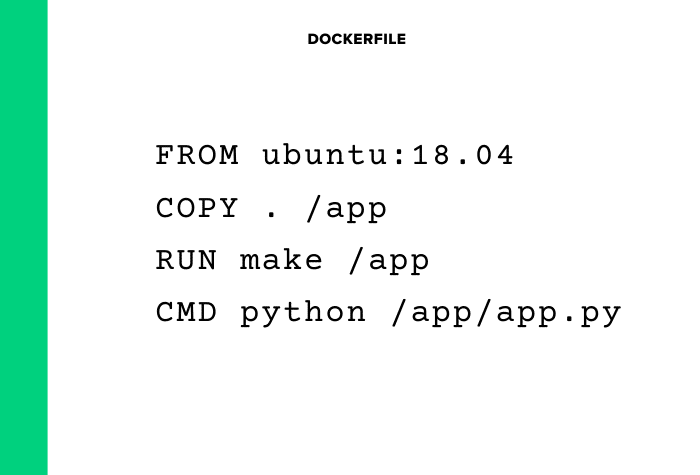

What is a Dockerfile?

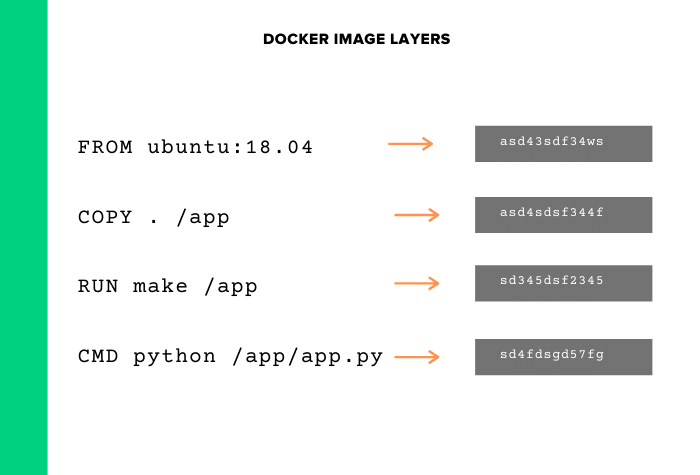

Docker uses a Dockerfile to build an image. A Dockerfile is a text file that contains one instruction per line.

Here is an example of a Dockerfile.

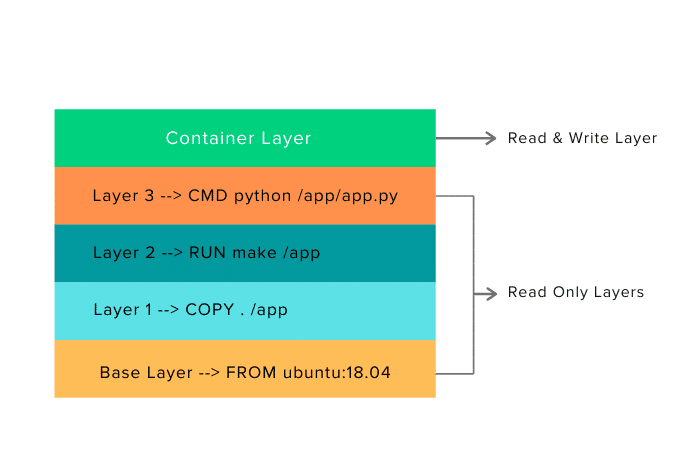

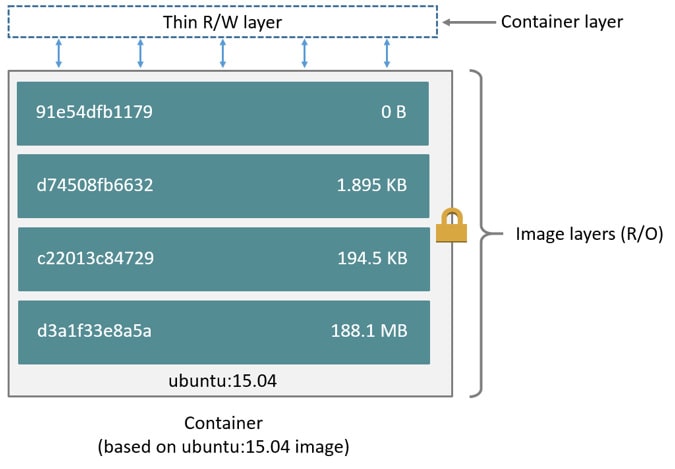

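A Docker image is organized in layers. Each instruction in a Dockerfile adds a layer to the image (strictly, only instructions that change the filesystem, such as RUN, COPY, and ADD, add content layers; the others only record metadata). A running container is the image plus a writable layer on top.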
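According to Docker's best-practices documentation, only RUN, COPY, and ADD create filesystem layers; instructions such as LABEL, EXPOSE, and CMD only change image metadata, and FROM starts from the base image's existing layers. The Python sketch below sorts a Dockerfile's instructions along those lines. `plan_layers` is a hypothetical helper with a deliberately simplified parser: it joins backslash-continued lines, treats parser directives as comments, and does not handle heredocs or distinguish the stages of a multi-stage build.

```python
LAYER_INSTRUCTIONS = {"RUN", "COPY", "ADD"}  # instructions that add filesystem content


def plan_layers(dockerfile_text):
    """Split Dockerfile instructions into layer-creating and metadata-only lists."""
    logical_lines, current = [], ""
    for raw in dockerfile_text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#"):
            continue  # blank lines and comments never produce instructions
        if line.endswith("\\"):
            current += line[:-1].rstrip() + " "  # continuation: keep collecting
            continue
        logical_lines.append(current + line)
        current = ""
    if current:  # the file ended with a dangling continuation
        logical_lines.append(current.rstrip())

    layers, metadata = [], []
    for line in logical_lines:
        instruction = line.split(None, 1)[0].upper()
        (layers if instruction in LAYER_INSTRUCTIONS else metadata).append(line)
    return layers, metadata
```

This is also why chaining commands such as `apt-get update && apt-get install` into a single RUN instruction keeps an image to fewer layers.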

Every image is created from a base image.

For example, you can use an Ubuntu base image and create another image with the Nginx application on top of it. A base image can be a parent image or an image built from a parent image. Check out this Docker article to learn more about it.

You might ask where this base image (parent image) comes from. There are Docker utilities to create the initial parent base image: they take the required OS libraries and bake them into a base image. You usually don't have to do this, because official base images are available for Linux distributions.

The layers that make up an image are read-only. When a container runs, Docker adds a writable layer on top of them, and the container's changes go there.

What is a Docker Registry?

A Docker registry is a repository (storage) for Docker images.

A registry can be public or private. For example, Docker Inc provides a hosted registry service called Docker Hub. It allows you to upload and download images from a central location.

If your repository is public, other Docker Hub users can access all of its images. You can also create private repositories in Docker Hub.

Docker Hub works somewhat like a Git remote: you build your images locally on your laptop, commit them, and then push them to Docker Hub.
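Image names you push and pull are usually shorthand. For example, `docker pull nginx` fetches `docker.io/library/nginx:latest`: the registry defaults to Docker Hub, official images live under the `library/` namespace, and the tag defaults to `latest`. The Python sketch below mirrors those normalization rules in simplified form. `normalize_image_ref` is a hypothetical helper; it does no validation, and the reference parser Docker actually uses handles more cases.

```python
def normalize_image_ref(ref):
    """Expand an image reference into (registry, repository, tag, digest)."""
    name, _, digest = ref.partition("@")  # "repo@sha256:..." pins a digest
    first, sep, rest = name.partition("/")
    # The first path component is a registry host only if it looks like one.
    if sep and ("." in first or ":" in first or first == "localhost"):
        registry, remainder = first, rest
    else:
        registry, remainder = "docker.io", name
    if registry == "index.docker.io":
        registry = "docker.io"
    repo, colon, tag = remainder.rpartition(":")
    if not colon:  # no tag given: rpartition put everything into `tag`
        repo, tag = remainder, ""
    if registry == "docker.io" and "/" not in repo:
        repo = "library/" + repo  # official images live under library/
    if not tag and not digest:
        tag = "latest"
    return registry, repo, tag or None, digest or None
```

For instance, pushing to a private registry means tagging the image with a name whose first component is that registry's host (such as `localhost:5000/team/app`), which is exactly the case the registry check above detects.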

What is a Docker Container?

Docker containers are created from existing images. A container is essentially a writable layer on top of the image's read-only layers.

If you try to relate image layers and a container, here is how it looks for an Ubuntu-based image.

You can package your applications in a container, commit it, and make it a golden image to build more containers from it.

Containers can be started, stopped, committed, and removed. If you remove a container without committing it, all the changes made in that container are lost.

Ideally, containers are treated as immutable objects, so making changes to a running container is not recommended except for testing purposes.

Two or more containers can be linked together to form a tiered application architecture. Hosting highly scalable applications with Docker has become much easier with the advent of container orchestration tools such as Kubernetes.

Docker FAQs

What is the difference between containerd & runc?

containerd is responsible for managing containers, and runc is responsible for running them (creating namespaces and cgroups and running commands inside the container) using the inputs it receives from containerd.

What is the difference between the Docker engine & the Docker daemon?

Docker Engine is composed of the Docker daemon, the REST API, and the Docker CLI. The Docker daemon is dockerd, usually run as a systemd service, and it is responsible for building Docker images and sending instructions to the containerd runtime.
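To tie the two FAQ answers together, here is a toy Python model of the delegation chain described in this article: dockerd receives a client request, containerd pulls the image and creates the container, and runc sets up isolation and starts the process. The classes are hypothetical stand-ins that only append to a shared log. The real components talk over REST and gRPC APIs, and a real setup also runs a containerd shim process between containerd and runc, which this sketch omits.

```python
import itertools


class Runc:
    """Stand-in for the OCI runtime: isolates and starts one container process."""

    def __init__(self, log):
        self.log = log

    def run(self, container_id, rootfs, cmd):
        self.log.append(f"runc: set up namespaces + cgroups for {container_id}")
        self.log.append(f"runc: exec {cmd} with root {rootfs}")


class Containerd:
    """Stand-in for containerd: owns images and container lifecycle, delegates execution."""

    def __init__(self, log, runtime):
        self.log, self.runtime = log, runtime
        self.snapshots = {}  # image name -> unpacked root filesystem (simulated path)
        self._ids = itertools.count(1)

    def pull(self, image):
        if image not in self.snapshots:  # pull each image only once
            self.log.append(f"containerd: pull {image}")
            self.snapshots[image] = f"/snapshots/{image}"

    def start(self, image, cmd):
        self.pull(image)
        container_id = f"c{next(self._ids)}"
        self.log.append(f"containerd: create {container_id} from {image}")
        self.runtime.run(container_id, self.snapshots[image], cmd)
        return container_id


class Dockerd:
    """Stand-in for dockerd: accepts client requests and forwards them to containerd."""

    def __init__(self, log, containerd):
        self.log, self.containerd = log, containerd

    def handle(self, request):
        self.log.append(f"dockerd: received {request['action']} from client")
        if request["action"] == "run":
            return self.containerd.start(request["image"], request["cmd"])
        raise ValueError(f"unsupported action: {request['action']}")
```

Wiring it up as `Dockerd(log, Containerd(log, Runc(log)))` and sending a "run" request produces log entries in the order dockerd, containerd, runc, which is the same top-down flow as the architecture described above.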

Conclusion

By now, you should have a good understanding of what Docker is and how it works.

The best feature of Docker is collaboration. Docker images can be pushed to a repository and pulled down to any other host to run containers from that image.

Docker Hub also hosts thousands of user-created images, and you can pull them down to your hosts based on your application requirements. Docker images are also widely used with container orchestration tools such as Kubernetes.

Go ahead and install Docker, and then you can start building your own Docker images. Check out the comprehensive build docker image guide to deploy your first container.

If you want to run Docker for production workloads, make sure you follow the recommended practices for Docker images.

You can read my article on how to reduce Docker image size, where I list the standard approaches to optimizing Docker images.

Also, if you are trying to become a DevOps engineer, I highly recommend you get hands-on experience with Docker.