In this blog, I will walk you through the steps required to run docker in docker using three different methods.

Run Docker in a Docker Container

There are three ways to achieve Docker in Docker:

- Run Docker by mounting docker.sock (DooD method)

- Docker dind method

- Using the Nestybox sysbox Docker runtime

Let's have a look at each option in detail. Make sure you have Docker installed on your host to try this setup.

Method 1: Docker in Docker Using [/var/run/docker.sock]

![Docker in Docker Using [/var/run/docker.sock]](https://devopscube.com/content/images/2025/03/docker-docker-unix-socket-1.png)

What is /var/run/docker.sock?

/var/run/docker.sock is the default Unix socket that the Docker daemon listens on. Unix sockets are meant for communication between processes on the same host.

If you are on the same host where the Docker daemon is running, you can use /var/run/docker.sock to manage containers. This also means you can mount the Docker socket from the host into a container.

For example, if you run the following command, it will return the version of the docker engine.

curl --unix-socket /var/run/docker.sock http://localhost/version

Now that you have a bit of understanding of what docker.sock is, let's see how to run Docker in Docker using docker.sock.

To run docker inside docker, all you have to do is run docker with the default Unix socket docker.sock as a volume.

For example,

docker run -v /var/run/docker.sock:/var/run/docker.sock -ti docker

If a container has access to docker.sock, it has more privileges over your Docker daemon. So when you use it in real projects, understand the security risks first.

Now, from within the container, you should be able to execute docker commands for building and pushing images to the registry.

Here, the actual docker operations happen on the VM host running your base docker container rather than inside the container. Even though you are executing the docker commands from within the container, you are instructing the docker client to connect to the VM host's Docker engine through docker.sock.
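One way to see this for yourself is to ask the docker client which daemon it is connected to. The following is a minimal sketch, assuming it runs inside a container that has docker.sock mounted and a docker CLI installed. The function names daemon_name and uses_outer_daemon are made up for illustration, and the hostname comparison is only a heuristic: it fails if the container happens to share the host's hostname.

```shell
#!/bin/sh
# Sketch: report which Docker daemon the local client is talking to.
# Assumption: run inside a container that has /var/run/docker.sock mounted
# and a docker CLI available.

# Prints the hostname reported by the daemon the client is connected to.
daemon_name() {
    docker info --format '{{.Name}}'
}

# Succeeds when the daemon's hostname differs from this machine's hostname,
# which suggests the commands are executed by a daemon outside this container.
uses_outer_daemon() {
    [ "$(daemon_name)" != "$(hostname)" ]
}

# Example usage (uncomment to run):
# if uses_outer_daemon; then
#     echo "docker commands run on: $(daemon_name)"
# fi
```

Inside a container, hostname normally prints the container ID, while the daemon reports the name of the VM host, so the two values differ.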

To test this setup, use the official docker image from Docker Hub. It has the docker binary in it.

Follow the steps given below to test the setup.

Step 1: Start the Docker container in interactive mode mounting the docker.sock as volume. We will use the official docker image.

docker run -v /var/run/docker.sock:/var/run/docker.sock -ti docker

Step 2: Once you are inside the container, execute the following docker command.

docker pull ubuntu

Step 3: When you list the docker images, you should see the Ubuntu image along with other docker images in your host VM.

docker images

Step 4: Now create a Dockerfile inside the test directory.

mkdir test && cd test

vi Dockerfile

Copy the following Dockerfile contents to test the image build from within the container.

FROM ubuntu:18.04

LABEL maintainer="Bibin Wilson <bibinwilsonn@gmail.com>"

RUN apt-get update && \
    apt-get -qy full-upgrade && \
    apt-get install -qy curl && \
    curl -sSL https://get.docker.com/ | sh

Build the Dockerfile

docker build -t test-image .

docker.sock permission error

While using docker.sock, you may get a permission denied error. In that case, you need to change the docker.sock permission as follows.

sudo chmod 666 /var/run/docker.sock

Also, you might have to add the --privileged flag to give privileged access.

The docker.sock permission gets reset when the server restarts. To avoid this, you need to add the permission change to the system startup scripts.

For example, you can add the command to /etc/rc.local so that it runs automatically every time your server starts up.

Also, keep in mind that 666 permissions open a security hole. Consult with your security team before implementing this in production-level projects.
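A narrower alternative that may work in some setups is to leave the socket permissions alone and add the container user to the group that owns the socket. This is not from the steps above, only a hedged sketch: it assumes GNU stat on a Linux host, a socket that is group-writable, and a placeholder non-root user ID of 1000. The function names sock_gid and run_with_sock_group are hypothetical.

```shell
#!/bin/sh
# Sketch: grant a container access to docker.sock through the socket's group
# instead of making the socket world-writable.
# Assumptions: GNU stat (Linux); the image name "docker" and the non-root
# user ID 1000 are placeholders for illustration.

# Prints the numeric group ID that owns the given file (default: docker.sock).
sock_gid() {
    stat -c '%g' "${1:-/var/run/docker.sock}"
}

# Starts a container as a non-root user that is additionally a member of the
# socket's owning group, so the socket's permissions stay unchanged.
run_with_sock_group() {
    sock="${1:-/var/run/docker.sock}"
    docker run --rm -ti \
        --user 1000 \
        --group-add "$(sock_gid "$sock")" \
        -v "$sock":/var/run/docker.sock \
        docker
}

# Example usage (uncomment to run):
# run_with_sock_group
```

Membership in the socket's group still gives the container control of the host daemon, so the same security review applies.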

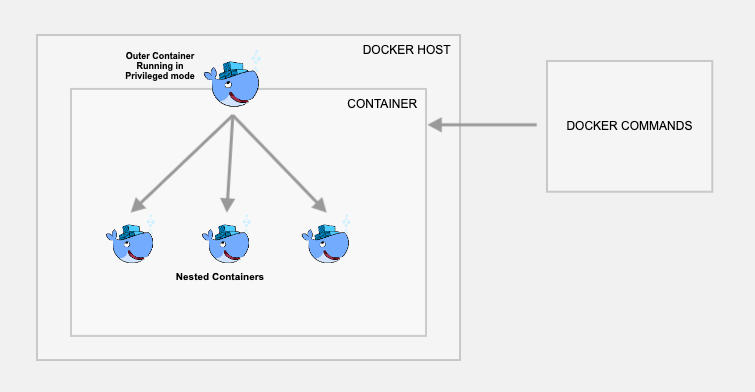

Method 2: Docker in Docker Using DinD

This method actually creates a child container inside a Docker container. Use this method only if you really want to have the containers and images inside the container. Otherwise, I would suggest you use the first approach.

For this, you just need to use the official docker image with dind tag. The dind image is baked with the required utilities for Docker to run inside a docker container.

Follow the steps to test the setup.

Step 1: Create a container named dind-test with docker:dind image

docker run --privileged -d --name dind-test docker:dind

Step 2: Log in to the container using exec.

docker exec -it dind-test /bin/sh

Now, perform steps 2 to 4 from the previous method and validate docker command-line instructions and image build.
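If docker commands fail right after the container starts, the inner daemon may still be initializing, since the container runs in detached mode. Below is a minimal sketch of a wait loop, assuming the container name dind-test from Step 1. The function name wait_for_dind and the 30 second default timeout are illustrative choices, not part of the docker image.

```shell
#!/bin/sh
# Sketch: wait until the Docker daemon inside a dind container answers.
# Assumption: the container name and timeout are passed by the caller;
# "dind-test" matches the name used in Step 1.

# wait_for_dind NAME [TIMEOUT_SECONDS]
# Polls "docker info" inside the container once per second.
# Returns 0 as soon as the inner daemon responds, 1 on timeout.
wait_for_dind() {
    name="$1"
    remaining="${2:-30}"
    while [ "$remaining" -gt 0 ]; do
        if docker exec "$name" docker info >/dev/null 2>&1; then
            return 0
        fi
        remaining=$((remaining - 1))
        sleep 1
    done
    return 1
}

# Example usage (uncomment to run):
# wait_for_dind dind-test 60 && docker exec -it dind-test /bin/sh
```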

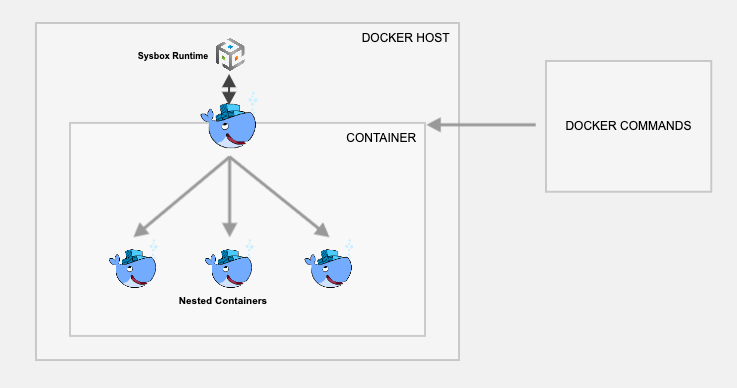

--privileged: gives full access to the host’s resources (needed for Docker to work inside the container). It is suitable for CI systems or isolated environments, but it is not safe for production setups because of the security risk that full access creates.

Method 3: Docker in Docker Using Sysbox Runtime

Methods 1 & 2 have some disadvantages in terms of security because the base containers get privileged access, either through the host's Docker socket or through privileged mode. Nestybox tries to solve that problem with the sysbox Docker runtime.

If you create a container using the Nestybox sysbox runtime, it can create virtual environments inside the container that are capable of running systemd, Docker, and Kubernetes without privileged access to the underlying host system.

Explaining sysbox in depth takes considerable background, so I have left it out of the scope of this post. Please refer to this page to understand sysbox fully.

To get a glimpse, let us now try out an example.

Step 1: Install the sysbox runtime environment. Refer to this page to get the latest official instructions on installing sysbox runtime.
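After the installation, it can help to confirm that the Docker daemon actually lists the runtime before you start a container with it. The following is a small sketch under the assumption that the runtime is registered under the name sysbox-runc. The helper name has_runtime is hypothetical, and it does a plain text match on the daemon's JSON output instead of parsing it.

```shell
#!/bin/sh
# Sketch: check whether a named runtime is registered with the Docker daemon.
# Assumption: "sysbox-runc" is the runtime name used in this post.

# has_runtime NAME
# Succeeds if "NAME": appears in the daemon's runtime list, which is how a
# runtime key looks in the compact JSON printed by docker info.
has_runtime() {
    docker info --format '{{json .Runtimes}}' | grep -qF "\"$1\":"
}

# Example usage (uncomment to run):
# has_runtime sysbox-runc && echo "sysbox runtime is available"
```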

Step 2: Once you have the sysbox runtime available, all you have to do is start the docker container with a sysbox runtime flag as shown below. Here we are using the official docker dind image.

docker run --runtime=sysbox-runc --name sysbox-dind -d docker:dind

Step 3: Now take an exec session to the sysbox-dind container.

docker exec -it sysbox-dind /bin/sh

Now, you can try building images with the Dockerfile as shown in the previous methods.

Key Considerations

- Use Docker in Docker only if it is a requirement. Do the POCs and enough testing before migrating any workflow to the Docker-in-Docker method.

- While using containers in privileged mode, make sure you get the necessary approvals from enterprise security teams on what you are planning to do.

- When using Docker in Docker with kubernetes pods there are certain challenges. Refer to this blog to know more about it.

- If you plan to use Nestybox (Sysbox), make sure it is tested and approved by enterprise architects/security teams.

Docker in Docker Use Cases

Here are a few use cases to run docker inside a docker container.

- One potential use case for docker in docker is for the CI/CD pipeline, where you need to build and push docker images to a container registry after a successful code build.

- Modern CI/CD systems support Docker-based agents or runners where you can run all the build steps inside a container and build container images inside a container agent.

- Building Docker images with a VM is pretty straightforward. However, when you plan to use Jenkins Docker-based dynamic agents for your CI/CD pipelines, docker in docker comes as a must-have functionality.

- Sandboxed environments.

- For experimental purposes on your local development workstation.
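The CI/CD use case above can be sketched as a single build step that a container agent runs. This is only an illustration: the registry host registry.example.com, the image name, and the function name build_and_push are hypothetical, and the sketch assumes the agent has already authenticated with docker login.

```shell
#!/bin/sh
# Sketch of a CI step that builds an image and pushes it to a registry.
# Assumptions: the registry host, image name, and tag below are hypothetical,
# and the agent has already authenticated with "docker login".

# build_and_push IMAGE TAG [CONTEXT_DIR]
# Builds the image from CONTEXT_DIR (default: current directory) and pushes
# it only if the build succeeds.
build_and_push() {
    image="$1"
    tag="$2"
    context="${3:-.}"
    docker build -t "$image:$tag" "$context" &&
        docker push "$image:$tag"
}

# Example usage (uncomment to run):
# build_and_push registry.example.com/team/app "$(git rev-parse --short HEAD)"
```

The same function works with any of the three methods, because in each case the agent only needs a docker CLI that can reach a daemon.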

FAQs

Here are some frequently asked Docker in Docker questions.

Is running Docker in Docker secure?

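Running Docker in Docker using the docker.sock and dind methods is less secure because the container gets elevated privileges: complete control of the host's Docker daemon with docker.sock, and privileged access to the host with dind.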

How to run docker in docker in Jenkins?

You can use the Jenkins dynamic docker agent setup and mount the docker.sock to the agent container to execute docker commands from within the agent container.

Is there any performance impact in running Docker in Docker?

The method you choose does not by itself affect container performance. The underlying hardware is what decides the performance.

Conclusion

In this blog, we looked at three different methods to run Docker in Docker. When using these methods in production environments, always consult your enterprise security team for compliance.

If you are using Kubernetes, you could try building Docker images using Kaniko, which does not require privileged access to the host.
