In this blog, we will learn how to integrate TLS certificates into a Kubernetes cluster using Cert-Manager and the Let's Encrypt Certificate Authority.

By the end of this blog, you will have learned:

- How to install Cert Manager

- How to configure the Let's Encrypt Certificate Authority with Cert Manager

What is Cert Manager?

SSL/TLS certificates are essential for Kubernetes Ingress objects to secure communication between users and the application.

Cert Manager is a Kubernetes add-on that creates and manages TLS certificates for resources such as Ingress objects, and automatically renews them before they expire.

Cert Manager works with Certificate Authorities (CAs) such as Let's Encrypt, HashiCorp Vault, etc.

Before setting up the Cert Manager, let us understand its workflow.

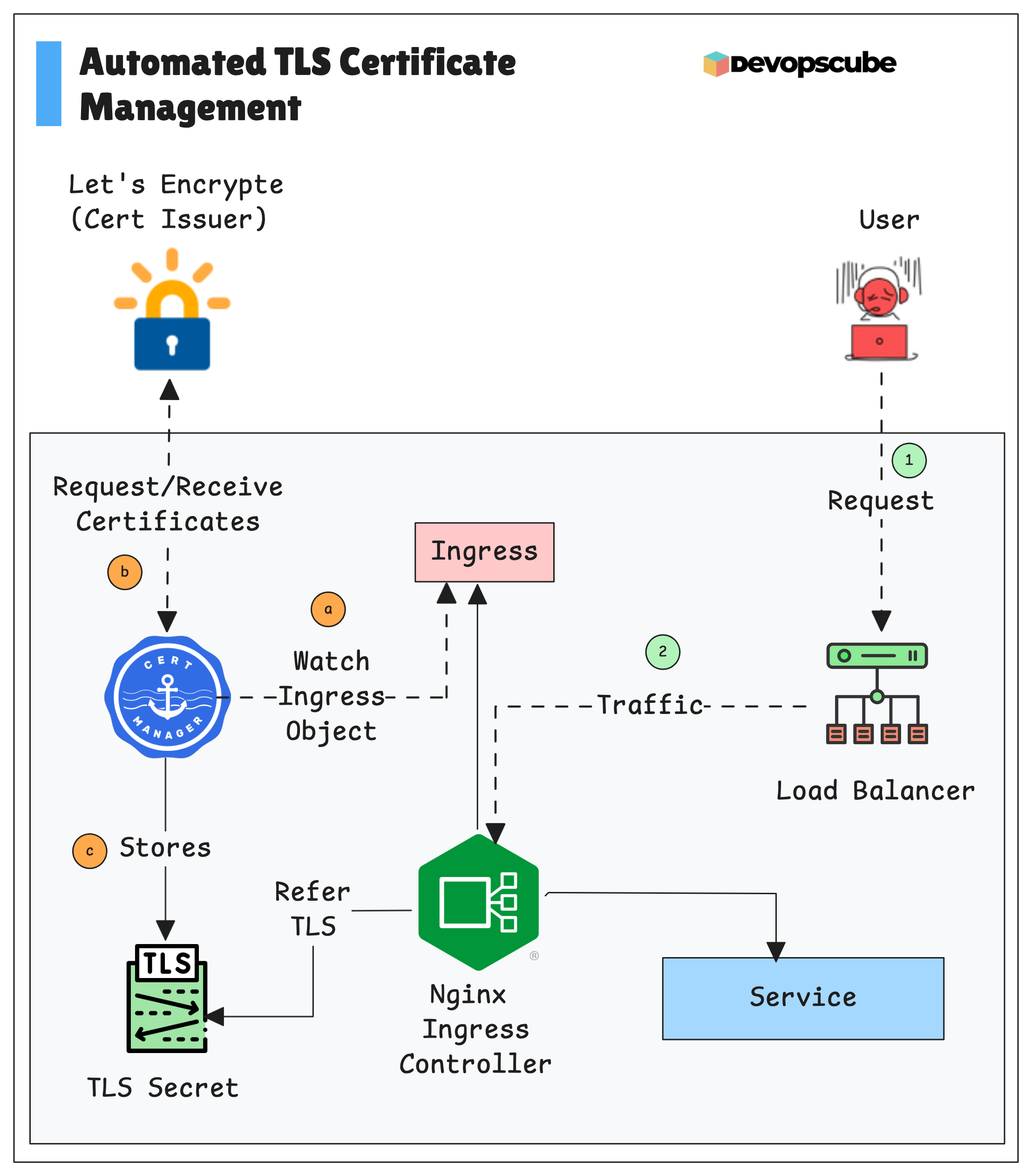

Cert Manager Workflow

The diagram above shows how Cert Manager works with the Kubernetes cluster to provision and manage the TLS certificates that secure the Ingress.

- When we create an Ingress object, we add an annotation to it that references a Cert Manager Issuer.

- Cert Manager watches Ingress objects, reads this annotation and the hosts in the Ingress's tls section, and creates a Certificate resource for them.

- Cert Manager requests the certificate from the Certificate Authority, for example, Let's Encrypt.

- After verifying that we control the domain name, the CA issues the certificate to Cert Manager.

- Cert Manager stores the certificate in Kubernetes as a TLS Secret.

- The Ingress Controller loads the certificate from this Secret.

- When a user accesses the application, external traffic is routed from the external Load Balancer to the Ingress Controller.

- The Ingress Controller terminates TLS using the certificate and routes the traffic to the application Pods.

How to Set Up Cert Manager on Kubernetes

We will install the Cert Manager using the Helm chart. Before adding the repository, we need to ensure the following prerequisites are met.

Prerequisites

- Kubernetes Cluster version 1.30+

- Nginx Ingress Controller [Kubernetes cluster]

- Helm [Local Workstation]

- Kubectl [Local Workstation]

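- DNS Provider (Route53, Cloudflare, etc.)

A DNS provider is required because our certificate issuer (Let's Encrypt) must verify that we control the domain name before it issues a certificate. With the HTTP-01 challenge used in this guide, the domain name must resolve to the Ingress Controller's Load Balancer.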
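To check the Kubernetes version prerequisite from a script, a small shell helper can compare a MAJOR.MINOR version string against the 1.30 minimum. This is a sketch: the function name version_at_least is hypothetical, and it assumes the version is passed as a plain string such as 1.31, v1.31.2 or 1.30+.

```bash
# Hypothetical helper (not part of kubectl): succeeds when the "MAJOR.MINOR"
# version in $1 is at least the minimum in $2 (default 1.30).
version_at_least() {
  have=${1#v}                           # tolerate a leading "v", e.g. v1.31
  want=${2:-1.30}
  have_major=${have%%.*}
  have_minor=${have#*.}
  have_minor=${have_minor%%[!0-9]*}     # "30+" -> "30", "31.2" -> "31"
  want_major=${want%%.*}
  want_minor=${want#*.}
  want_minor=${want_minor%%[!0-9]*}
  if [ "$have_major" -ne "$want_major" ]; then
    [ "$have_major" -gt "$want_major" ]
  else
    [ "$have_minor" -ge "$want_minor" ]
  fi
}
```

One way to feed it the cluster's version, assuming jq is installed: `version_at_least "$(kubectl version -o json | jq -r '.serverVersion.major + "." + .serverVersion.minor')" && echo "Kubernetes version OK"`.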

Step 1: Install Cert Manager on Kubernetes

First, we need to add the Cert Manager Helm repository.

```bash
helm repo add jetstack https://charts.jetstack.io --force-update
```

Update the repository before installation.

```bash
helm repo update
```

Now, we are ready to deploy Cert Manager. If you want to download the Helm chart to store it in your own repository, use the following command.

```bash
helm pull jetstack/cert-manager --untar
```

The following is the tree structure of the Helm chart.

```
cert-manager
├── Chart.yaml
├── README.md
├── templates
│   ├── NOTES.txt
│   ├── _helpers.tpl
│   ├── cainjector-config.yaml
│   ├── cainjector-deployment.yaml
│   ├── cainjector-poddisruptionbudget.yaml
│   ├── cainjector-psp-clusterrole.yaml
│   ├── cainjector-psp-clusterrolebinding.yaml
│   ├── cainjector-psp.yaml
│   ├── cainjector-rbac.yaml
│   ├── cainjector-service.yaml
│   ├── cainjector-serviceaccount.yaml
│   ├── controller-config.yaml
│   ├── crd-acme.cert-manager.io_challenges.yaml
│   ├── crd-acme.cert-manager.io_orders.yaml
│   ├── crd-cert-manager.io_certificaterequests.yaml
│   ├── crd-cert-manager.io_certificates.yaml
│   ├── crd-cert-manager.io_clusterissuers.yaml
│   ├── crd-cert-manager.io_issuers.yaml
│   ├── deployment.yaml
│   ├── extras-objects.yaml
│   ├── networkpolicy-egress.yaml
│   ├── networkpolicy-webhooks.yaml
│   ├── poddisruptionbudget.yaml
│   ├── podmonitor.yaml
│   ├── psp-clusterrole.yaml
│   ├── psp-clusterrolebinding.yaml
│   ├── psp.yaml
│   ├── rbac.yaml
│   ├── service.yaml
│   ├── serviceaccount.yaml
│   ├── servicemonitor.yaml
│   ├── startupapicheck-job.yaml
│   ├── startupapicheck-psp-clusterrole.yaml
│   ├── startupapicheck-psp-clusterrolebinding.yaml
│   ├── startupapicheck-psp.yaml
│   ├── startupapicheck-rbac.yaml
│   ├── startupapicheck-serviceaccount.yaml
│   ├── webhook-config.yaml
│   ├── webhook-deployment.yaml
│   ├── webhook-mutating-webhook.yaml
│   ├── webhook-poddisruptionbudget.yaml
│   ├── webhook-psp-clusterrole.yaml
│   ├── webhook-psp-clusterrolebinding.yaml
│   ├── webhook-psp.yaml
│   ├── webhook-rbac.yaml
│   ├── webhook-service.yaml
│   ├── webhook-serviceaccount.yaml
│   └── webhook-validating-webhook.yaml
├── values.schema.json
└── values.yaml

2 directories, 52 files
```

The following are the container images used in this Helm chart.

```
quay.io/jetstack/cert-manager-controller
quay.io/jetstack/cert-manager-webhook
quay.io/jetstack/cert-manager-cainjector
quay.io/jetstack/cert-manager-acmesolver
quay.io/jetstack/cert-manager-startupapicheck
```

The Helm chart includes a values file (values.yaml) that contains all the configurable values we can change if required.

Instead of editing this main file, we create a new file and add our own settings.

Create a file named custom-values.yaml and add the following contents to enable the Cert Manager Custom Resource Definitions (CRDs).

```yaml
crds:
  enabled: true
  keep: false
```

The modifications we made are:

- crds.enabled: true - Installs the CRDs that Cert Manager requires.

- crds.keep: false - Removes the CRDs when we uninstall Cert Manager. Deleting the CRDs also deletes every Cert Manager resource of those types, such as Certificates and Issuers.
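If you prefer to create custom-values.yaml from the terminal, a heredoc works the same way as the manifests later in this guide. A minimal sketch:

```bash
# Write the Helm overrides described above into custom-values.yaml.
cat << EOF > custom-values.yaml
crds:
  enabled: true
  keep: false
EOF
```

Alternatively, Helm's --set flag can pass the same keys without a file, for example `--set crds.enabled=true --set crds.keep=false` on the helm install command.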

After making the changes, we can install Cert Manager using the following command.

```bash
helm install \
  cert-manager jetstack/cert-manager \
  --namespace cert-manager \
  --create-namespace \
  --values custom-values.yaml
```

Step 2: Validating the Cert Manager Installation

Once the installation is complete, verify the Cert Manager resources and ensure everything is running as expected.

```bash
kubectl get all -n cert-manager
```

You will get the following output.

```
NAME                                           READY   STATUS    RESTARTS   AGE
pod/cert-manager-55c5fdb5f5-hfp7t              1/1     Running   0          87s
pod/cert-manager-cainjector-5dc4bf4cdf-fgwtz   1/1     Running   0          87s
pod/cert-manager-webhook-6ff7dcb868-4fkbp      1/1     Running   0          87s

NAME                              TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)            AGE
service/cert-manager              ClusterIP   10.100.223.113   <none>        9402/TCP           87s
service/cert-manager-cainjector   ClusterIP   10.100.192.136   <none>        9402/TCP           87s
service/cert-manager-webhook      ClusterIP   10.100.161.169   <none>        443/TCP,9402/TCP   87s

NAME                                      READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/cert-manager              1/1     1            1           88s
deployment.apps/cert-manager-cainjector   1/1     1            1           88s
deployment.apps/cert-manager-webhook      1/1     1            1           88s

NAME                                                 DESIRED   CURRENT   READY   AGE
replicaset.apps/cert-manager-55c5fdb5f5              1         1         1       88s
replicaset.apps/cert-manager-cainjector-5dc4bf4cdf   1         1         1       88s
replicaset.apps/cert-manager-webhook-6ff7dcb868      1         1         1       88s
```

We also need to check whether the Custom Resource Definitions are deployed.

```bash
kubectl get crds | grep -iE "cert-manager"
```

You will get the following output.

```
certificaterequests.cert-manager.io   2025-11-04T10:42:30Z
certificates.cert-manager.io          2025-11-04T10:42:30Z
challenges.acme.cert-manager.io       2025-11-04T10:42:31Z
clusterissuers.cert-manager.io        2025-11-04T10:42:32Z
issuers.cert-manager.io               2025-11-04T10:42:32Z
orders.acme.cert-manager.io           2025-11-04T10:42:30Z
```

The following is an explanation of the Custom Resources.

- certificaterequests - Represent a single request to an issuer for a signed certificate and track its progress and status.

- certificates - Define the desired certificate (DNS names, issuer, and target Secret) and track its status. The signed certificate itself is stored in a Secret.

- challenges - Represent the ACME challenges used to verify that the requester controls the domain name.

- clusterissuers - Certificate issuers available to the entire cluster.

- issuers - Certificate issuers scoped to a single namespace.

- orders - Track the ACME orders placed with the Certificate Authority.
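Both checks above can also be scripted, for example in a CI pipeline. The sketch below assumes you pipe in the output of `kubectl get pods -n cert-manager --no-headers` and `kubectl get crds --no-headers`; all_pods_ready and check_cert_manager_crds are hypothetical helpers, not part of kubectl.

```bash
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin and
# fails if there are no Pods or any Pod is not Running with all containers ready.
all_pods_ready() {
  awk '
    {
      n++
      split($2, r, "/")                      # READY column, e.g. "1/1"
      if ($3 != "Running" || r[1] != r[2]) { # STATUS column and ready count
        print "not ready: " $1
        bad = 1
      }
    }
    END { if (n == 0 || bad) exit 1; exit 0 }
  '
}

# Hypothetical helper: reads `kubectl get crds --no-headers` output on stdin and
# fails, naming each one, if any of the six Cert Manager CRDs is missing.
check_cert_manager_crds() {
  installed=$(awk '{ print $1 }')            # keep only the CRD name column
  missing=0
  for crd in certificaterequests.cert-manager.io \
             certificates.cert-manager.io \
             challenges.acme.cert-manager.io \
             clusterissuers.cert-manager.io \
             issuers.cert-manager.io \
             orders.acme.cert-manager.io; do
    if ! printf '%s\n' "$installed" | grep -qxF "$crd"; then
      echo "missing: $crd"
      missing=1
    fi
  done
  return "$missing"
}
```

Usage: `kubectl get pods -n cert-manager --no-headers | all_pods_ready && kubectl get crds --no-headers | check_cert_manager_crds`. For the Pods alone, `kubectl wait --for=condition=Available deployment --all -n cert-manager --timeout=120s` is a built-in alternative.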

Now that Cert Manager is ready, the next step is to map the Load Balancer to Route53.

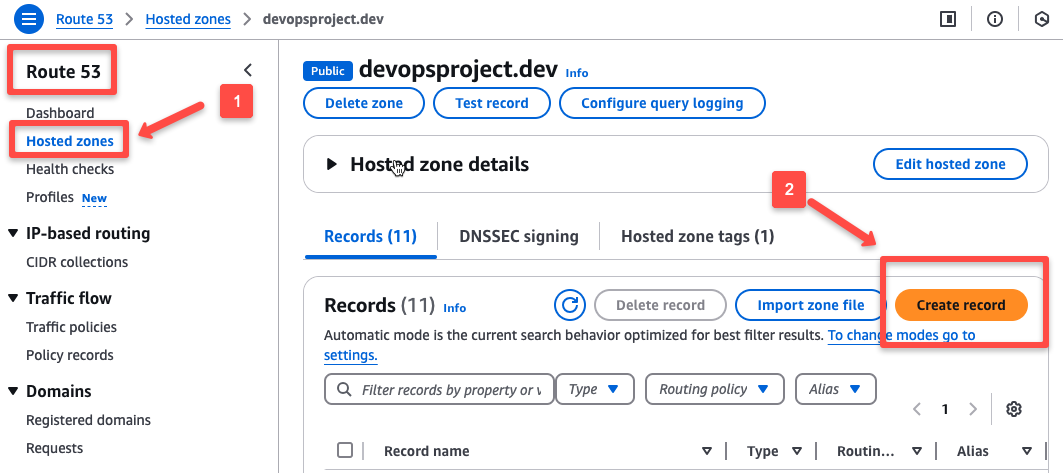

Map the AWS ELB to Route 53

Assuming you have already installed the Ingress Controller on EKS, it should have created a Load Balancer.

If you have not set up the Ingress Controller yet, refer to Setup Nginx Ingress Controller On Kubernetes.

Now, we are going to map that Load Balancer DNS to Route53 for public DNS resolution.

Open the Route53 service in AWS and click "Create record".

Fill in the record with the following details:

- Record name - The prefix for the domain name. Here, I am using nginx, so the domain name will be nginx.devopsproject.dev.

- Record type - A, to map the domain name to the Load Balancer DNS.

- Alias - Enable "Alias" to select the Load Balancer type, region, and DNS address.
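If you prefer the AWS CLI to the console, the same alias record can be created with `aws route53 change-resource-record-sets`, which takes a JSON change batch. The sketch below only prints that JSON. alias_change_batch is a hypothetical helper, and the Load Balancer DNS name and its canonical hosted zone ID are values you must look up for your own Load Balancer (this zone ID belongs to the Load Balancer, not to your domain).

```bash
# Hypothetical helper: prints a Route53 change batch that UPSERTs an alias
# A record pointing a hostname at a load balancer.
#   $1  record name, e.g. nginx.devopsproject.dev
#   $2  the load balancer's DNS name
#   $3  the load balancer's canonical hosted zone ID
alias_change_batch() {
  name=$1 lb_dns=$2 lb_zone_id=$3
  cat << EOF
{
  "Changes": [
    {
      "Action": "UPSERT",
      "ResourceRecordSet": {
        "Name": "${name}",
        "Type": "A",
        "AliasTarget": {
          "HostedZoneId": "${lb_zone_id}",
          "DNSName": "${lb_dns}",
          "EvaluateTargetHealth": false
        }
      }
    }
  ]
}
EOF
}
```

Save the output to a file such as alias.json and pass it with `--change-batch file://alias.json`, together with `--hosted-zone-id` set to your domain's hosted zone. For Network or Application Load Balancers, `aws elbv2 describe-load-balancers` reports the CanonicalHostedZoneId; for Classic Load Balancers, `aws elb describe-load-balancers` reports CanonicalHostedZoneNameID.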

To ensure the mapping is done properly, make a DNS query for the domain name.

```
$ dig nginx.devopsproject.dev

; <<>> DiG 9.10.6 <<>> nginx.devopsproject.dev
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 55696
;; flags: qr rd ra; QUERY: 1, ANSWER: 2, AUTHORITY: 4, ADDITIONAL: 1

;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx.devopsproject.dev.       IN      A

;; ANSWER SECTION:
nginx.devopsproject.dev. 60     IN      A       44.252.195.62
nginx.devopsproject.dev. 60     IN      A       34.218.125.185

;; AUTHORITY SECTION:
devopsproject.dev.      172800  IN      NS      ns-1127.awsdns-12.org.
devopsproject.dev.      172800  IN      NS      ns-1958.awsdns-52.co.uk.
devopsproject.dev.      172800  IN      NS      ns-418.awsdns-52.com.
devopsproject.dev.      172800  IN      NS      ns-966.awsdns-56.net.

;; Query time: 22 msec
;; SERVER: fe80::1%6#53(fe80::1%6)
;; WHEN: Wed Nov 05 17:55:16 IST 2025
;; MSG SIZE  rcvd: 224
```

The query resolves to the IP addresses of the Load Balancer, which confirms that the DNS mapping works.
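To automate this check, you can compare the addresses your domain resolves to with those of the Load Balancer's own DNS name. same_ip_set is a hypothetical helper. It compares two newline-separated address lists as sets, because DNS servers often rotate the order of answers. AWS Load Balancer IP addresses can change over time, so treat a mismatch as a prompt to look closer rather than proof of a broken record.

```bash
# Hypothetical helper: succeeds if two newline-separated lists of IP addresses
# are non-empty and contain the same entries, ignoring order.
same_ip_set() {
  [ -n "$1" ] || return 1
  left=$(printf '%s\n' "$1" | sort)
  right=$(printf '%s\n' "$2" | sort)
  [ "$left" = "$right" ]
}
```

Usage: `same_ip_set "$(dig +short nginx.devopsproject.dev)" "$(dig +short <load-balancer-dns-name>)" && echo "DNS mapping OK"`.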

Now, we need a demo application for testing.

Install a Demo Application

I am deploying an Nginx web server for testing.
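The two imperative kubectl commands that follow are the quickest way to create the test application. If you would rather keep it as a manifest, here is a sketch of a roughly equivalent declarative version. The file name web-server.yaml is an assumption. The manifest relies on `kubectl create deployment` labelling the Pods with app=web-server, which is the label the Service then selects.

```bash
# Declarative sketch of the demo Deployment and its Service.
cat << EOF > web-server.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-server
  labels:
    app: web-server
spec:
  replicas: 1
  selector:
    matchLabels:
      app: web-server
  template:
    metadata:
      labels:
        app: web-server
    spec:
      containers:
      - name: nginx
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: web-svc
spec:
  selector:
    app: web-server
  ports:
  - port: 80
    targetPort: 80
EOF
```

Choose one approach: either apply this file with `kubectl apply -f web-server.yaml`, or run the two commands.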

```bash
kubectl create deployment web-server --image nginx --port 80
```

We need to create a Service for this Deployment.

```bash
kubectl expose deployment web-server --name web-svc --port 80 --target-port 80
```

Once the deployment is completed, ensure the web server is running.

```
$ kubectl get po,svc

NAME                             READY   STATUS    RESTARTS   AGE
pod/web-server-6d7585d7f-z869v   1/1     Running   0          4m59s

NAME                 TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/kubernetes   ClusterIP   10.100.0.1      <none>        443/TCP   57m
service/web-svc      ClusterIP   10.100.174.18   <none>        80/TCP    37s
```

Our test deployment is ready. Next, we need to configure certificate creation.

Create a Cluster Issuer

Here, we need to choose who should provide our certificates.

For this, we will use the ClusterIssuer CRD to request certificates and Let's Encrypt as the Certificate Authority to provide them.

Copy and paste the following contents into your terminal to create a manifest for the ClusterIssuer object.

```bash
cat << EOF > cluster-issuer.yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-dev
spec:
  acme:
    server: https://acme-v02.api.letsencrypt.org/directory
    email: devopscube@gmail.com
    privateKeySecretRef:
      name: letsencrypt-prod
    solvers:
    - http01:
        ingress:
          class: nginx
EOF
```

This issuer works for the entire cluster, so you can use it from any namespace.
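While you are still testing, you may prefer a second issuer that points at Let's Encrypt's staging environment. Staging has much higher rate limits than production, but the certificates it issues are not trusted by browsers. The sketch below assumes the hypothetical name letsencrypt-staging for both the issuer and its account-key Secret. Only the server URL and these names differ from the manifest above.

```bash
# Sketch of a staging ClusterIssuer (hypothetical name: letsencrypt-staging).
cat << EOF > cluster-issuer-staging.yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: letsencrypt-staging
spec:
  acme:
    server: https://acme-staging-v02.api.letsencrypt.org/directory
    email: devopscube@gmail.com
    privateKeySecretRef:
      name: letsencrypt-staging
    solvers:
    - http01:
        ingress:
          class: nginx
EOF
```

Apply it with `kubectl apply -f cluster-issuer-staging.yaml` if you want both issuers available. When staging works, point the Ingress annotation at letsencrypt-dev to get a browser-trusted certificate.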

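Replace the email with your own. Let's Encrypt registers it with your ACME account. Let's Encrypt stopped sending certificate expiration reminder emails in 2025, so rely on Cert Manager's automatic renewal rather than email notices.

You can give privateKeySecretRef.name any name. Cert Manager creates a Secret with that name to store the private key of your Let's Encrypt account (ACME account).

spec.acme.solvers.http01.ingress.class: nginx refers to the default Ingress Class of the Nginx Ingress Controller, so the temporary Ingress that Cert Manager creates to solve the HTTP-01 challenge is served by that controller.

```bash
kubectl apply -f cluster-issuer.yaml
```

We have to ensure that the object is successfully created.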

```
$ kubectl get clusterissuers

NAME              READY   AGE
letsencrypt-dev   True    28s
```

Create an Ingress Object with the Certificate Issuer

Now, we need to create an Ingress object that references the issuer, so that a TLS certificate is created for the Ingress when it is deployed.

```bash
cat << EOF > web-ingress.yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-server-ingress
  namespace: default
  annotations:
    nginx.ingress.kubernetes.io/force-ssl-redirect: "true"
    nginx.ingress.kubernetes.io/backend-protocol: "HTTP"
    nginx.ingress.kubernetes.io/ssl-passthrough: "false"
    cert-manager.io/cluster-issuer: "letsencrypt-dev"
spec:
  ingressClassName: nginx
  rules:
  - host: nginx.devopsproject.dev
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web-svc
            port:
              number: 80
  tls:
  - hosts:
    - nginx.devopsproject.dev
    secretName: nginx-devopsproject-tls
EOF
```

To integrate Cert Manager with the Ingress, we need to add the following.

- The annotation cert-manager.io/cluster-issuer: letsencrypt-dev under metadata.annotations, which tells Cert Manager which ClusterIssuer to use.

- In the tls section, the hostname and a name for the Secret.

Cert Manager uses this Secret name to create the TLS Secret once it obtains the certificate from the Let's Encrypt issuer.

```bash
kubectl apply -f web-ingress.yaml
```

The TLS certificate will be generated only after the Ingress object is deployed.

Ensure that the Ingress object is created and verify its status.

```
$ kubectl get ingress

NAME                 CLASS   HOSTS                     ADDRESS                                                                         PORTS     AGE
web-server-ingress   nginx   nginx.devopsproject.dev   a090d3d63364645a08b739a596fd6095-b607c436b02c89d4.elb.us-west-2.amazonaws.com   80, 443   16h
```

Each time you create an Ingress object for an application with the cert-manager.io/cluster-issuer annotation and a tls section, Cert Manager automatically obtains a TLS certificate and stores it in the Secret that the Ingress references.
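The annotation works because Cert Manager creates a Certificate resource for the Ingress on your behalf, and you will see that resource with kubectl describe certificate shortly. If you prefer to manage the resource yourself, here is a sketch of an explicit Certificate for the same host. The file name nginx-certificate.yaml is an assumption. Use it instead of the cert-manager.io/cluster-issuer annotation, not alongside it, and keep the Ingress's tls section pointing at the same secretName.

```bash
# Sketch of an explicit Certificate equivalent to what the annotation requests.
cat << EOF > nginx-certificate.yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  name: nginx-devopsproject-tls
  namespace: default
spec:
  secretName: nginx-devopsproject-tls   # Secret the Ingress tls section uses
  dnsNames:
  - nginx.devopsproject.dev
  issuerRef:
    name: letsencrypt-dev
    kind: ClusterIssuer
    group: cert-manager.io
EOF
```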

Now, we can check the Secret, because the TLS certificate is stored as a Secret in the cluster.

```
$ kubectl get secret

NAME                      TYPE                DATA   AGE
nginx-devopsproject-tls   kubernetes.io/tls   2      16h
```

If you want more details about the generated certificate, describe the Certificate custom resource.

```
$ kubectl describe certificate nginx-devopsproject-tls

Name:         nginx-devopsproject-tls
Namespace:    default
Labels:       <none>
Annotations:  <none>
API Version:  cert-manager.io/v1
Kind:         Certificate
Metadata:
  Creation Timestamp:  2025-11-05T12:08:52Z
  Generation:          1
  Owner References:
    API Version:           networking.k8s.io/v1
    Block Owner Deletion:  true
    Controller:            true
    Kind:                  Ingress
    Name:                  web-server-ingress
    UID:                   8c7bb1a7-fca9-4593-9738-ea5786ab8669
  Resource Version:        87098
  UID:                     5e27ca7a-8cd6-4a7c-a123-fdeadfc9bb4b
Spec:
  Dns Names:
    nginx.devopsproject.dev
  Issuer Ref:
    Group:      cert-manager.io
    Kind:       ClusterIssuer
    Name:       letsencrypt-dev
  Secret Name:  nginx-devopsproject-tls
  Usages:
    digital signature
    key encipherment
Status:
  Conditions:
    Last Transition Time:  2025-11-05T12:08:54Z
    Message:               Certificate is up to date and has not expired
    Observed Generation:   1
    Reason:                Ready
    Status:                True
    Type:                  Ready
  Not After:               2026-02-03T11:10:22Z
  Not Before:              2025-11-05T11:10:23Z
  Renewal Time:            2026-01-04T11:10:22Z
  Revision:                1
Events:                    <none>
```

We can now check our application with the hostname and ensure the TLS certificate is attached.

Verify the TLS Attachment

To verify this, open any browser and enter the hostname as the URL (e.g., https://nginx.devopsproject.dev).

TLS termination happens in the Ingress Controller when external traffic reaches it.

Conclusion

Cert Manager tracks the certificates it has created and renews them before they expire.

Let's Encrypt certificates are valid for 90 days. If you want to change when Cert Manager renews them, you can set renewBefore on the Certificate, or the cert-manager.io/renew-before annotation on the Ingress.
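When renewBefore is not set, Cert Manager renews a certificate once one third of its lifetime remains. For a 90-day certificate that is 30 days before Not After, which matches the Renewal Time in the describe output above. The arithmetic can be sketched in shell. default_renewal_epoch is a hypothetical helper that works on Unix epoch seconds.

```bash
# Hypothetical helper: given notBefore ($1) and notAfter ($2) as Unix epoch
# seconds, print when Cert Manager's default policy would renew, i.e. when
# one third of the lifetime remains. Integer division, so the result may
# differ from Cert Manager's own value by up to a second.
default_renewal_epoch() {
  lifetime=$(( $2 - $1 ))
  echo $(( $2 - lifetime / 3 ))
}

# Example: a 90-day certificate issued at epoch 0.
not_after=$(( 90 * 24 * 60 * 60 ))                 # 7776000 seconds
renew_at=$(default_renewal_epoch 0 "$not_after")
echo "renew after $(( renew_at / 86400 )) days"    # prints: renew after 60 days
```

On Linux with GNU date, you can convert the timestamps from the describe output with, for example, `date -u -d 2025-11-05T11:10:23Z +%s`. Feeding in the Not Before and Not After values above gives a time on 2026-01-04 that matches the Renewal Time to within a second.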

Cert Manager can also create self-signed certificates on its own, without an external Certificate Authority, by using a SelfSigned issuer. To learn more, refer to the official documentation.
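For example, a cluster-wide self-signed issuer needs only an empty selfSigned block. This is a minimal sketch with the hypothetical name selfsigned-issuer. Browsers do not trust the certificates it issues, so it suits internal testing rather than public sites.

```bash
# Sketch of a cluster-wide self-signed issuer (hypothetical name).
cat << EOF > selfsigned-issuer.yaml
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: selfsigned-issuer
spec:
  selfSigned: {}
EOF
```

After applying it, reference it from an Ingress with cert-manager.io/cluster-issuer: selfsigned-issuer, just as we did with letsencrypt-dev.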