In this blog, you’ll learn what a Kubeconfig file is and how to create and use one to connect to a Kubernetes cluster with hands-on examples.

By the end of this guide, you will learn:

- What a kubeconfig file is

- How to use it to connect to Kubernetes clusters

- How to create a custom kubeconfig file

- How to merge multiple kubeconfig files

- How to secure kubeconfig files

What is a Kubeconfig file?

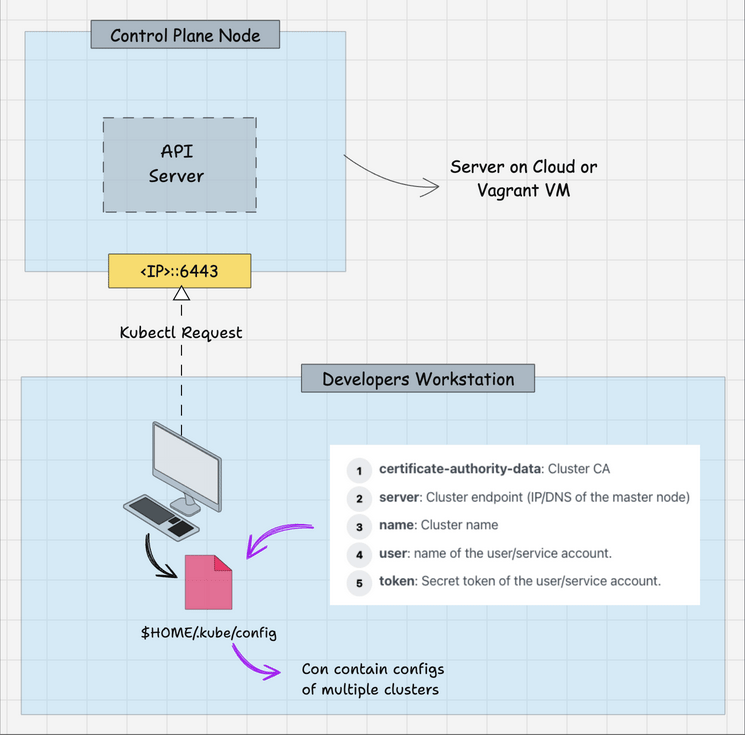

A Kubeconfig is a YAML file that holds the Kubernetes cluster details, certificates, and secret tokens needed to authenticate to the cluster. You might get this config file directly from the cluster administrator or from a cloud platform if you are using a managed Kubernetes cluster.

When you use kubectl, it uses the information in the kubeconfig file to connect to the Kubernetes cluster API. The default location of the Kubeconfig file is $HOME/.kube/config.

It is not just users: any cluster component that needs to authenticate to the Kubernetes API server needs a kubeconfig file.

For example, Kubernetes cluster components like the controller manager, scheduler, and kubelet use kubeconfig files to interact with the API server.

On the control plane nodes, these component kubeconfig files are present in the /etc/kubernetes folder (.conf files).


Example Kubeconfig File

Here is an example of a Kubeconfig. It needs the following key information to connect to a Kubernetes cluster.

- certificate-authority-data: Cluster CA certificate (base64 encoded)

- server: Cluster endpoint (IP/DNS of the API server)

- name: Cluster name

- user: Name of the user/service account

- token: Secret token of the user/service account

apiVersion: v1
clusters:
- cluster:
    certificate-authority-data: <ca-data-here>
    server: https://your-k8s-cluster.com
  name: <cluster-name>
contexts:
- context:
    cluster: <cluster-name>
    user: <cluster-name-user>
  name: <cluster-name>
current-context: <cluster-name>
kind: Config
preferences: {}
users:
- name: <cluster-name-user>
  user:
    token: <secret-token-here>
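Before using a kubeconfig you have been handed, a quick text check can show whether the key fields listed above are present. The following is a minimal sketch, assuming a token-based kubeconfig like the example. The function name missing_kubeconfig_fields is hypothetical, and the check is a plain grep, not a YAML parser, so it only confirms that the keys appear somewhere in the file.

```shell
# Hypothetical helper: print the key fields that do not appear in a kubeconfig.
# Assumes a token-based kubeconfig; certificate-based files will report "token".
missing_kubeconfig_fields() {
  local file="$1" key missing=""
  for key in "server:" "certificate-authority-data:" "current-context:" "token:"; do
    if ! grep -q -- "$key" "$file"; then
      missing="$missing ${key%:}"
    fi
  done
  # Print the missing field names separated by spaces (empty when none are missing)
  echo "${missing# }"
}

# Usage (the path is a placeholder):
#   missing_kubeconfig_fields /path/to/kubeconfig
```

An empty result means all four keys were found in the file.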

Kubeconfig File Usage

You can point kubectl at a kubeconfig in three ways, and each has its own precedence.

Here is the precedence, from highest to lowest.

- Command-Line Reference: A kubeconfig passed to kubectl with the --kubeconfig flag overrides all other configs. It has the highest precedence.

- Environment Variable: The KUBECONFIG env variable overrides the default config file and its current context.

- Kubectl Context: The current context in the default $HOME/.kube/config file has the lowest precedence. It is used only when neither the flag nor the env variable is set.
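The lookup order (the --kubeconfig flag first, then the KUBECONFIG variable, then the default file) can be sketched as a small shell function. This is an illustration of the order only, not how kubectl is implemented, and resolve_kubeconfig is a hypothetical name.

```shell
# Hypothetical helper mirroring the kubeconfig lookup order.
# Usage: resolve_kubeconfig [path-that-would-be-passed-to---kubeconfig]
resolve_kubeconfig() {
  local flag_path="${1:-}"
  if [ -n "$flag_path" ]; then
    echo "$flag_path"            # 1. explicit --kubeconfig value wins
  elif [ -n "${KUBECONFIG:-}" ]; then
    echo "$KUBECONFIG"           # 2. otherwise the KUBECONFIG env variable
  else
    echo "$HOME/.kube/config"    # 3. otherwise the default location
  fi
}
```

Calling resolve_kubeconfig with no argument and no KUBECONFIG set prints the default path under your home directory.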

Now let's take a look at all three ways to use the Kubeconfig file.

Method 1: Using Kubeconfig with Kubectl Context

To connect to the Kubernetes cluster, the basic prerequisite is the kubectl CLI. If you don't have the CLI installed, follow the official kubectl installation instructions.

Now follow the steps given below to use the kubeconfig file to interact with the cluster.

Step 1: Move kubeconfig to .kube directory.

Kubectl reads the cluster details from the kubeconfig file. By default, it looks for a file named config in the $HOME/.kube directory.

Let's move the kubeconfig file to that location. Replace /path/to/kubeconfig with the current path of your kubeconfig. If you already have a $HOME/.kube/config file, back it up first, because this command overwrites it.

mkdir -p ~/.kube
mv /path/to/kubeconfig ~/.kube/config

Step 2: List all cluster contexts

A kubeconfig file can hold any number of contexts, each with a unique name (typically the name of the cluster). You can validate the Kubeconfig file by listing its contexts with the following command.

kubectl config get-contexts -o=name

Step 3: Switch Kubernetes context

Now set the current context to the cluster you want to use. Replace <cluster-name> with a context name from the list.

kubectl config use-context <cluster-name>

For example,

kubectl config use-context my-dev-cluster

Step 4: Validate the Kubernetes cluster connectivity

To validate the cluster connectivity, you can execute the following kubectl command to list the cluster nodes.

kubectl get nodes

Method 2: Using KUBECONFIG environment variable

You can set the KUBECONFIG environment variable to the kubeconfig file path to connect to the cluster. The variable must be set in every terminal session where you run kubectl. When it is set, it overrides the default $HOME/.kube/config file.

You can set the variable using the following command, where dev_cluster_config is the kubeconfig file name.

export KUBECONFIG=$HOME/.kube/dev_cluster_config

Method 3: Using Kubeconfig File With Kubectl

You can pass the Kubeconfig file to the kubectl command with the --kubeconfig flag to override both the default config and the KUBECONFIG env variable.

Here is an example to get nodes.

kubectl get nodes --kubeconfig=$HOME/.kube/dev_cluster_config

You can also set the KUBECONFIG variable for a single command,

KUBECONFIG=$HOME/.kube/dev_cluster_config kubectl get nodes

Merge Multiple Kubeconfig Files

Usually, when you work with multiple Kubernetes clusters, all the cluster contexts get added to a single file.

However, there are situations where you will be given a Kubeconfig file with limited access to connect to prod or non-prod servers.

To manage all clusters effectively using a single config, you can merge the other Kubeconfig files to the default $HOME/.kube/config file using the supported kubectl command.

Let's assume you have three Kubeconfig files in the $HOME/.kube/ directory.

- config (default kubeconfig)

- dev_config

- test_config

You can merge all three configs into a single file using the following command. Ensure you are running the command from the $HOME/.kube directory.

KUBECONFIG=config:dev_config:test_config kubectl config view --merge --flatten > config.new

The above command creates a merged config named config.new.

Now rename the old $HOME/.kube/config file.

mv $HOME/.kube/config $HOME/.kube/config.old

Rename config.new to config.

mv $HOME/.kube/config.new $HOME/.kube/config

To verify the configuration, try listing the contexts from the config.

kubectl config get-contexts -o name

If you want to view only the configuration used by the current context, you can use the following minify command.

kubectl config view --minify

Delete Cluster Context From Kubeconfig

There are scenarios where a context stays in the kubeconfig file after its cluster has been deleted.

In this scenario, you might want to remove the context from the Kubeconfig file. Here is how you can do it.

First, list the contexts.

kubectl config get-contexts -o=name

Get the context name and delete it using the following command. Replace <context-name> with your context name.

kubectl config delete-context <context-name>

You can then switch to a context of an existing cluster using,

kubectl config use-context <context-name>

Creating a Kubeconfig File

There are scenarios in real-world project environments where you need to provide Kubeconfig files to developers or other teams to connect to clusters for specific use cases. The access could be for users or for applications.

Now we will look at creating Kubeconfig files using the service account method. A service account is the account type that the Kubernetes API manages natively.

A kubeconfig needs the following important details.

- Cluster endpoint (IP or DNS name of the cluster)

- Cluster CA Certificate

- Cluster name

- Service account user name

- Service account token

For this demo, I am creating a service account with clusterRole that has limited access to cluster-wide resources. You can also create a normal role and Rolebinding that limits the user's access to a specific namespace.

Step 1: Create a Service Account

The service account name will be the user name in the Kubeconfig. Here I am creating the service account in the kube-system namespace because I am using a ClusterRole. If you want to create a config that gives limited, namespace-level access, create the service account in the required namespace.

kubectl -n kube-system create serviceaccount devops-cluster-admin

Step 2: Create a Secret Object for the Service Account

From Kubernetes version 1.24, the token secret for a service account is no longer created automatically. You have to create it separately with the annotation kubernetes.io/service-account.name and the type kubernetes.io/service-account-token.

Let's create a secret named devops-cluster-admin-secret with the annotation and type.

cat << EOF | kubectl apply -f -
apiVersion: v1
kind: Secret
metadata:
  name: devops-cluster-admin-secret
  namespace: kube-system
  annotations:
    kubernetes.io/service-account.name: devops-cluster-admin
type: kubernetes.io/service-account-token
EOF

Step 3: Create a ClusterRole

Let's create a clusterRole with limited privileges to cluster objects. You can add the required object access as per your requirements. Refer to the service account with clusterRole access blog for more information.

If you want to create a namespace-scoped role, refer to creating service account with role.

Execute the following command to create the clusterRole.

cat << EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  name: devops-cluster-admin
rules:
- apiGroups: [""]
  resources:
  - nodes
  - nodes/proxy
  - services
  - endpoints
  - pods
  verbs: ["get", "list", "watch"]
# Ingress is served from networking.k8s.io; the extensions group no longer serves it since Kubernetes 1.22
- apiGroups:
  - networking.k8s.io
  resources:
  - ingresses
  verbs: ["get", "list", "watch"]
EOF

Step 4: Create ClusterRoleBinding

The following YAML is a ClusterRoleBinding that binds the devops-cluster-admin service account with the devops-cluster-admin clusterRole.

cat << EOF | kubectl apply -f -
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: devops-cluster-admin
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: devops-cluster-admin
subjects:
- kind: ServiceAccount
  name: devops-cluster-admin
  namespace: kube-system
EOF

Step 5: Get all Cluster Details & Secrets

We will retrieve all the required kubeconfig details and save them in variables. Then, finally, we will substitute it directly with the Kubeconfig YAML.
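One detail worth knowing before running the commands: values under .data in a Secret are base64 encoded, which is why the token is piped through base64 --decode. The following sketch shows the round trip with a stand-in value. The string demo-token is a placeholder, not a real service account token.

```shell
# Illustration only: "demo-token" stands in for a real service account token.
encoded=$(printf '%s' 'demo-token' | base64)          # the form stored under .data.token
decoded=$(printf '%s' "$encoded" | base64 --decode)   # the form a kubeconfig expects
echo "encoded: $encoded"
echo "decoded: $decoded"
```

If the token were written to the kubeconfig without decoding, the API server would reject it.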

If you have used a different secret name, replace devops-cluster-admin-secret with your secret name.

export SA_SECRET_TOKEN=$(kubectl -n kube-system get secret/devops-cluster-admin-secret -o=go-template='{{.data.token}}' | base64 --decode)
export CLUSTER_NAME=$(kubectl config current-context)
export CURRENT_CLUSTER=$(kubectl config view --raw -o=go-template='{{range .contexts}}{{if eq .name "'''${CLUSTER_NAME}'''"}}{{ index .context "cluster" }}{{end}}{{end}}')
export CLUSTER_CA_CERT=$(kubectl config view --raw -o=go-template='{{range .clusters}}{{if eq .name "'''${CURRENT_CLUSTER}'''"}}"{{with index .cluster "certificate-authority-data" }}{{.}}{{end}}"{{ end }}{{ end }}')
export CLUSTER_ENDPOINT=$(kubectl config view --raw -o=go-template='{{range .clusters}}{{if eq .name "'''${CURRENT_CLUSTER}'''"}}{{ .cluster.server }}{{end}}{{ end }}')

Step 6: Generate the Kubeconfig With the variables.

If you execute the following command, the shell substitutes all the variables and generates a config named devops-cluster-admin-config.

cat << EOF > devops-cluster-admin-config
apiVersion: v1
kind: Config
current-context: ${CLUSTER_NAME}
contexts:
- name: ${CLUSTER_NAME}
  context:
    cluster: ${CLUSTER_NAME}
    user: devops-cluster-admin
clusters:
- name: ${CLUSTER_NAME}
  cluster:
    certificate-authority-data: ${CLUSTER_CA_CERT}
    server: ${CLUSTER_ENDPOINT}
users:
- name: devops-cluster-admin
  user:
    token: ${SA_SECRET_TOKEN}
EOF

Step 7: Validate the generated Kubeconfig

To validate the Kubeconfig, execute it with the kubectl command to see if the cluster is getting authenticated.

kubectl get nodes --kubeconfig=devops-cluster-admin-config

Additionally, other services, such as OIDC (OpenID Connect), can be used to manage users and create kubeconfig files that limit access to the cluster based on specific security requirements.
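Before sharing the file generated in Step 6, it can help to confirm that none of the variables expanded to an empty string, which happens silently if one of the export commands failed. The following is a minimal sketch. The function name empty_kubeconfig_fields is hypothetical, and the check is a line-based grep that only looks at the scalar fields used in this guide.

```shell
# Hypothetical helper: print (with line numbers) kubeconfig fields whose value is
# empty or "", which usually means a shell variable was unset at generation time.
# Exits non-zero when no empty field is found, following grep's convention.
empty_kubeconfig_fields() {
  grep -nE '^[[:space:]-]*(name|server|token|certificate-authority-data|current-context):[[:space:]]*("")?[[:space:]]*$' "$1"
}

# Usage (file name taken from Step 6):
#   if empty_kubeconfig_fields devops-cluster-admin-config; then
#     echo "some variables were empty; re-run Step 5" >&2
#   fi
```

Mapping keys such as cluster: and user: are left out of the pattern on purpose, because they legitimately have no value on the same line.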

Security Best Practices

Follow these security best practices for Kubeconfig files.

Securing Kubeconfig Files

Your kubeconfig file holds sensitive details like tokens and certificates, so it should only be accessible to you. Make sure you're the only one who can read or modify it.
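A script can check this before it uses the file. The following is a minimal sketch that reads the permission string from ls rather than relying on stat, whose flags differ between Linux and macOS. The function name is_owner_only is hypothetical.

```shell
# Hypothetical helper: succeed only if group and others have no permissions on the file.
is_owner_only() {
  # Characters 5-10 of the `ls -ld` mode string are the group and other permission bits.
  [ "$(ls -ld "$1" | cut -c5-10)" = "------" ]
}

# Usage:
#   is_owner_only "$HOME/.kube/config" || echo "kubeconfig is readable by others" >&2
```

The check passes for modes such as 600 and 400, and fails for 640 or 644.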

If the file permissions are too open, tools that read the kubeconfig, such as Helm, print a warning like the following.

WARNING: Kubernetes configuration file is group-readable. This is insecure. Location: /Users/bibinwilson/.kube/config

Using the following commands, you can set and verify restrictive permissions.

# Set restrictive permissions (owner read/write only)
chmod 600 ~/.kube/config

# Verify permissions
ls -la ~/.kube/config
# Should show: -rw------- 1 user user

# For multiple configs (matches names such as dev_config and test_config)
find ~/.kube -maxdepth 1 -type f -name "*config*" -exec chmod 600 {} \;

You can also lock down the entire .kube directory to make sure no one else can read from or write to it.

chmod 700 ~/.kube
chown -R $USER ~/.kube

Prevent accidental exposure

If you’re working in a Git repo, avoid committing sensitive kubeconfig files by adding them to the repository's .gitignore file. Run the following commands from the root of the repository.

echo ".kube/" >> .gitignore
echo "kubeconfig*" >> .gitignore

Kubeconfig File FAQs

Let's look at some of the frequently asked Kubeconfig file questions.

Where do I put the Kubeconfig file?

By default, kubectl reads the file named config in the $HOME/.kube/ folder and uses the current-context set in it. However, if you are using the KUBECONFIG environment variable or the --kubeconfig flag, you can place the kubeconfig file in a preferred folder and refer to it by its path.

Where is the Kubeconfig file located?

The default kubeconfig file is located in the .kube directory in the user home directory. That is $HOME/.kube/config.

How to manage multiple Kubeconfig files?

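You can store all the kubeconfig files in the $HOME/.kube directory and either merge them into the default config file or list them, separated by colons, in the KUBECONFIG environment variable. Then change the cluster context to connect to a specific cluster.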
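If the set of files changes often, the colon-separated KUBECONFIG value can be assembled by a small helper instead of being typed by hand. The following is a minimal sketch, assuming the directory holds only kubeconfig files. The function name kubeconfig_list and the directory $HOME/.kube/configs in the usage comment are hypothetical.

```shell
# Hypothetical helper: join every regular file in a directory into a
# colon-separated list suitable for the KUBECONFIG variable.
kubeconfig_list() {
  local dir="$1" f list=""
  for f in "$dir"/*; do
    [ -f "$f" ] || continue        # skip subdirectories such as cache/
    list="${list:+$list:}$f"       # add a colon only between entries
  done
  echo "$list"
}

# Usage (hypothetical directory that contains only kubeconfig files):
#   export KUBECONFIG=$(kubeconfig_list "$HOME/.kube/configs")
```

With the variable set this way, kubectl config get-contexts lists the contexts from all the files.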

How to create a Kubeconfig file?

To create a Kubeconfig file, you need to have the cluster endpoint details, cluster CA certificate, and authentication token. Then you need to create a YAML file of kind Config with all the cluster details.

How to use Proxy with Kubeconfig?

If you are behind a corporate proxy, you can set proxy-url: https://proxy.host:port in the cluster entry of your Kubeconfig file to connect to the cluster.
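As a sketch of where the field goes, the following writes an illustrative cluster entry to a scratch file. The cluster name dev-cluster and the proxy address proxy.example.internal:3128 are placeholders chosen for this example, so replace them with your own values.

```shell
# Write an illustrative cluster entry to a scratch file. Every name and URL
# below is a placeholder, not a value from a real cluster.
fragment=$(mktemp)
cat << 'EOF' > "$fragment"
clusters:
- name: dev-cluster
  cluster:
    server: https://your-k8s-cluster.com
    proxy-url: http://proxy.example.internal:3128
EOF
cat "$fragment"
```

The proxy-url key sits next to server, so it applies only to requests for that cluster.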

Conclusion

In this blog, we learned different ways to connect to the Kubernetes cluster using a custom Kubeconfig file.

If you are learning Kubernetes, check out the comprehensive list of Kubernetes tutorials for beginners.

If you are interested in Kubernetes certification, check out the best Kubernetes certifications guide that helps you choose the right Kubernetes certification based on your domain competencies.