In this blog, you will learn how to install and configure Istio on Kubernetes using Helm.

By the end, you will understand,

- Different Istio installation modes

- How to install Istio custom resources and the Istio daemon (Istiod)

- How to validate the setup with a demo application & canary release strategy.

- Key concepts like DestinationRule, VirtualService, and more.

Let's get started!

What is Istio?

Istio is a popular Service Mesh, an infrastructure layer that manages communication between microservices in a Kubernetes cluster. It is used in production by companies like Airbnb, Intuit, eBay, Salesforce, etc.

Everyone starting with Istio will have the following question.

Why do we need Istio when Kubernetes offers many microservices functionalities?

Well, when you have dozens or hundreds of microservices talking to each other, you face challenges that Kubernetes can't handle on its own.

Here are some of the features Kubernetes doesn't offer natively.

- Load balancing between service versions.

- Implementing Circuit breaking and retry patterns.

- Enabling automatic Mutual TLS (mTLS) between all services.

- Automatic metrics collection for all service-to-service calls

- Distributed tracing to follow requests across services and more..

Without Istio, you will have to build these features into every microservice yourself. Istio provides them at the infrastructure level using sidecar proxies (Envoy) that intercept all network traffic, without changing your application code.

Now that you have a high-level idea of the Istio service mesh, let's get started with the setup.

Setup Prerequisites

To set up Istio, you need the following.

- A Kubernetes cluster

- kubectl [Local workstation]

- Helm [Local workstation]

Let's get started with the setup.

Kubernetes Node Requirements

To have a basic Istio setup up and running, you need to have the following minimum CPU and memory resources on your worker node.

- 4 vCPU

- 16 GB RAM

This is enough for the Istio control plane, the sidecars, and a few sample apps to get started.
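To check whether your worker nodes meet these numbers, a small helper like the one below can print what each node has available. This is a minimal sketch: the function name node_capacity is made up for illustration, and it assumes kubectl is already pointed at your cluster.

```shell
# Hypothetical helper: print allocatable CPU and memory for every node.
# "Allocatable" is what Kubernetes can hand out to pods, which is
# slightly less than the raw capacity of the node.
node_capacity() {
  kubectl get nodes \
    -o custom-columns='NAME:.metadata.name,CPU:.status.allocatable.cpu,MEMORY:.status.allocatable.memory'
}
```

Run node_capacity and compare the CPU and MEMORY columns with the 4 vCPU and 16 GB RAM guideline above.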

Istio Sidecar Vs Ambient Mode

Before you start setting up Istio, you should know the following two Istio deployment modes.

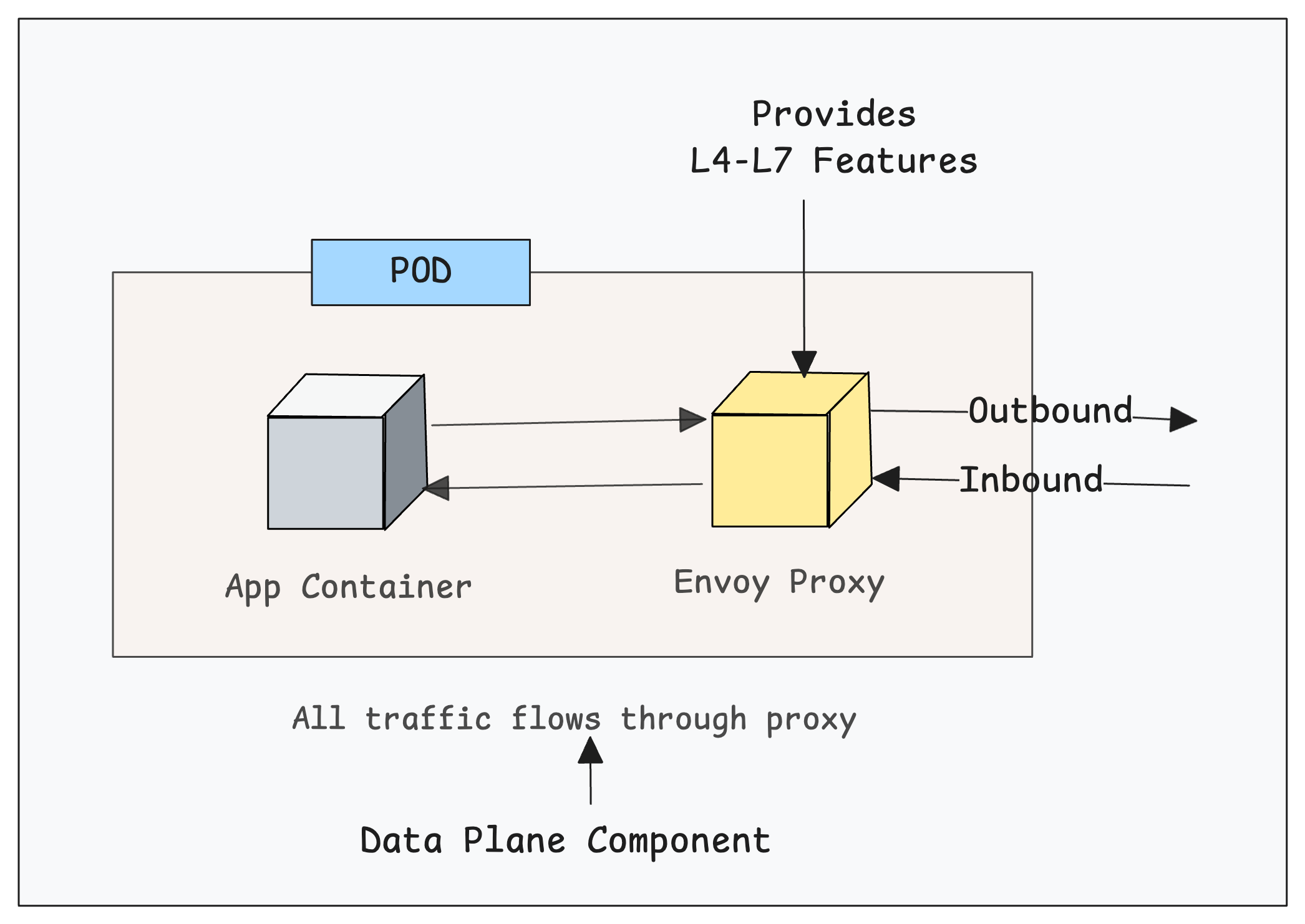

1. Sidecar Mode

In this mode, Istio deploys an Envoy proxy as a sidecar container alongside the application container in each pod. All inbound and outbound traffic flows through this sidecar proxy (the data plane component), which provides all the L4-L7 features directly within the pod.

It also means each pod requires additional CPU and memory (typically 100-500 MB of RAM) for the sidecar container.

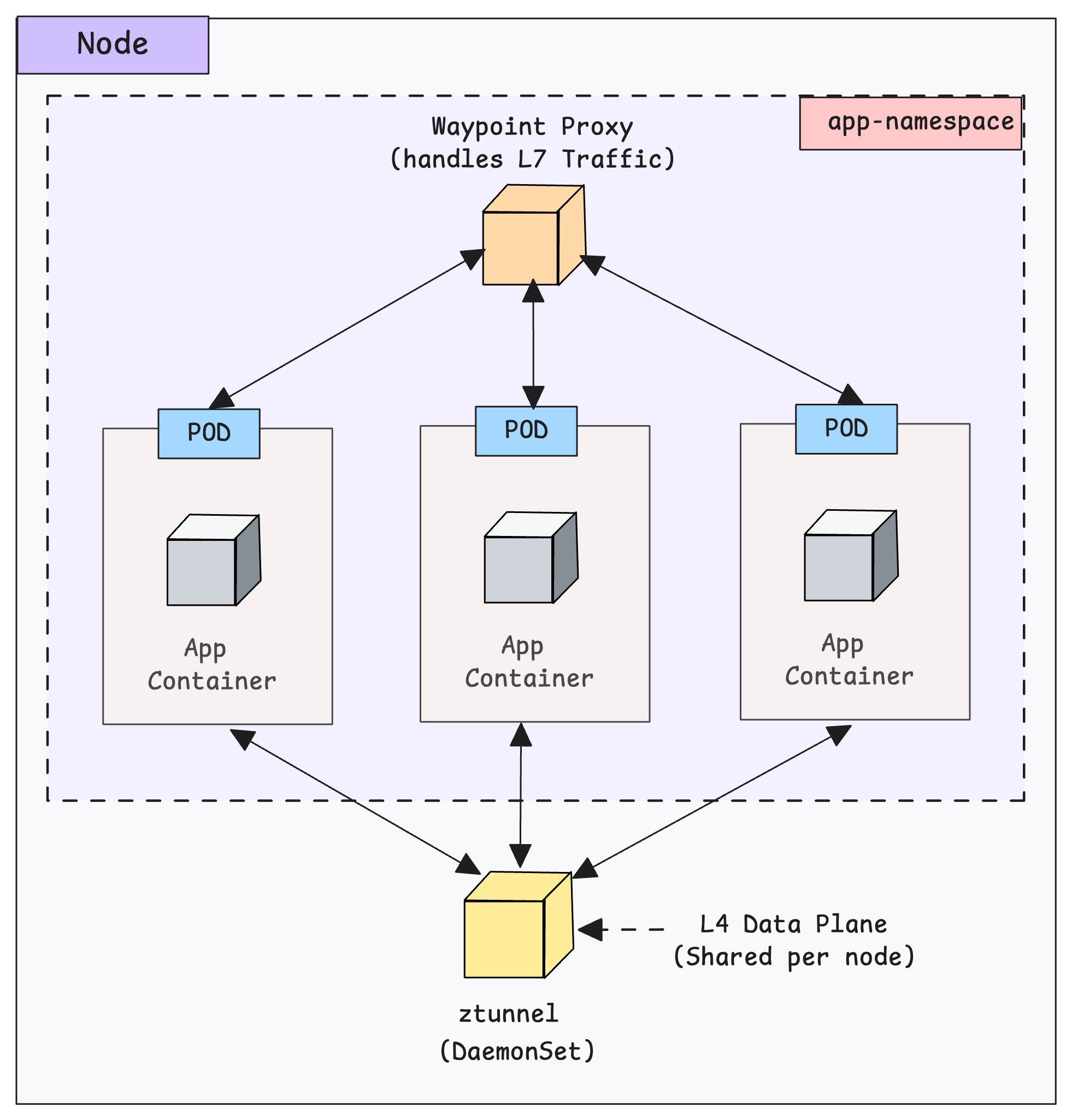

2. Ambient Mode

In this mode, no sidecars are injected into application pods.

Istio deploys a node-level proxy called ztunnel as a DaemonSet for L4 functionality (mTLS, authN/authZ at the transport layer). This means all pods on a node share the same ztunnel instance instead of running their own sidecar proxies.

If you want L7 features (HTTP, gRPC, etc.), you can run optional namespace-scoped waypoint proxies.

To put it simply, ambient mode is the more evolved concept, but it is also newer, which means it comes with more trade-offs and some gaps.

If you want to learn more about Ambient Mode, please refer to the Set Up Istio in Ambient Mode blog.

Install Istio Using Helm

Helm is the common method used in organizations to install and manage Istio. We will be using Helm to install Istio on the Kubernetes cluster.

Follow the steps given below for the installation.

Step 1: Add Helm Chart Repo

First, we need to add and update the official Istio Helm repo on our local machine.

helm repo add istio https://istio-release.storage.googleapis.com/charts
helm repo update

Now that we have added the Istio repo, we can list the charts available in it.

helm search repo istio

The output lists all the Istio-related charts and their latest versions.

$ helm search repo istio
NAME                  CHART VERSION   APP VERSION   DESCRIPTION
istio/istiod          1.28.0          1.28.0        Helm chart for istio control plane
istio/istiod-remote   1.23.6          1.23.6        Helm chart for a remote cluster using an extern...
istio/ambient         1.28.0          1.28.0        Helm umbrella chart for ambient
istio/base            1.28.0          1.28.0        Helm chart for deploying Istio cluster resource...
istio/cni             1.28.0          1.28.0        Helm chart for istio-cni components
istio/gateway         1.28.0          1.28.0        Helm chart for deploying Istio gateways
istio/ztunnel         1.28.0          1.28.0        Helm chart for istio ztunnel components

We will not use all of these charts, only the ones required for a sidecar mode implementation.

Step 2: Install Istio CRDs

Istio adds many custom features to Kubernetes like traffic routing, mTLS, gateways, sidecar rules, policies, and more.

These Istio features do not exist in Kubernetes by default. To support them, Istio defines them as Custom Resource Definitions (CRDs).

We can install the CRDs from the Istio chart list. We need to choose the istio/base chart to set up Istio in sidecar mode.

Execute the following command to install istio-base.

helm install istio-base istio/base -n istio-system --set defaultRevision=default --create-namespace

You should see a success message like the following.

NAME: istio-base
LAST DEPLOYED: Sat Nov 15 14:58:26 2025
NAMESPACE: istio-system
STATUS: deployed
REVISION: 1
TEST SUITE: None
NOTES:
Istio base successfully installed!

Once the CRDs are installed, the Kubernetes API server supports the Istio resource types.

We can list the Istio CRDs using the following command.

kubectl get crds | grep istio

You will get the following output. It contains CRDs related to security, traffic management, telemetry, extensions, and workloads.

$ kubectl get crds | grep istio
authorizationpolicies.security.istio.io    2025-11-15T06:20:10Z
destinationrules.networking.istio.io       2025-11-15T06:20:10Z
envoyfilters.networking.istio.io           2025-11-15T06:20:10Z
gateways.networking.istio.io               2025-11-15T06:20:10Z
peerauthentications.security.istio.io      2025-11-15T06:20:10Z
proxyconfigs.networking.istio.io           2025-11-15T06:20:10Z
requestauthentications.security.istio.io   2025-11-15T06:20:10Z
serviceentries.networking.istio.io         2025-11-15T06:20:10Z
sidecars.networking.istio.io               2025-11-15T06:20:10Z
telemetries.telemetry.istio.io             2025-11-15T06:20:10Z
virtualservices.networking.istio.io        2025-11-15T06:20:10Z
wasmplugins.extensions.istio.io            2025-11-15T06:20:10Z
workloadentries.networking.istio.io        2025-11-15T06:20:10Z
workloadgroups.networking.istio.io         2025-11-15T06:20:10Z

Customizing Istio Chart & Values (Optional)

In enterprise environments, you often cannot install Istio directly from the public Helm repo.

You will have to host the Helm chart in an internal repository (e.g., Nexus, Artifactory, Harbor, an S3 bucket, or a Git repo). You will also need to customize the default values to meet the project requirements.

In that case, you can download the chart locally, or store it in your own repo, using the following command.

helm pull istio/base --version 1.28.0 --untar

Once you pull the chart, the directory structure looks like this.

base
|-- Chart.yaml
|-- README.md
|-- files
|   |-- crd-all.gen.yaml
|   |-- profile-ambient.yaml
|   |-- ...
|   |-- ...
|   |-- ...
|   |-- profile-remote.yaml
|   `-- profile-stable.yaml
|-- templates
|   |-- NOTES.txt
|   |-- crds.yaml
|   |-- defaultrevision-validatingadmissionpolicy.yaml
|   |-- defaultrevision-validatingwebhookconfiguration.yaml
|   |-- reader-serviceaccount.yaml
|   `-- zzz_profile.yaml
`-- values.yaml

Here, you can see the values.yaml file, which holds the modifiable parameters.

So if you want to do a custom installation, you can modify this file or create a new values file with only the required parameters.

Then run the following command to install the CRDs using the downloaded chart.

helm install istio-base ./base -n istio-system --create-namespace

Note: If you use a custom values file, add the -f flag with the path of the custom file.

Step 3: Install Istio Daemon (Istiod)

Istiod is the Istio control plane. It manages everything related to Istio, such as configuration, mTLS, service discovery, and certificate management.

Now, install Istiod using the Helm chart. For that, we need to select the istio/istiod chart from the list.

helm install istiod istio/istiod -n istio-system --wait

Wait a few seconds for the installation to complete.

This will install the Istiod control plane as a deployment and other required objects like services, configmaps, secrets, etc.

Customizing Istio Daemon Chart & Values (Optional)

For a custom installation, pull the chart locally, create a custom values file or modify the default values.yaml file, and then install from the local chart.

Use the following command to download the chart locally.

helm pull istio/istiod --version 1.28.0 --untar

The following is the structure of the chart.

istiod
|-- Chart.yaml
|-- README.md
|-- files
|   |-- gateway-injection-template.yaml
|   |-- grpc-agent.yaml
|   |-- grpc-simple.yaml
|   |-- ...
|   |-- ...
|   |-- ...
|   |-- profile-preview.yaml
|   |-- profile-remote.yaml
|   |-- profile-stable.yaml
|   `-- waypoint.yaml
|-- templates
|   |-- NOTES.txt
|   |-- _helpers.tpl
|   |-- autoscale.yaml
|   |-- ...
|   |-- ...
|   |-- ...
|   |-- validatingwebhookconfiguration.yaml
|   |-- zzy_descope_legacy.yaml
|   `-- zzz_profile.yaml
`-- values.yaml

This is how the Istiod Helm chart is structured. The following images are used by this chart.

- busybox:1.28

- docker.io/istio/pilot:1.28.0

Then run the following command to install Istiod using the downloaded chart.

helm install istiod ./istiod -n istio-system --wait

By default, the chart lets Istiod autoscale up to a maximum of 5 replicas.
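If that ceiling does not suit your cluster, it can be overridden from a small values file. The following is a minimal sketch, not a recommended configuration: the file name istiod-values.yaml, the wrapper function, and the numbers are examples, and the key names autoscaleMin and autoscaleMax are an assumption about the chart's values layout (older chart versions nest them under pilot). Confirm them with helm show values istio/istiod --version 1.28.0 before relying on them.

```shell
# Write a small override file. The key names are an assumption:
# confirm them with `helm show values istio/istiod --version 1.28.0`.
cat <<'EOF' > istiod-values.yaml
autoscaleMin: 2   # example value: keep at least two Istiod replicas
autoscaleMax: 3   # example value: lower the default ceiling of 5
EOF

# Hypothetical wrapper: install Istiod with the override file applied.
install_istiod_custom() {
  helm install istiod istio/istiod -n istio-system --wait -f istiod-values.yaml
}
```

Keeping only the overridden keys in the file makes it easier to review what differs from the chart defaults when you upgrade later.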

Validating Istio Installation

Run the following command to list the pod, deployment, and service created by the Helm chart.

kubectl -n istio-system get po,deploy,svc

You will see the following objects.

$ kubectl -n istio-system get po,deploy,svc
NAME                         READY   STATUS    RESTARTS   AGE
pod/istiod-fd9bbfdf8-wd5sw   1/1     Running   0          5m20s

NAME                     READY   UP-TO-DATE   AVAILABLE   AGE
deployment.apps/istiod   1/1     1            0           5m20s

NAME             TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)                                 AGE
service/istiod   ClusterIP   10.102.128.197   <none>        15010/TCP,15012/TCP,443/TCP,15014/TCP   5m20s

The output confirms that the installation completed without any issues.
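If you want to script this check instead of reading the table, the READY column can be parsed. The helper below is a minimal sketch with a made-up name (all_pods_ready). It reads the output of kubectl get pods --no-headers on stdin and succeeds only when every pod reports all of its containers as ready.

```shell
# Hypothetical helper: reads `kubectl get pods --no-headers` output on stdin
# and succeeds only if every pod's READY column (e.g. 1/1) is fully ready.
all_pods_ready() {
  awk '
    { split($2, ready, "/"); if (ready[1] != ready[2]) not_ready = 1 }
    END { exit (not_ready || NR == 0) }   # no pods at all also counts as a failure
  '
}
```

For example: kubectl -n istio-system get pods --no-headers | all_pods_ready && echo "Istiod is ready". For a single deployment, kubectl -n istio-system rollout status deployment/istiod is a built-in alternative.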

Install Istioctl [Local Machine]

Istioctl is a command-line tool to access and manage the Istio Daemon from the local machine.

Use the following command to install Istioctl.

For Linux:

curl -sL https://istio.io/downloadIstioctl | sh -
export PATH=$HOME/.istioctl/bin:$PATH
echo 'export PATH=$HOME/.istioctl/bin:$PATH' >> ~/.bashrc
source ~/.bashrc

For macOS:

brew install istioctl

For other installation options, refer to the official documentation.

Verify the installation using the following command. It shows the Istio client and control plane versions.

$ istioctl version
client version: 1.28.0
control plane version: 1.28.0
data plane version: none

Now, we need to test Istio with applications.

Quick Istio Demo: Deploy Apps, Inject Sidecars, and Split Traffic

Now that we have completed the installation, let's validate the setup using a sample deployment that uses Istio features.

Here is what we are going to do.

- Create a namespace and label it for automatic Istio sidecar injection.

- Then deploy two sample applications versions (V1 & V2) in the istio enabled namespace.

- Configure Destination rule & Virtual Service to split traffic between two versions of app using weighted routing.

- Then test the setup by creating a client pod and sends requests to the application service endpoint.

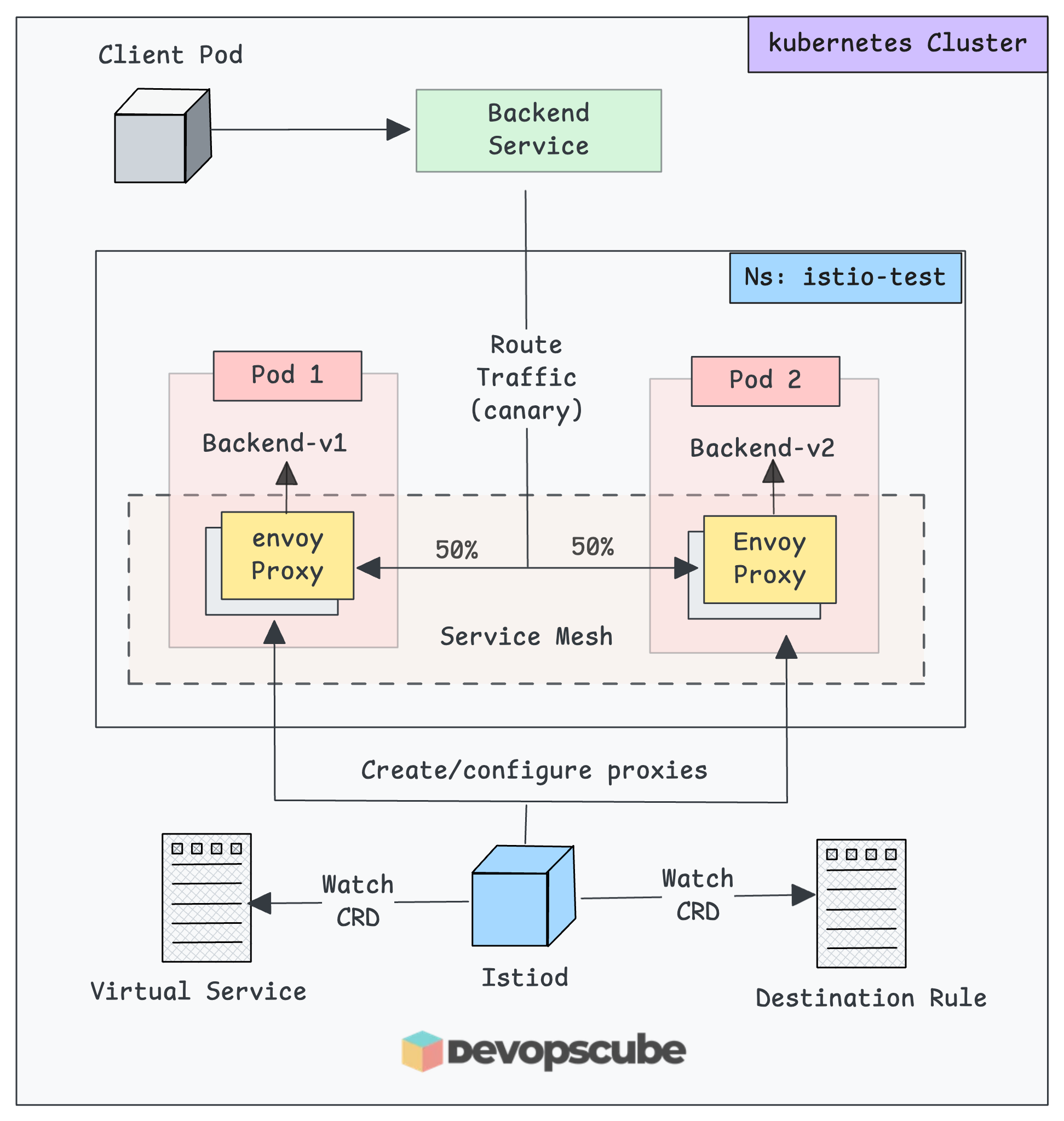

The following image shows what we are going to build in this test setup.

Let's get started with the setup.

Create a Namespace & Label

For testing, we use a dedicated namespace.

kubectl create ns istio-test

Now we need to add the Istio-specific label to enable automatic sidecar injection.

kubectl label namespace istio-test istio-injection=enabled

When you set istio-injection=enabled on a namespace, Istio's mutating admission webhook automatically injects an Envoy proxy sidecar container into any new pods deployed in that namespace.
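Injection happens only when a pod is created, so workloads that were already running before the label was added keep running without a sidecar until they are recreated. The following is a minimal sketch of how that could be handled for an existing namespace. The function name enable_injection is hypothetical, and it assumes the workloads are managed by Deployments.

```shell
# Hypothetical helper: enable injection on an existing namespace and
# recreate its Deployment-managed pods so the webhook can add the sidecar.
# Usage: enable_injection <namespace>
enable_injection() {
  kubectl label namespace "$1" istio-injection=enabled --overwrite
  kubectl -n "$1" rollout restart deployment
}
```

Pods owned by other controllers (StatefulSets, DaemonSets) or created directly are not covered by this sketch and need their own restart.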

Verify the label.

$ kubectl get namespace istio-test --show-labels
NAME         STATUS   AGE    LABELS
istio-test   Active   4m2s   istio-injection=enabled,kubernetes.io/metadata.name=istio-test

Note: If you add the istio-injection=enabled label to an existing namespace with pods, you will need to restart the pods for the sidecar injection to happen.

Deploy Two Sample Apps

For our validation, we will deploy two demo HTTP echo applications and then test the traffic routing.

You can copy and paste the following commands into your terminal to deploy the apps.

cat << EOF > demo-deploy.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-v1
  namespace: istio-test
spec:
  replicas: 3
  selector:
    matchLabels: { app: backend, version: v1 }
  template:
    metadata:
      labels: { app: backend, version: v1 }
    spec:
      containers:
      - name: echo
        image: hashicorp/http-echo
        args: ["-text=hello from backend v1"]
        ports:
        - containerPort: 5678
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: backend-v2
  namespace: istio-test
spec:
  replicas: 2
  selector:
    matchLabels: { app: backend, version: v2 }
  template:
    metadata:
      labels: { app: backend, version: v2 }
    spec:
      containers:
      - name: echo
        image: hashicorp/http-echo
        args: ["-text=hello from backend v2"]
        ports:
        - containerPort: 5678
---
apiVersion: v1
kind: Service
metadata:
  name: backend
  namespace: istio-test
  labels:
    app: backend
    service: backend
spec:
  ports:
  - name: http
    port: 80
    targetPort: 5678
  selector:
    app: backend
EOF

kubectl apply -f demo-deploy.yaml

Once the pods are deployed in the labelled namespace, Istio injects the proxy sidecar into each pod.

Run the following command to check the status of the sample applications and backend svc.

kubectl -n istio-test get po,svc

You will get the following output.

$ kubectl -n istio-test get po,svc
NAME                              READY   STATUS    RESTARTS   AGE
pod/backend-v1-7c88547fc6-6krk8   2/2     Running   0          60s
pod/backend-v1-7c88547fc6-lvntb   2/2     Running   0          60s
pod/backend-v1-7c88547fc6-x49kr   2/2     Running   0          60s
pod/backend-v2-86c767bf6b-blf56   2/2     Running   0          59s
pod/backend-v2-86c767bf6b-zwzp7   2/2     Running   0          59s

NAME              TYPE        CLUSTER-IP      EXTERNAL-IP   PORT(S)   AGE
service/backend   ClusterIP   10.100.134.45   <none>        80/TCP    55s

Notice that the READY column says 2/2. That is the application container plus the sidecar proxy.

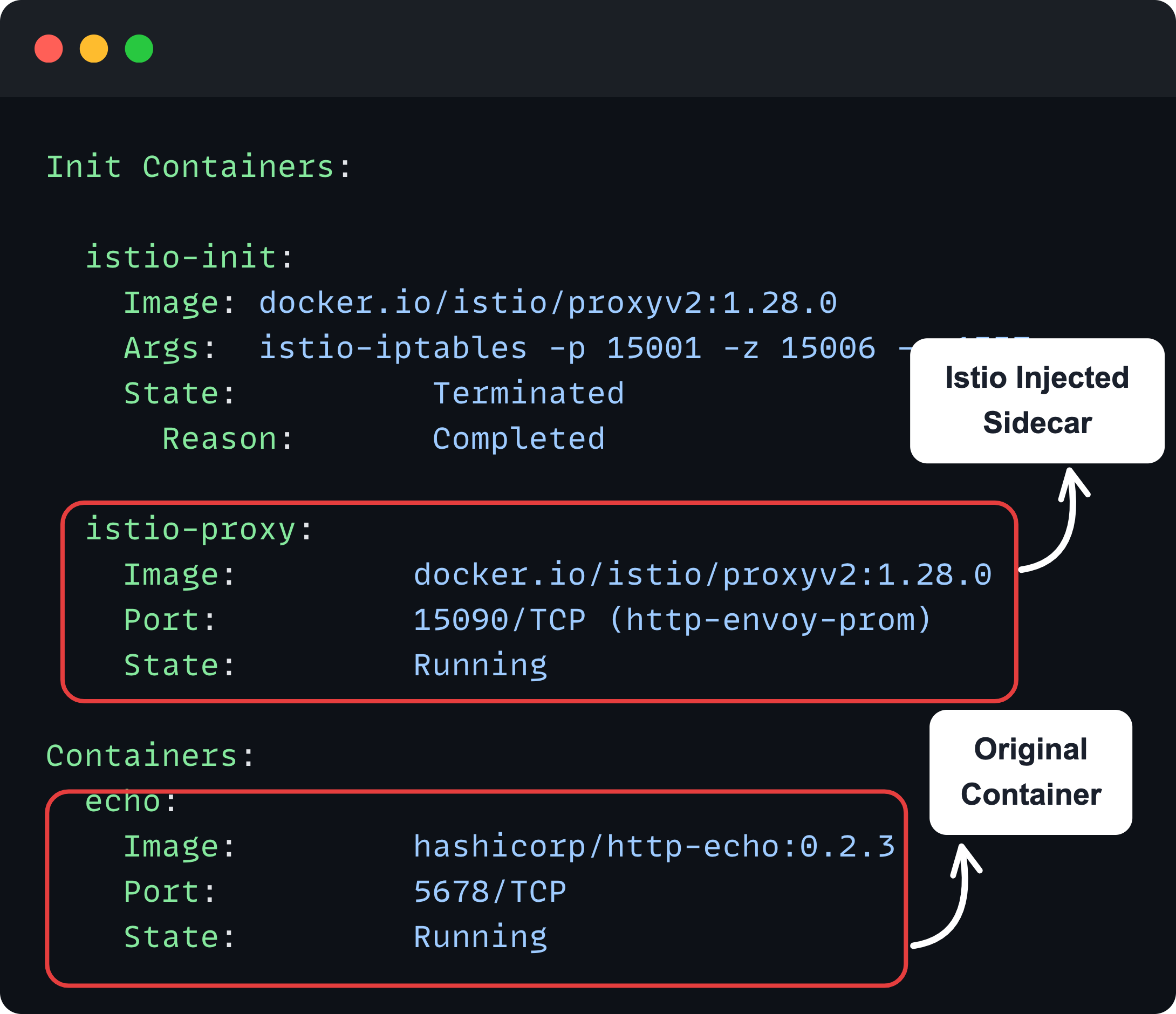

To verify this, describe any of the pods from the namespace, and you will see the sidecar container.

kubectl -n istio-test describe po <pod-name>

In the output, you can see the main container and Istio's proxy container added as a sidecar.
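Describing pods one by one gets tedious, so a jsonpath query can list the containers of every pod in the namespace at once. This is a minimal sketch with a hypothetical function name (list_pod_containers). It prints both init containers and regular containers, on the assumption that, depending on your Istio and Kubernetes versions, the istio-proxy sidecar may show up in either list.

```shell
# Hypothetical helper: print each pod followed by its init containers and
# its regular containers, separated by "|".
# Usage: list_pod_containers <namespace>
list_pod_containers() {
  kubectl -n "$1" get pods \
    -o jsonpath='{range .items[*]}{.metadata.name}{": "}{.spec.initContainers[*].name}{" | "}{.spec.containers[*].name}{"\n"}{end}'
}
```

Running list_pod_containers istio-test should show istio-proxy next to the echo container for every backend pod.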

Also, you can use istioctl to check the status of the proxies using the following command.

istioctl -n istio-test proxy-status

You will get the following output.

$ istioctl -n istio-test proxy-status
NAME                                     CLUSTER      ISTIOD                   VERSION   SUBSCRIBED TYPES
backend-v1-7c88547fc6-6krk8.istio-test   Kubernetes   istiod-fd9bbfdf8-dzcsp   1.28.0    4 (CDS,LDS,EDS,RDS)
backend-v1-7c88547fc6-lvntb.istio-test   Kubernetes   istiod-fd9bbfdf8-dzcsp   1.28.0    4 (CDS,LDS,EDS,RDS)
backend-v1-7c88547fc6-x49kr.istio-test   Kubernetes   istiod-fd9bbfdf8-dzcsp   1.28.0    4 (CDS,LDS,EDS,RDS)
backend-v2-86c767bf6b-blf56.istio-test   Kubernetes   istiod-fd9bbfdf8-dzcsp   1.28.0    4 (CDS,LDS,EDS,RDS)
backend-v2-86c767bf6b-zwzp7.istio-test   Kubernetes   istiod-fd9bbfdf8-dzcsp   1.28.0    4 (CDS,LDS,EDS,RDS)

In the output, we can see five Envoy proxies, one per backend pod, all connected to the Istio daemon (control plane).

Each sidecar Envoy proxy subscribes to specific discovery feeds over a streaming gRPC connection to the Istio control plane, and uses them to decide how traffic should be routed.

- LDS (Listener Discovery Service) - Tells Envoy which IPs and ports to listen on, and what to do when traffic arrives.

- RDS (Route Discovery Service) - Once traffic is received, tells Envoy where it should be sent.

- CDS (Cluster Discovery Service) - Defines the upstream services that Envoy can route to (Envoy calls each one a cluster, which is unrelated to a Kubernetes cluster) and how to connect to them.

- EDS (Endpoint Discovery Service) - Lists the pod IPs and ports behind each Envoy cluster so Envoy can reach the correct destination.

This means that at runtime, Envoy keeps receiving updates about which listeners to open, which routes to use, and which endpoints are available.
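To see what a given sidecar actually received for each of these feeds, istioctl proxy-config can dump them one by one. The helper below is a minimal sketch. The function name show_xds and the default namespace are assumptions for this demo, and the pod name is whatever kubectl get pods shows in your cluster.

```shell
# Hypothetical helper: dump what one sidecar received for each xDS type.
# Usage: show_xds <pod-name> [namespace]
show_xds() {
  pod="$1"
  ns="${2:-istio-test}"
  for kind in listeners routes clusters endpoints; do   # LDS, RDS, CDS, EDS
    echo "== $kind =="
    istioctl proxy-config "$kind" "$pod" -n "$ns"
  done
}
```

The four sections map directly to the list above: listeners come from LDS, routes from RDS, clusters from CDS, and endpoints from EDS.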

Now, our demo deployments are ready, so we can now create the Destination Rule.

Create a Destination Rule

As the name indicates, destination rule basically defines the rules on how the traffic should be handled at the destination (pod).

When we say rules, it means,

- Load balancing method

- Connection limits

- Circuit breaking

- TLS settings

- Outlier detection (ejecting unhealthy pods) and more.

For example, if you want to enable mTLS, or LEAST_REQUEST load balancing for the destination pod, you define all those in the DestinationRule CRD.

All the DestinationRule configurations get translated into Envoy proxy (pod sidecar) configuration.
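As an illustration of such settings, here is a minimal sketch of a DestinationRule that sets LEAST_REQUEST load balancing, a connection limit, and outlier detection for the backend service. It is not needed for the demo in this post. The file name destination-rule-policy.yaml and all the numbers are example values chosen for illustration, not recommendations.

```shell
# Sketch only: written to its own file so it is not confused with the
# DestinationRule created later in this walkthrough. Values are examples.
cat <<'EOF' > destination-rule-policy.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: backend
  namespace: istio-test
spec:
  host: backend.istio-test.svc.cluster.local
  trafficPolicy:
    loadBalancer:
      simple: LEAST_REQUEST        # prefer the pod with fewer active requests
    connectionPool:
      tcp:
        maxConnections: 100        # connection limit to the destination
    outlierDetection:              # circuit breaking: eject failing pods
      consecutive5xxErrors: 5
      interval: 30s
      baseEjectionTime: 60s
EOF
```

Istiod translates the trafficPolicy section into the matching Envoy cluster settings on each sidecar that receives it.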

Note: By default, Istiod distributes a DestinationRule to every proxy in the mesh, regardless of whether that proxy will ever call the service.

In our example, we are going to perform a traffic split between two versions of an application using weighted routing. The same approach is useful for canary releases.

For that, we need to create a DestinationRule that defines two subsets.

- Subset v1: Groups all pods with the label version: v1

- Subset v2: Groups all pods with the label version: v2

When traffic reaches the dataplane proxy, it routes between the label-based subsets of one service.

The following is a sample DestinationRule configuration that creates two subsets.

cat <<EOF > destination-rule.yaml
apiVersion: networking.istio.io/v1alpha3
kind: DestinationRule
metadata:
  name: backend
  namespace: istio-test
spec:
  host: backend.istio-test.svc.cluster.local
  subsets:
  - name: v1
    labels:
      version: v1
  - name: v2
    labels:
      version: v2
EOF

To apply this, use the following command.

kubectl apply -f destination-rule.yaml

In the above config, we have used:

- spec.host: backend.istio-test.svc.cluster.local - The domain name of the backend service that we created in the previous step. It tells Istio that these rules apply to traffic sent to this service.

- subsets - Groups the backend application pods based on labels. We have two deployments under one service, so we created two subsets.

In short: for traffic going to backend.istio-test.svc.cluster.local, apply these rules (subsets v1 and v2).

To list the DestinationRule custom resources, use the following command.

kubectl -n istio-test get destinationrules

You will get the following output.

$ kubectl -n istio-test get destinationrules
NAME      HOST                                   AGE
backend   backend.istio-test.svc.cluster.local   6s

Now, we need to create a VirtualService.

Create a Virtual Service

Virtual Service is a Custom Resource of the Istio, where we define where to route the traffic based on conditions such as host, path, weight, canary, etc.

So why do we need VirtualService when we already have DestinationRule?

Well, they serve completely different purposes in traffic management.

Think of it this way.

- VirtualService = WHAT traffic goes WHERE (e.g., path/host matching, canary/weighted splits, header-based routing, etc.)

- DestinationRule = HOW to handle traffic at the destination (connection policies)

Let's create a VirtualService that does weighted routing (canary style) to the two subsets we defined in the DestinationRule.

To create the VirtualService, use the following manifest.

cat <<EOF > virtual-service.yaml
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: backend
  namespace: istio-test
spec:
  hosts:
  - backend.istio-test.svc.cluster.local
  http:
  - route:
    - destination:
        host: backend.istio-test.svc.cluster.local
        subset: v1
      weight: 50
    - destination:
        host: backend.istio-test.svc.cluster.local
        subset: v2
      weight: 50
EOF

Here, spec.hosts holds the hostname of the service whose traffic we want to route.

spec.http.route - This defines how to spread the traffic across the subsets. Here, we use weighted (canary style) routing, so 50% of the traffic is routed to the v1 subset and the remaining 50% to the v2 subset.
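In a real canary release, the split usually starts heavily in favour of the stable version and is shifted step by step. The helper below is a minimal sketch of how the same manifest could be generated for any pair of weights. The function name render_backend_vs is hypothetical, and the check that the weights add up to 100 is a convention chosen here so the numbers read as percentages. The demo in this post continues with the 50/50 manifest above.

```shell
# Hypothetical helper: print a VirtualService for the backend service with
# the given v1/v2 weights. Refuses weights that do not add up to 100.
# Usage: render_backend_vs <v1-weight> <v2-weight>
render_backend_vs() {
  if [ $(( $1 + $2 )) -ne 100 ]; then
    echo "weights must add up to 100" >&2
    return 1
  fi
  cat <<EOF
apiVersion: networking.istio.io/v1beta1
kind: VirtualService
metadata:
  name: backend
  namespace: istio-test
spec:
  hosts:
  - backend.istio-test.svc.cluster.local
  http:
  - route:
    - destination:
        host: backend.istio-test.svc.cluster.local
        subset: v1
      weight: $1
    - destination:
        host: backend.istio-test.svc.cluster.local
        subset: v2
      weight: $2
EOF
}
```

For example, render_backend_vs 90 10 | kubectl apply -f - would send roughly 10% of the traffic to v2, and re-running it with 50 50 and then 0 100 completes the rollout.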

To apply virtual-service.yaml, use the following command.

kubectl apply -f virtual-service.yaml

To list the available virtual services, use the following command.

kubectl -n istio-test get virtualservices

You will get the following output.

$ kubectl -n istio-test get virtualservices
NAME      GATEWAYS   HOSTS                                      AGE
backend              ["backend.istio-test.svc.cluster.local"]   5s

Now, our setup is ready. Next, we need to test the canary routing using a client pod.

Validate Traffic Routing With A Client

For validation, we are going to create a client pod to send a request to the sample applications.

To create a client pod, use the following manifest. We are deploying a curl image so that we can send curl requests.

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: sleep
  namespace: istio-test
  labels:
    app: sleep
spec:
  containers:
  - name: curl
    image: curlimages/curl:8.8.0
    command:
    - sh
    - -c
    - sleep 3650d
EOF

Wait until the pod is ready.

The sidecar gets injected into this pod as well. Check the status of the proxies using istioctl again.

istioctl -n istio-test proxy-status

You can see that the new pod has also been added as a proxy in Istiod.

$ istioctl -n istio-test proxy-status
NAME                                     CLUSTER      ISTIOD                    VERSION   SUBSCRIBED TYPES
backend-v1-6cf9fdbd56-99lkf.istio-test   Kubernetes   istiod-5d5696f494-pzqxs   1.28.0    4 (CDS,LDS,EDS,RDS)
backend-v2-776557dbfd-www9b.istio-test   Kubernetes   istiod-5d5696f494-pzqxs   1.28.0    4 (CDS,LDS,EDS,RDS)
sleep.istio-test                         Kubernetes   istiod-5d5696f494-pzqxs   1.28.0    4 (CDS,LDS,EDS,RDS)

Now, let's make 10 requests to the backend service from the client and see how many times the traffic hits the v1 and v2 versions.

for i in $(seq 1 10); do
  kubectl exec -n istio-test -it sleep -- curl -s http://backend.istio-test.svc.cluster.local
  echo
done

You can see that the requests are split between the two versions.

$ for i in $(seq 1 10); do
  kubectl exec -n istio-test -it sleep -- curl -s http://backend.istio-test.svc.cluster.local
  echo
done
hello from backend v2
hello from backend v2
hello from backend v2
hello from backend v1
hello from backend v1
hello from backend v2
hello from backend v1
hello from backend v1
hello from backend v1
hello from backend v1

That's it! You have deployed Istio and tested a sample app for canary routing.
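With only 10 requests, the observed split (6 responses from v1 and 4 from v2 in the run above) will rarely match the configured 50/50 exactly. Sending more requests and counting the responses gives a clearer picture. The following is a minimal sketch. Both function names are hypothetical, and it assumes the sleep pod and backend service from this demo.

```shell
# Hypothetical helper: send N requests from the sleep pod to the backend.
# No -it flags here, because the output is piped instead of shown in a terminal.
send_requests() {
  i=0
  while [ "$i" -lt "$1" ]; do
    kubectl exec -n istio-test sleep -- curl -s http://backend.istio-test.svc.cluster.local
    echo
    i=$((i + 1))
  done
}

# Count how many responses came from each version, one line per version.
tally_versions() {
  grep '^hello from backend' | sort | uniq -c | awk '{ print $NF ": " $1 }'
}
```

For example, send_requests 100 | tally_versions prints one line per version with its count, which should land close to the weights set in the VirtualService.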

Exposing Applications Outside Cluster

The next step in the setup would be setting up a gateway to expose Istio enabled services outside the cluster.

The standard way to expose applications in Istio is using Ingress Gateways combined with a VirtualService.

The Kubernetes Gateway API will be the default API for traffic management in the future.

We have covered the entire setup in a separate blog.

Please refer to the Istio Ingress With Kubernetes Gateway API blog for the detailed setup guide.

Cleanup

Once you are done with the setup and no longer need the resources running, clean them up to free CPU and memory.

To delete the deployments, service, and client pod of the demo application, use the following commands.

kubectl -n istio-test delete deploy backend-v1 backend-v2
kubectl -n istio-test delete svc backend
kubectl -n istio-test delete po sleep

Now, we can delete the namespace.

kubectl delete ns istio-test

To remove the Istio daemon, use the following Helm command.

helm -n istio-system uninstall istiod

To uninstall the Istio base chart that installed the Custom Resource Definitions, use the following command.

helm -n istio-system uninstall istio-base

Helm generally leaves CRDs in place when a chart is uninstalled, so run kubectl get crds | grep istio afterwards and delete any that remain.

Conclusion

When it comes to Istio setup in organizations, Helm is the preferred installation method.

Helm + GitOps is a strong pattern for mature teams. This way, you get versioned installs, a clear change history, and the ability to roll back.

In this blog, we walked through installing the Istio custom resources and the Istio daemon (Istiod), and using istioctl for setup and basic configuration. We also showed how to test the installation with a sample app.

There is more you can do with Istio, like routing rules, timeouts, mutual authentication, etc., and we will cover these topics one by one in the upcoming posts.

If you have any trouble getting started with Istio setup, do let us know in the comments. We will help you out!