This tutorial walks you through setting up Ingress on GKE with the built-in GKE ingress controller. It covers:

- The need for ingress

- The logic behind the GKE ingress controller

- Creating your first ingress for a demo application

- Different ways to map domains (hosts) and paths in an ingress

Let’s dive right in.

Why GKE Ingress?

Exposing each service as a separate LoadBalancer is not an ideal way to handle Kubernetes ingress traffic: every such service gets its own cloud load balancer and external IP.

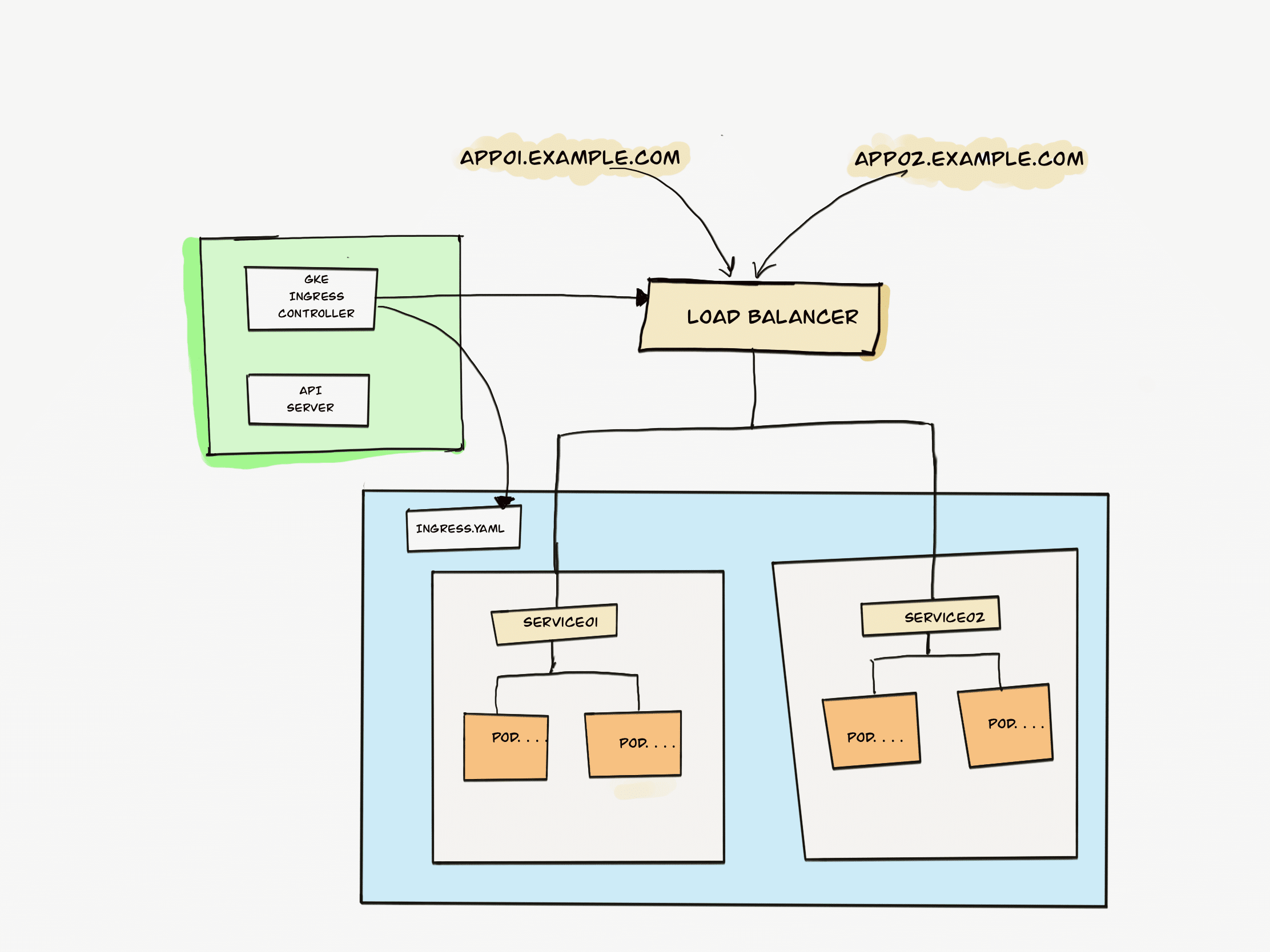

Instead, you can use a Kubernetes ingress controller to manage all the ingress traffic for the cluster. With direct DNS or wildcard DNS mappings, it routes traffic to the backend Kubernetes services.

You can also point multiple DNS names at a single ingress load balancer and have the ingress controller route each one to a different service backend.

Ingress resources also support path-based rules that route requests to different Kubernetes services based on the URL path.

The good news is that GKE ships with a built-in ingress controller, so you don't need any extra configuration to set one up.

You can also install other ingress controllers, such as the Nginx ingress controller. Which one to use depends on your project requirements and the features each controller supports.

In this tutorial, we focus only on creating ingress objects with the GKE ingress controller.

How Does GKE Ingress Work?

As you probably know, for Kubernetes ingress to work, you need an Ingress controller.

If you are new to ingress concepts, read the ingress tutorial first to understand how it works.

GKE has its own ingress controller, called the GKE ingress controller.

When you create an ingress object, GKE provisions a Google Cloud load balancer (public or private) configured with all the routing rules defined in the ingress resource.

Since the load balancer runs outside the cluster, the backend services referenced in the ingress resource must be of type NodePort so that the load balancer can reach them.

In contrast, in-cluster ingress controllers such as the Nginx ingress controller run their proxy layer inside the cluster, so they can talk to services directly without a NodePort.

GKE also supports Network Endpoint Groups (NEGs). With NEGs, even though the backend service is of type NodePort, GKE does not send traffic to an arbitrary node in the cluster to reach the pods. Instead, the load balancer sends traffic directly to the pods, on the nodes where they run.

Without NEGs, traffic from the load balancer can reach any node in the cluster, which can add extra network hops before it gets to the node where the pod runs.
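GKE decides whether a Service gets NEGs for ingress from the `cloud.google.com/neg` annotation on the Service. The manifest below is a sketch that adds the annotation to the `nginx-service` used later in this tutorial. On newer VPC-native clusters GKE may already enable NEGs for ingress backends by default, so treat the explicit annotation as an assumption to check against your cluster version. With NEGs in place the Service could also be of type ClusterIP; NodePort is kept here to match the rest of the tutorial.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: default
  annotations:
    # Ask GKE to create NEGs so the ingress load balancer targets pod IPs directly
    cloud.google.com/neg: '{"ingress": true}'
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
```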

Setup Ingress on GKE using a GKE Ingress Controller

Now, let's look at a practical example of setting up ingress using the GKE ingress controller.

To understand the GKE ingress workflow and configurations better, we will do the following.

- Deploy an Nginx deployment with a `NodePort` service (NodePort is a requirement).

- Create an ingress object with a static IP and a rule that routes traffic to the Nginx service endpoint.

- Validate the ingress deployment by accessing Nginx through the load balancer IP.

Let's get started with the setup.

Step 1: Deploy an Nginx Deployment With Service Type NodePort

Save the following manifest as nginx.yaml. It adds a custom index.html through a ConfigMap, which replaces the default Nginx index.html file.

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
  namespace: default
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:latest
          ports:
            - containerPort: 80
          # GKE can infer the load balancer health check from this probe
          readinessProbe:
            httpGet:
              scheme: HTTP
              path: /index.html
              port: 80
            initialDelaySeconds: 10
            periodSeconds: 5
          volumeMounts:
            # Mount the ConfigMap over the default Nginx web root
            - name: nginx-public
              mountPath: /usr/share/nginx/html/
      volumes:
        - name: nginx-public
          configMap:
            name: nginx-index-html-configmap
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: nginx-index-html-configmap
  namespace: default
data:
  index.html: |
    <html>
    <h1>Welcome To Webapp 01</h1>
    <br>
    <h1>Hi! You are Trying to Access Webapp Through GKE Ingress </h1>
    </html>
---
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: default
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
```

Create the deployment.

```bash
kubectl apply -f nginx.yaml
```

The resources are created in the default namespace.

Validate the deployment. You should see two replicas of Nginx.

```bash
kubectl get deployments
```

Validate the Nginx service endpoint. You should be able to see the randomly assigned NodePort.

```bash
kubectl get svc nginx-service
```

Step 2: Create Ingress for the Nginx Deployment

Now that we have a working deployment, we will create an ingress resource.

Let's start with a basic setup; we will look at other ingress options afterward.

We will create an ingress that routes traffic to the Nginx service endpoint.

First, create a static public IP address named ingress-webapps for the ingress to use. With a static IP, you can point the required domain name at a fixed ingress IP.

```bash
gcloud compute addresses create ingress-webapps --global
```

Save the following manifest as ingress.yaml.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: "gce"
    kubernetes.io/ingress.global-static-ip-name: "ingress-webapps"
spec:
  rules:
    - http:
        paths:
          - path: "/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```

As you can see, the manifest has two annotations.

- The `kubernetes.io/ingress.class` annotation with the value `gce` tells GKE to create a public load balancer. If you don't specify it, GKE defaults to a public load balancer.

- The `kubernetes.io/ingress.global-static-ip-name` annotation with the value `ingress-webapps` tells GKE to attach the static IP we created to the ingress load balancer.

- The `http` rule routes all traffic, i.e. `/*`, to the `nginx-service` endpoint.
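If you need a private load balancer instead of a public one (the private case mentioned earlier), GKE also accepts the `gce-internal` ingress class, which provisions an internal load balancer reachable only from inside your VPC. The manifest below is a sketch under a few assumptions: the name `nginx-internal-ingress` is hypothetical, your VPC already has a proxy-only subnet in the cluster's region, and `nginx-service` uses NEGs, which internal ingress requires. The global static IP annotation is left out because the internal load balancer is regional.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-internal-ingress   # hypothetical name
  annotations:
    # Request an internal (private) load balancer instead of a public one
    kubernetes.io/ingress.class: "gce-internal"
spec:
  rules:
    - http:
        paths:
          - path: "/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
```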

Let's create the ingress.

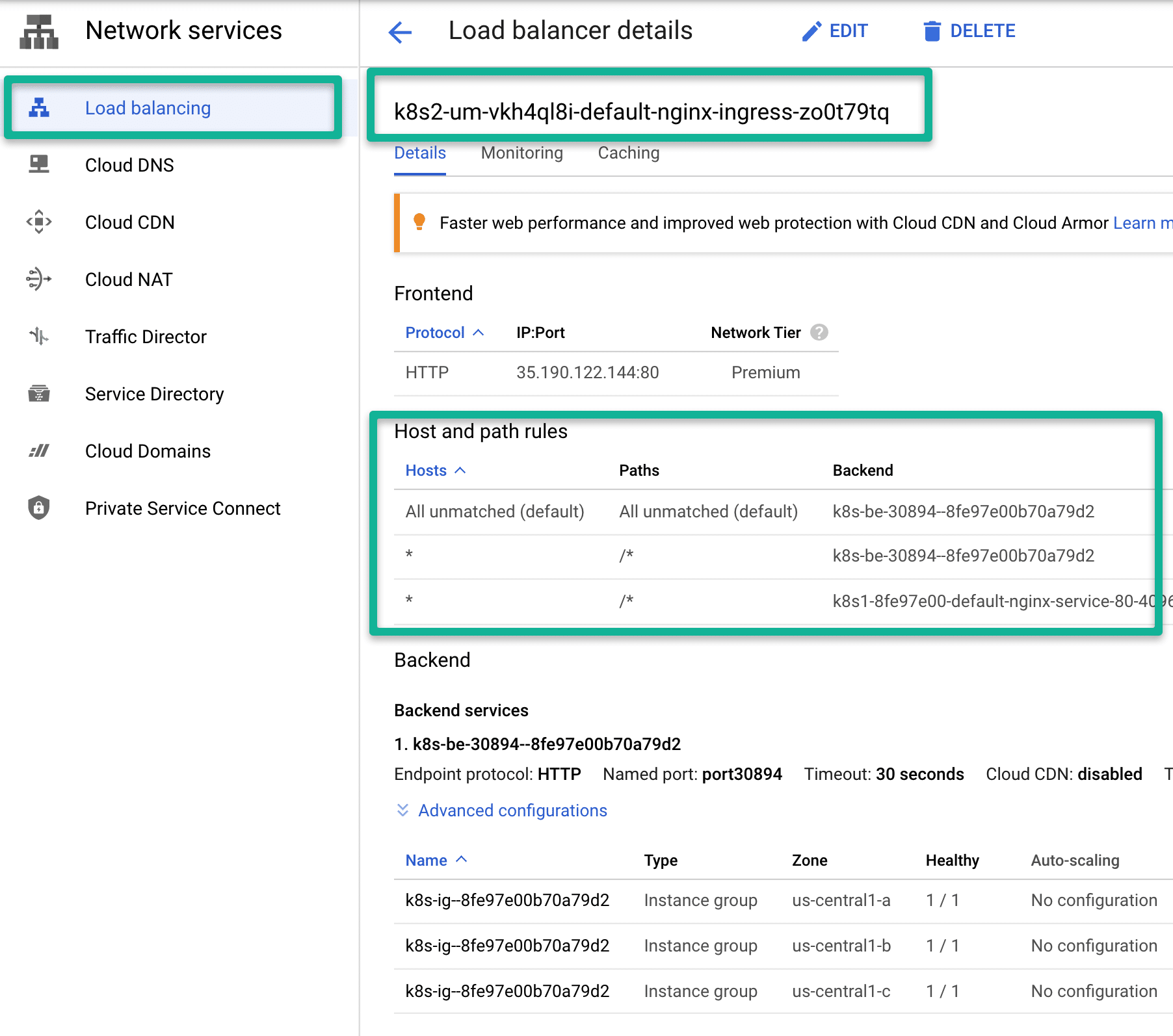

```bash
kubectl apply -f ingress.yaml
```

Creating the ingress provisions a load balancer with the routing rules defined in the ingress YAML.

Step 3: Validate Ingress

Let's list the ingresses and get the load balancer IP.

```bash
kubectl get ingress
```

After a few minutes, you should be able to access the Nginx service running inside the cluster using the load balancer IP.

You can also check the load balancing page in the Google Cloud console for more details about the ingress load balancer.

Hosting Multiple Domains on the Same GKE Ingress Load Balancer

To host multiple applications, each with its own domain, on the same load balancer, create a DNS A record for each domain that points to the same load balancer IP.

Then, in the ingress specification, add each domain name as a host rule with its backend service endpoint, as shown below.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: "gce"
    kubernetes.io/ingress.global-static-ip-name: "ingress-webapps"
spec:
  rules:
    - host: "www.web01.com"
      http:
        paths:
          # "/*" sends every path on this host to the backend
          - pathType: ImplementationSpecific
            path: "/*"
            backend:
              service:
                name: web01-service
                port:
                  number: 8080
    - host: "www.web02.com"
      http:
        paths:
          - pathType: ImplementationSpecific
            path: "/*"
            backend:
              service:
                name: web02-service
                port:
                  number: 8080
```
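A request whose Host header matches neither rule, for example one sent straight to the load balancer IP, is handled by the default backend. Unless you configure one, GKE uses its own default backend, which answers with a 404. The fragment below is a sketch, assuming you would rather send such requests to the `nginx-service` from Step 1: merge `spec.defaultBackend` into the `nginx-ingress` spec above and leave the host rules unchanged.

```yaml
# Fragment: merge into the spec of the multi-domain nginx-ingress manifest
spec:
  # Serves any request that matches none of the host rules
  defaultBackend:
    service:
      name: nginx-service
      port:
        number: 80
```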

Path-Based Routing Using GKE Ingress

Now, suppose you have several backend services, each routed by a specific path. In that case, your ingress would look like the following:

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: nginx-ingress
  annotations:
    kubernetes.io/ingress.class: "gce"
    kubernetes.io/ingress.global-static-ip-name: "ingress-webapps"
spec:
  rules:
    - http:
        paths:
          # Catch-all: requests not matched by a more specific path go here
          - path: "/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
          # On GKE, "/api" matches only the exact path /api;
          # use "/api/*" to also match sub-paths such as /api/v1
          - path: "/api"
            pathType: ImplementationSpecific
            backend:
              service:
                name: api-service
                port:
                  number: 80
          - path: "/users"
            pathType: ImplementationSpecific
            backend:
              service:
                name: user-service
                port:
                  number: 80
```

Multiple Ingress Resources with a Single GKE Ingress Controller

With in-cluster ingress controllers such as Nginx, you can map multiple ingress resources to a single ingress controller and its proxy.

However, the load-balancer-based GKE ingress controller cannot share a single load balancer across multiple ingress objects.

For each ingress object, GKE provisions a separate Google Cloud load balancer.

Here is what the Google Cloud documentation says about this multiple-ingress limitation.

One way to work around this limitation is to put all the ingress rules in a single ingress resource.
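For example, host-based and path-based rules can live in the same ingress resource and therefore share one load balancer and one static IP. The manifest below is a sketch that reuses the service names from the earlier examples; the ingress name `combined-ingress` is hypothetical, and it is meant to replace the separate `nginx-ingress` manifests rather than run alongside them.

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: combined-ingress   # hypothetical name
  annotations:
    kubernetes.io/ingress.class: "gce"
    kubernetes.io/ingress.global-static-ip-name: "ingress-webapps"
spec:
  rules:
    # Host-based rule: every path on www.web01.com goes to web01-service
    - host: "www.web01.com"
      http:
        paths:
          - path: "/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: web01-service
                port:
                  number: 8080
    # Path-based rules for all other hosts; the longest matching path wins
    - http:
        paths:
          - path: "/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: nginx-service
                port:
                  number: 80
          - path: "/api/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: api-service
                port:
                  number: 80
          - path: "/users/*"
            pathType: ImplementationSpecific
            backend:
              service:
                name: user-service
                port:
                  number: 80
```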

There is a limit of 50 paths per backend mapping. See the Google Cloud quotas documentation for all the limits.

Customizing GKE Ingress Configurations

The examples so far use the default settings of the GKE ingress controller.

For production workloads, however, you may need to tune the configuration to suit your application.

A few use cases:

- Custom headers: adding custom HTTP request headers before traffic reaches your backends.

- Session affinity: sending requests from a given user to the same backend.

Refer to the GKE ingress controller features document to understand all the supported configurations.

To use these extra GKE ingress features, you deploy a BackendConfig with the required settings. BackendConfig is a custom resource type that ships with the GKE ingress controller.
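Creating a BackendConfig on its own changes nothing: GKE applies it only to Services that reference it through the `cloud.google.com/backend-config` annotation, and the BackendConfig must live in the same namespace as the Service. As a sketch, assuming you want the `session-cookie-config` BackendConfig shown next to apply to every port of the `nginx-service` from Step 1, the Service would look like this:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-service
  namespace: default
  annotations:
    # Apply the BackendConfig named session-cookie-config to all ports of this Service
    cloud.google.com/backend-config: '{"default": "session-cookie-config"}'
spec:
  selector:
    app: nginx
  type: NodePort
  ports:
    - port: 80
      targetPort: 80
```

To target a single port instead, the annotation also accepts a `ports` map, for example `'{"ports": {"80": "my-backendconfig"}}'`.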

For example, to enable cookie-based session affinity, deploy the following BackendConfig.

```yaml
apiVersion: cloud.google.com/v1
kind: BackendConfig
metadata:
  name: session-cookie-config
spec:
  sessionAffinity:
    affinityType: "GENERATED_COOKIE"
    affinityCookieTtlSec: 50
```

To set custom request headers in GKE ingress, use a BackendConfig like the following.

```yaml
apiVersion: cloud.google.com/v1
kind: BackendConfig
metadata:
  name: my-backendconfig
spec:
  customRequestHeaders:
    headers:
      - "X-Client-Region:{client_region}"
      - "X-Client-City:{client_city}"
      - "X-Client-CityLatLong:{client_city_lat_long}"
```

Conclusion

In this tutorial, we learned how to set up Ingress on GKE using the GKE ingress controller and a Google Cloud load balancer.

Choosing the GKE ingress controller over another ingress controller depends on your project requirements and the features you need in the ingress layer.

You might need more information, or you may run into issues while setting it up.

Either way, drop a comment below.