In this guide, I explain how to handle Kubernetes pod graceful shutdown using SIGTERM and preStop hooks, with practical examples.

One of the fundamental concepts in Kubernetes is Pod Graceful Shutdown. It plays a key role in application reliability.

While modern application architectures often use patterns like circuit breakers, retries, and timeouts to handle failures gracefully, graceful pod shutdown acts as the last line of defense.

By the end of this guide, you will have learned:

- What SIGTERM and SIGKILL are

- How Kubernetes handles SIGTERM and SIGKILL

- How to handle SIGTERM in applications, in practice

- The role of preStop hooks and why they matter

- The link between SIGTERM and container PID 1

Let's get started.

Kubernetes & SIGTERM

When a pod is being shut down in Kubernetes (due to scaling, an update, or any other reason), Kubernetes sends a SIGTERM signal to the app inside.

SIGTERM (Signal 15) is used to request the termination of a process.

SIGKILL (Signal 9) forces an immediate termination of a process. Unlike SIGTERM, it cannot be caught or handled by the application.
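To make the difference concrete, here is a small Python sketch (separate from the demo app in this guide) that prints the two signal numbers and checks which of them a process may install a handler for. The helper name can_be_caught is made up for illustration, and the behavior shown assumes Linux or another POSIX system.

```python
import signal


def can_be_caught(sig):
    """Return True if the OS lets this process install a handler for sig."""
    try:
        previous = signal.signal(sig, lambda signum, frame: None)
    except (OSError, ValueError):
        # The kernel refuses handlers for SIGKILL; ValueError covers
        # calls made outside the main thread.
        return False
    if previous is not None:
        signal.signal(sig, previous)  # restore whatever was installed before
    return True


print(int(signal.SIGTERM), int(signal.SIGKILL))
print("SIGTERM can be caught:", can_be_caught(signal.SIGTERM))
print("SIGKILL can be caught:", can_be_caught(signal.SIGKILL))
```

This is why all graceful shutdown logic has to hang off SIGTERM: by the time SIGKILL arrives, the process gets no chance to run any code.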

If your app does not handle SIGTERM properly, it might be stopped in the middle of serving a user request or processing a file (ultimately by SIGKILL), leading to data loss or a bad user experience.

For example, let's say an app is handling file uploads or user payments.

If the pod shuts down without waiting, files may be incomplete, or payments may fail. It could lead to:

- ECONNRESET errors for clients with in-flight requests

- HTTP 5xx errors (typically 502 Bad Gateway or 503 Service Unavailable)

- Database connections remaining open until they time out, etc.

By catching SIGTERM, you can,

- Finish the current request

- Save important state

- Notify other services

Kubernetes Graceful Pod Termination Process

First lets look at how Kubernetes handles SIGTERM.

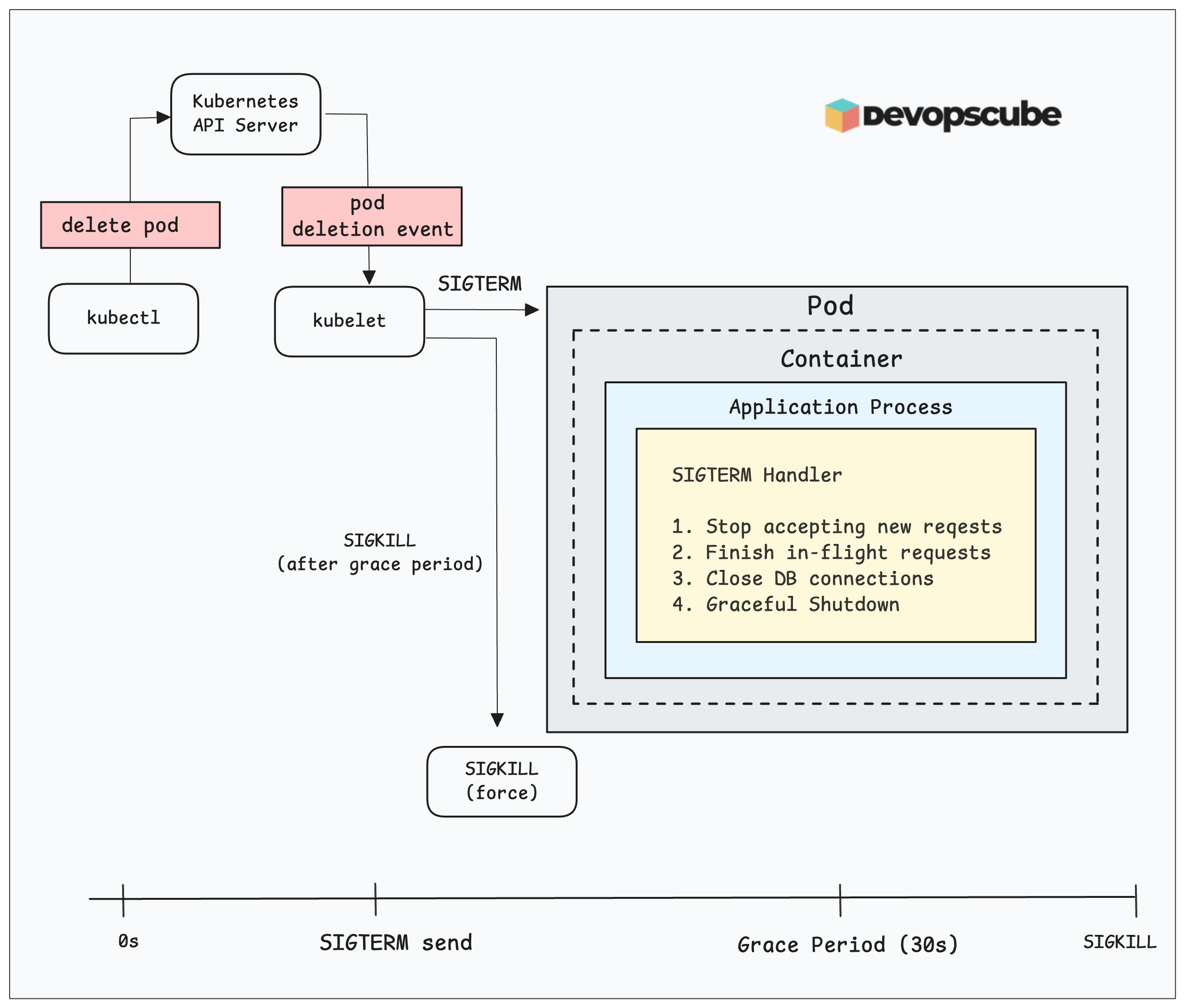

The following diagram shows the graceful pod termination process in Kubernetes.

Here is how it works.

- A pod deletion request is sent to Kubernetes via kubectl delete pod, or the API server sends a deletion event.

- Then the kubelet sends a SIGTERM to the main process of the application container. When there are multiple containers in a pod, Kubernetes sends SIGTERM to all of them, in no guaranteed order.

- Once the application receives the SIGTERM, it has 30 seconds by default to handle the signal and shut down gracefully. (The grace period is configurable using terminationGracePeriodSeconds.)

- Now, the application's SIGTERM handler stops accepting new requests, finishes processing in-flight requests, closes database connections, and so on.

- If the SIGTERM handler completes all the graceful shutdown activities, the application shuts down gracefully.

- If the application process does not exit within the grace period, Kubernetes sends a SIGKILL signal to terminate it forcefully.
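The SIGTERM, wait, SIGKILL sequence described above can be tried locally without a cluster. The following is a rough simulation, not how the kubelet is implemented (the kubelet works through the container runtime). The function names start_child and stop_like_kubelet are hypothetical, and the child is a throwaway Python process that either dies on SIGTERM or ignores it.

```python
import signal
import subprocess
import sys


def start_child(ignore_sigterm):
    """Start a throwaway Python process that optionally ignores SIGTERM."""
    lines = ["import signal, time"]
    if ignore_sigterm:
        lines.append("signal.signal(signal.SIGTERM, signal.SIG_IGN)")
    lines += ["print('ready', flush=True)", "time.sleep(30)"]
    proc = subprocess.Popen(
        [sys.executable, "-c", "\n".join(lines)],
        stdout=subprocess.PIPE,
        text=True,
    )
    proc.stdout.readline()  # block until the child has finished its setup
    proc.stdout.close()
    return proc


def stop_like_kubelet(proc, grace_period_seconds):
    """Send SIGTERM, wait up to the grace period, then fall back to SIGKILL."""
    proc.send_signal(signal.SIGTERM)
    try:
        proc.wait(timeout=grace_period_seconds)
        return "exited after SIGTERM"
    except subprocess.TimeoutExpired:
        proc.kill()  # SIGKILL cannot be caught or ignored
        proc.wait()
        return "killed with SIGKILL"


print(stop_like_kubelet(start_child(ignore_sigterm=False), 5))
print(stop_like_kubelet(start_child(ignore_sigterm=True), 1))
```

The second call uses a 1-second grace period only to keep the demo short. The child that ignores SIGTERM is still running when the period ends, so it is killed forcefully.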

Is 30 seconds terminationGracePeriodSeconds ideal?

There is no definitive answer. The 30 seconds (the Kubernetes default) works for most cases.

However, databases or heavy processing apps may need 60-120 seconds, and batch jobs or data processing workloads might need 300+ seconds to finish their work properly. So it depends on the application.

Handling SIGTERM in an Application

To understand Graceful Pod Shutdown using SIGTERM, let's build a Flask app that performs the following tasks.

- Takes a few seconds to process a request (simulates real work)

- Handles the SIGTERM signal properly.

- Cleans up before shutting down.

The workflow remains the same for all types of apps. The libraries and their implementation will change based on the use case.

Create a Python file app.py and copy the below content.

    from flask import Flask
    import signal
    import time
    import sys

    app = Flask(__name__)

    # Set to True once SIGTERM arrives so new requests can be rejected
    is_shutting_down = False


    def handle_sigterm(signum, frame):
        """Run the (simulated) cleanup steps, then exit with status 0."""
        global is_shutting_down
        print("\n[SHUTDOWN] SIGTERM signal received.")
        is_shutting_down = True
        print("[SHUTDOWN] Finishing remaining tasks before exit...")
        time.sleep(1)
        print("[SHUTDOWN] Saving in-progress orders to database...")
        time.sleep(1)
        print("[SHUTDOWN] Notifying other services that shutdown is happening...")
        time.sleep(1)
        print("[SHUTDOWN] Cleanup complete. Shutting down gracefully.")
        sys.exit(0)


    # Register the handler so SIGTERM no longer uses the default action
    signal.signal(signal.SIGTERM, handle_sigterm)


    @app.route("/work")
    def do_work():
        # Reject new work once shutdown has started
        if is_shutting_down:
            print("[WORK] Rejected request: shutting down.")
            return "Server is shutting down. Try again later.\n", 503
        # The sleeps below simulate about 7 seconds of real work
        print("[WORK] New order processing started...")
        time.sleep(3)
        print("[WORK] Validating order data...")
        time.sleep(2)
        print("[WORK] Writing order to database...")
        time.sleep(2)
        print("[WORK] Order processed successfully.")
        return "Order processed successfully!\n"


    @app.route("/")
    def home():
        return "Welcome to the Order Processing App\n"


    if __name__ == "__main__":
        print("[APP] Starting Flask app...")
        app.run(host="0.0.0.0", port=5000)
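The app above does all of its cleanup inside the signal handler. One alternative design, shown here only as a sketch, keeps the handler tiny (it just flips a flag) and tracks in-flight requests so the shutdown path can wait for them to drain. The class ShutdownCoordinator and its method names are hypothetical and are not part of Flask or of the demo image.

```python
import threading


class ShutdownCoordinator:
    """Tracks in-flight requests and a shutdown flag (hypothetical helper)."""

    def __init__(self):
        self.shutting_down = False
        self._in_flight = 0
        self._cond = threading.Condition()

    def handle_sigterm(self, signum, frame):
        # Keep the handler tiny: only flip the flag, do the cleanup elsewhere.
        self.shutting_down = True

    def try_begin_request(self):
        """Return False once shutdown has started, so callers can answer 503."""
        with self._cond:
            if self.shutting_down:
                return False
            self._in_flight += 1
            return True

    def end_request(self):
        """Mark one request as finished and wake up anyone waiting to drain."""
        with self._cond:
            self._in_flight -= 1
            self._cond.notify_all()

    def wait_for_drain(self, timeout_seconds):
        """Block until no requests are in flight or the timeout expires."""
        with self._cond:
            return self._cond.wait_for(
                lambda: self._in_flight == 0, timeout_seconds
            )


coordinator = ShutdownCoordinator()
coordinator.try_begin_request()          # a request starts
coordinator.handle_sigterm(15, None)     # SIGTERM arrives mid-request
print("new request accepted:", coordinator.try_begin_request())
coordinator.end_request()                # the in-flight request finishes
print("drained:", coordinator.wait_for_drain(1))
```

In a real service you would register the method with signal.signal(signal.SIGTERM, coordinator.handle_sigterm), wrap each request in try_begin_request() and end_request(), and pick a drain timeout that fits inside terminationGracePeriodSeconds.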

Here is the Dockerfile to build the application image. If you don't want to build an image, use our devopscube/python-app:1.0.0 image for testing.

    FROM python:3.9-slim
    WORKDIR /app
    COPY app.py .
    RUN pip3 install flask
    CMD ["python3", "app.py"]

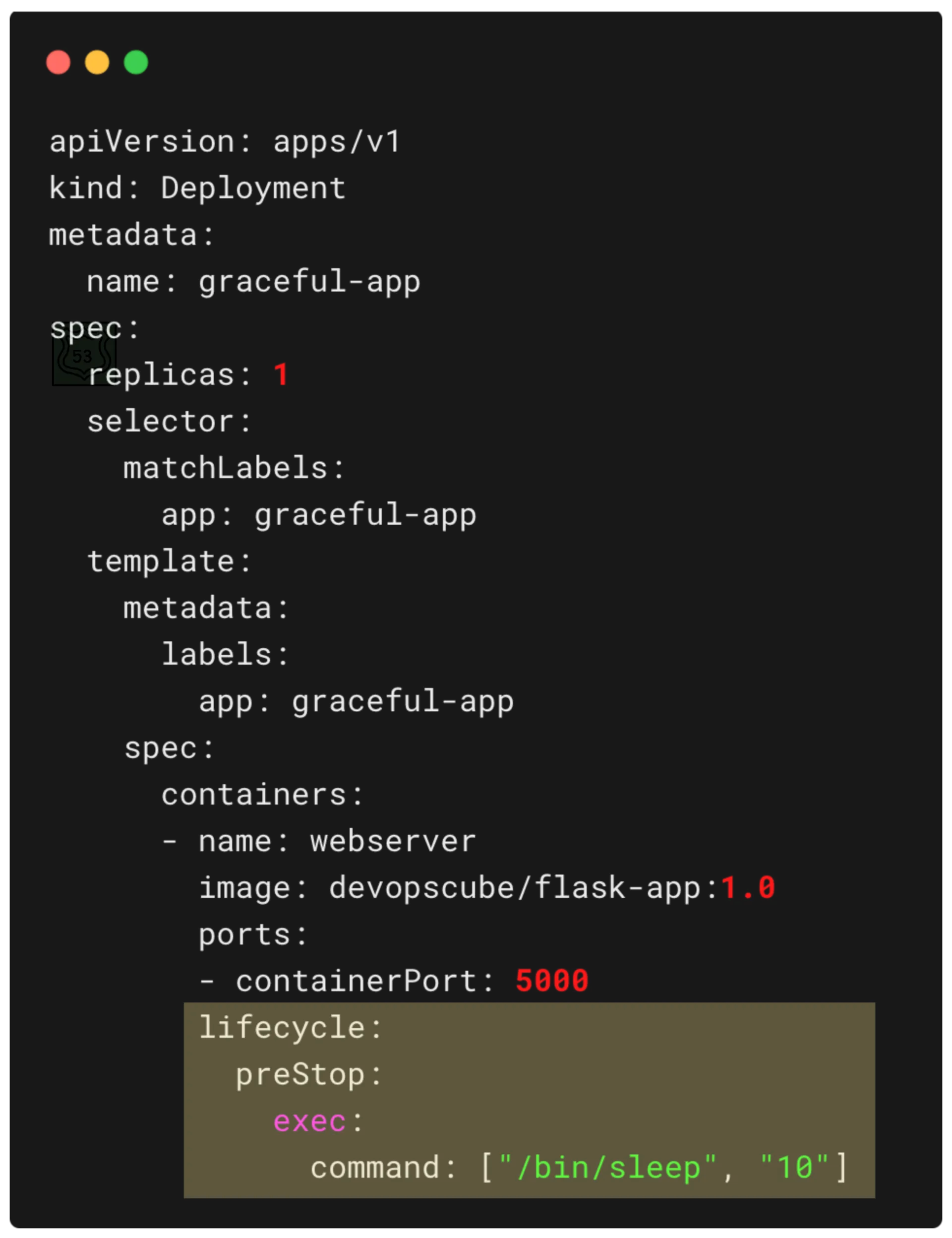

Once you have the image ready in a Docker registry, deploy it using the following Deployment manifest.

Create graceful-pod.yaml and copy the following manifest.

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: graceful-app
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: graceful-app
      template:
        metadata:
          labels:
            app: graceful-app
        spec:
          containers:
          - name: graceful-app
            image: devopscube/python-app:1.0.0
            ports:
            - containerPort: 5000
            terminationMessagePath: "/dev/termination-log"
          terminationGracePeriodSeconds: 60

In the above YAML manifest, terminationMessagePath is the path inside the container where the container writes its termination message (/dev/termination-log is the default). It tells Kubernetes where to find that message.
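Writing to that file is the application's job. As a minimal sketch, assuming the app wants to leave a short final status that Kubernetes can surface in the container's terminated state, a helper could look like the following. The function name write_termination_message and the message text are made up for illustration, and the demo writes to a temporary file rather than the real path.

```python
import os
import tempfile


def write_termination_message(message, path="/dev/termination-log"):
    """Write a short final status message where Kubernetes will look for it."""
    with open(path, "w", encoding="utf-8") as f:
        f.write(message)


# Local demonstration against a temporary file instead of /dev/termination-log.
demo_path = os.path.join(tempfile.mkdtemp(), "termination-log")
write_termination_message("shutdown complete: 0 requests dropped", demo_path)

with open(demo_path, encoding="utf-8") as f:
    print(f.read())
```

Inside a container you would call the helper with its default path, typically as the last step of the SIGTERM handler.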

As we learned before, terminationGracePeriodSeconds tells Kubernetes to wait up to 60 seconds (default is 30) before forcefully deleting the pod.

If the pod has not shut down within the grace period, Kubernetes kills it forcefully.

Let's deploy the manifest.

    kubectl apply -f graceful-pod.yaml

Validate Graceful Shutdown

The next step is to validate the graceful shutdown. Follow the steps below for validation.

Step 1: Tail the Logs

To watch the logs of the running pod in real time, run the following commands:

    POD_NAME=$(kubectl get pods -l app=graceful-app -o jsonpath='{.items[0].metadata.name}')
    kubectl logs -f $POD_NAME

Step 2: Send Test Requests

While the logs are tailing, you can use kubectl port-forward and send curl requests continuously to see whether they are being processed.

Or use Apache Benchmark (ab) to send requests continuously.

Open a new terminal and run the following command to port-forward the pod.

    kubectl port-forward pod/<pod-name> 5000:5000

Now, open another terminal and run the following command to send curl requests to the pod continuously.

    while true; do curl -s http://localhost:5000/work; echo; sleep 1; done

Step 3: Delete the Pod

Now, delete the pod (for example, with kubectl delete pod <pod-name>) and watch how the app handles SIGTERM before the pod is removed.

You will get the log as follows.

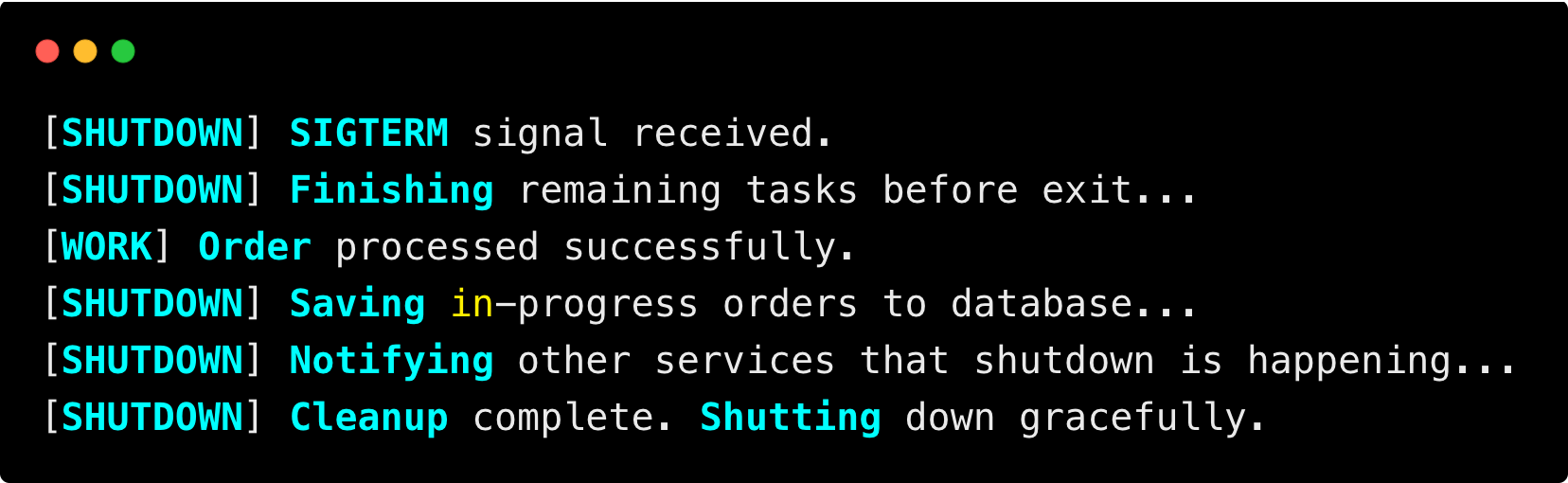

Here is what you just saw.

- Kubernetes sent SIGTERM when you deleted the Pod.

- The Flask app caught the signal.

- Finish in-flight work: the app allowed ongoing tasks/requests to complete.

- Clean up resources: close database connections, files, network listeners, etc.

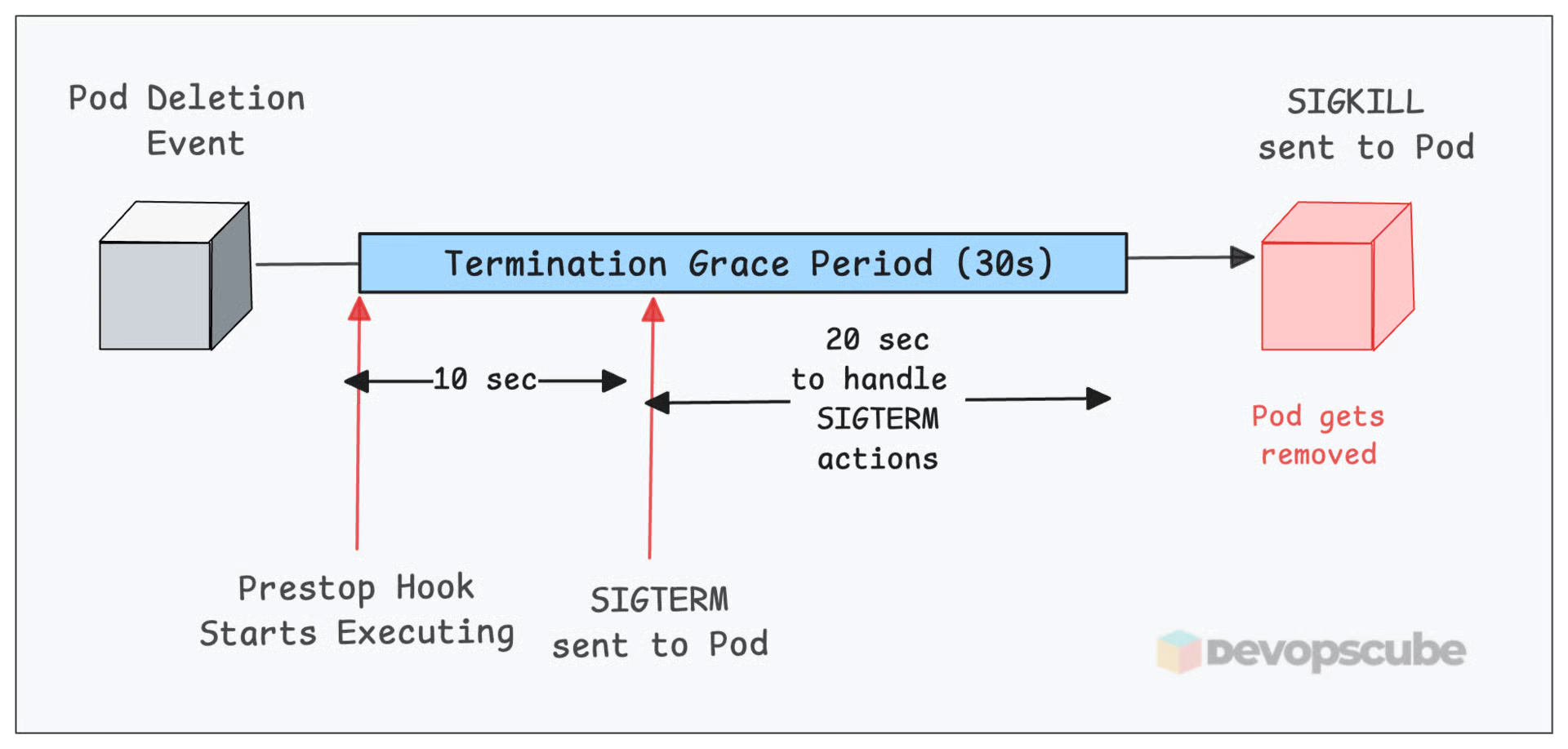

Using PreStop Hook for Buffer Time

When you delete a Pod, Kubernetes sends a SIGTERM signal to your app.

The problem is that while your app is starting to shut down, components like kube-proxy, Ingress controllers, or external load balancers (like AWS ALB) might still be routing traffic to that Pod.

This delay happens because it takes time to,

- Remove the Pod from the service endpoints (update takes time to propagate)

- Update kube-proxy iptables rules

- External load balancers (like AWS ALB) also need time to remove the Pod from their target list.

So if your app shuts down too quickly, clients might still get routed to it and end up with “connection refused” errors.

At the same time, there is no mechanism to control how fast other components (like kube-proxy, Ingress, etc.) stop sending traffic to the Pod.

But you can slow down your app's shutdown a bit using a preStop hook. The preStop hook runs before Kubernetes sends SIGTERM.

For example, adding a simple sleep 10 in the preStop hook gives your app a 10-second buffer to let traffic stop flowing before it shuts down.

This gives time for kube-proxy to update iptables rules or an external load balancer (e.g., the AWS ALB ingress controller) to stop forwarding requests to that pod.
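One thing to keep in mind when sizing the buffer: the time spent in the preStop hook counts against terminationGracePeriodSeconds, so the hook and the SIGTERM handler share one budget. The arithmetic can be sketched as below. The function names are hypothetical, and the 3-second and 25-second shutdown times are illustrative assumptions (3 seconds matches the three one-second cleanup steps in the demo app's handler).

```python
def termination_budget(grace_period_seconds, pre_stop_seconds, shutdown_seconds):
    """Return the seconds left over (negative means SIGKILL would hit first)."""
    return grace_period_seconds - (pre_stop_seconds + shutdown_seconds)


def fits_in_grace_period(grace_period_seconds, pre_stop_seconds, shutdown_seconds):
    """True if the preStop hook plus the app's shutdown finish in time."""
    budget = termination_budget(
        grace_period_seconds, pre_stop_seconds, shutdown_seconds
    )
    return budget >= 0


# Default 30s grace period, sleep 10 in preStop, 3s of cleanup: 17s to spare.
print(termination_budget(30, 10, 3))

# Same settings, but 25s of in-flight work: 5s short, so SIGKILL would arrive.
print(termination_budget(30, 10, 25), fits_in_grace_period(30, 10, 25))
```

If the budget comes out negative, either raise terminationGracePeriodSeconds or shorten the preStop sleep.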

Here is an example of a Deployment YAML with the preStop lifecycle hook highlighted.
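The manifest itself is not reproduced in this section, so the following is a minimal sketch rather than the original file. It reuses the graceful-app Deployment from earlier and the sleep 10 mentioned above, and it assumes the container image ships a sleep binary.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: graceful-app
spec:
  replicas: 1
  selector:
    matchLabels:
      app: graceful-app
  template:
    metadata:
      labels:
        app: graceful-app
    spec:
      terminationGracePeriodSeconds: 60
      containers:
      - name: graceful-app
        image: devopscube/python-app:1.0.0
        ports:
        - containerPort: 5000
        lifecycle:
          preStop:
            exec:
              # Runs before SIGTERM is sent; gives endpoints time to update.
              # Assumes "sleep" exists in the image.
              command: ["sleep", "10"]
```

The lifecycle.preStop block is the only addition compared with the earlier manifest.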

SIGTERM & PID

Kubernetes sends SIGTERM to the process with PID 1 inside the container. If your app is not running as PID 1, it will not receive the signal, and the code to handle graceful termination will not execute.

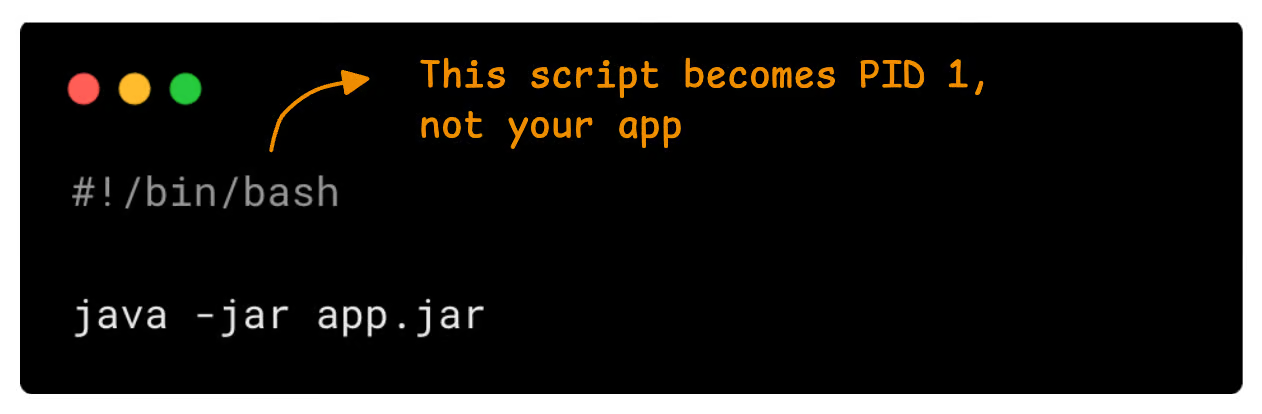

For example, if the Dockerfile runs a shell script that starts your app as a child process, the script becomes PID 1, not your app.

Look at the following example.

However, if you need a shell script, use exec to replace the shell process.
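The effect of exec can be reproduced on any POSIX machine without a container. The sketch below starts a shell wrapper twice, once without exec and once with it, and compares the shell's PID with the PID the "app" reports for itself. The helper run_wrapper and the one-line app are made up for this demonstration; they stand in for a start script and app.py.

```python
import subprocess
import sys

# The "app" is a one-liner that prints its own PID.
APP = "import os; print(os.getpid())"


def run_wrapper(script):
    """Run a shell wrapper and report (shell_pid, app_pid)."""
    proc = subprocess.Popen(
        ["sh", "-c", script, "sh", sys.executable, APP],
        stdout=subprocess.PIPE,
        text=True,
    )
    out, _ = proc.communicate()
    return proc.pid, int(out.strip())


# Without exec the shell stays alive and the app runs as its child.
# The trailing "true" stops the shell from optimising the fork away.
shell_pid, app_pid = run_wrapper('"$1" -c "$2"; true')
print("without exec, app has the shell's PID:", shell_pid == app_pid)

# With exec the app replaces the shell and inherits its PID.
shell_pid, app_pid = run_wrapper('exec "$1" -c "$2"')
print("with exec, app has the shell's PID:", shell_pid == app_pid)
```

Inside a container the shell's PID is 1, so the same mechanism decides whether your app or the wrapper script ends up as PID 1.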

To check this, you can use the following command inside the pod.

    ps -e -o pid,ppid,cmd

Here is an example of a pod where app.py is assigned PID 1 because it is started using exec in the shell script.

    ➜ k exec -it graceful-app-pid1-8649dfbf5c-v48tv -- bash
    root@graceful-app-pid1-8649dfbf5c-v48tv:/app# ps -e -o pid,ppid,cmd
    PID PPID CMD
      1    0 python3 app.py
      7    0 bash
     14    7 ps -e -o pid,ppid,cmd
    root@graceful-app-pid1-8649dfbf5c-v48tv:/app#

The following example shows PID 1 assigned to the shell script because it was started without using exec.

    ➜ k exec -it graceful-app-no-pid1-5d7b6bfdf7-2xffr -- bash
    root@graceful-app-no-pid1-5d7b6bfdf7-2xffr:/app# ps -e -o pid,ppid,cmd
    PID PPID CMD
      1    0 /bin/bash ./start-without-exec.sh
      7    1 python3 app.py
      8    0 bash
     14    8 ps -e -o pid,ppid,cmd

This applies to CMD as well. In a Dockerfile, how you write the CMD instruction affects how SIGTERM is handled.

For example,

- CMD ["python3", "app.py"] - This is the exec form. python3 becomes PID 1 (the first process) inside the container.

- CMD python3 app.py - This is the shell form. Docker runs it as /bin/sh -c "python3 app.py". In this case, the shell (sh) becomes PID 1, and python3 is just a child process.

Modern frameworks handle SIGTERM for you

Popular frameworks include built-in support for graceful shutdown.

For example,

In Spring Boot (Java), just set server.shutdown=graceful and it stops accepting new traffic and waits for ongoing requests before exiting.

Go's http.Server includes a Shutdown() method that stops accepting new connections and waits for active ones to finish. You still call it from your own SIGTERM handling code.

These usually handle the common logic, such as,

- Stop accepting new requests.

- Wait for active HTTP requests to finish, etc.

However, you may need to perform special cleanup actions: e.g., deregister from a service, send metrics, flush caches, commit state to the database etc.
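One way to organize such custom steps in Python is contextlib.ExitStack, which runs registered callbacks in reverse order of registration. The sketch below is illustrative only: the three cleanup functions are placeholders that just record their names, and the ordering (deregister first, commit state last) is an assumption about what a typical service would want.

```python
from contextlib import ExitStack

log = []


def deregister_from_service():
    log.append("deregistered")


def flush_cache():
    log.append("cache flushed")


def commit_state():
    log.append("state committed")


def run_cleanup():
    """Run the registered cleanup steps in reverse registration order."""
    with ExitStack() as stack:
        stack.callback(commit_state)             # registered first, runs last
        stack.callback(flush_cache)
        stack.callback(deregister_from_service)  # registered last, runs first


run_cleanup()
print(log)  # ['deregistered', 'cache flushed', 'state committed']
```

Calling run_cleanup() from the shutdown path keeps the steps in one place, and every registered callback still runs even if an earlier one raises.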

Wrapping Up

We have covered almost all the key concepts in Kubernetes pod graceful termination.

Here are the key takeaways.

- Handle SIGTERM properly with a suitable termination grace period.

- Ensure your application process runs as PID 1 in the container so that it receives the SIGTERM signal.

- Use preStop hooks for graceful shutdown buffer time.

Over to you.

What problems did you face (or think you might face) when trying to safely shut down a Kubernetes pod?

Comment below.