Kubernetes is a complex distributed system that requires a robust and efficient distributed database to function smoothly. This is where etcd comes into play.

What is etcd?

etcd is the backbone of Kubernetes, acting both as a backend service discovery tool and a key-value database. Often referred to as the "brain" of the Kubernetes cluster, etcd is an open-source, strongly consistent, distributed key-value store.

But what exactly does that mean?

- Strong Consistency: In a distributed system, strong consistency means a write is acknowledged only after a majority of nodes have persisted it, and every subsequent read returns that latest committed value regardless of which node serves it. Clients never see conflicting versions of the same key.

- Distributed Nature: etcd is designed to operate across multiple nodes as a cluster, without compromising on consistency. This distributed architecture ensures that etcd remains highly available and resilient.

- Key-Value Store: etcd is a non-relational database that stores data as simple key-value pairs. It also provides a key-value API, making it easy to interact with the stored data. The data store is built on top of bbolt, a fork of BoltDB, known for its high performance and reliability.

etcd achieves strong consistency and availability using the Raft consensus algorithm.

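This algorithm enables etcd to function in a leader-follower fashion: one member is elected leader and replicates every write to the followers, so the cluster stays available as long as a majority of members can still reach each other.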
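To make Raft's majority rule concrete, here is a minimal sketch of how a leader could work out which log entries are safe to treat as committed. The function name `commit_index` and the `match_index` list are illustrative assumptions, not etcd's actual code, and the sketch leaves out Raft's extra rule that an entry must belong to the leader's current term before it is committed by counting replicas.

```python
def commit_index(match_index: list[int]) -> int:
    """Return the highest log index stored on a majority of members.

    match_index[i] is the last log index known to be persisted on member i
    (the leader counts itself). Hypothetical helper for illustration only.
    """
    if not match_index:
        raise ValueError("cluster must have at least one member")
    majority = len(match_index) // 2 + 1
    # After sorting in descending order, the value at position majority - 1
    # is held by at least `majority` members.
    return sorted(match_index, reverse=True)[majority - 1]


if __name__ == "__main__":
    # Five members: the leader and one follower are at index 7, one follower
    # is at 5, and two are lagging. Index 5 is on three of five members.
    print(commit_index([7, 7, 5, 3, 2]))  # prints 5
```

Entries up to the returned index survive the loss of any minority of members, which is why a write can be acknowledged at that point.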

How does etcd integrate with Kubernetes?

In simple terms, every time you use kubectl to retrieve details about a Kubernetes object, the data you see ultimately comes from etcd by way of the API server. Similarly, when you deploy an object like a pod, the API server records it as an entry in etcd.

Here’s what you need to know about etcd in the context of Kubernetes:

- Centralized Storage: etcd stores all configurations, states, and metadata of Kubernetes objects—such as pods, secrets, daemonsets, deployments, configmaps, and statefulsets.

- Real-Time Tracking: Kubernetes leverages etcd’s watch functionality (via the Watch() API) to monitor changes in the state of objects, enabling real-time tracking and response to updates.

- API Accessibility: etcd exposes a key-value API using gRPC. Additionally, it includes a gRPC gateway, a RESTful proxy that translates HTTP API calls into gRPC messages. This makes etcd an ideal database for Kubernetes.

- Data Storage Structure: All Kubernetes objects are stored under the /registry directory key in etcd, organized in a key-value format. This is called the etcd key space. For example, information about a pod named nginx in the default namespace would be found under /registry/pods/default/nginx.
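The key layout and the watch behavior described in the list above can be sketched with a toy in-memory store. Everything here is an assumption made for illustration: `registry_key`, `ToyStore`, and its methods are hypothetical names, not the etcd client API, and real Kubernetes stores serialized objects rather than plain strings. Cluster-scoped objects also omit the namespace segment, which this helper does not model.

```python
from typing import Callable


def registry_key(resource: str, namespace: str, name: str) -> str:
    """Build the key for a namespaced object under the /registry prefix."""
    return f"/registry/{resource}/{namespace}/{name}"


class ToyStore:
    """In-memory stand-in for a key-value store with prefix watches."""

    def __init__(self) -> None:
        self._data = {}
        self._watchers = []  # list of (prefix, callback) pairs

    def watch(self, prefix: str, callback: Callable[[str, str], None]) -> None:
        """Register a callback for every later write under `prefix`."""
        self._watchers.append((prefix, callback))

    def put(self, key: str, value: str) -> None:
        """Store the value, then notify watchers whose prefix matches."""
        self._data[key] = value
        for prefix, callback in self._watchers:
            if key.startswith(prefix):
                callback(key, value)

    def keys_with_prefix(self, prefix: str) -> list:
        """Return all stored keys under `prefix`, sorted."""
        return sorted(k for k in self._data if k.startswith(prefix))


store = ToyStore()
pod_events = []
# Watch only pod keys, similar in spirit to a component watching one resource.
store.watch("/registry/pods/", lambda key, value: pod_events.append(key))
store.put(registry_key("pods", "default", "nginx"), "pod spec")
store.put(registry_key("configmaps", "default", "app"), "config data")
print(pod_events)  # only the pod key triggered the watch
```

Because every object of one kind shares a key prefix, a single prefix watch is enough to follow all pods without seeing unrelated writes.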

Note: etcd is the only stateful component within the Kubernetes control plane.

etcd HA Architecture

When it comes to deploying etcd in a high-availability (HA) architecture, there are two primary modes:

- Stacked etcd: This setup involves deploying etcd alongside the control plane nodes. It's a simpler deployment model but with potential trade-offs in terms of isolation and scaling.

- External etcd Cluster: In this mode, etcd is deployed as a dedicated cluster, independent of the control plane nodes, offering better isolation, scalability, and resilience.

etcd Quorum and Node Count

Quorum is a concept in distributed systems that refers to the minimum number of nodes that must be operational and able to communicate for the cluster to function correctly.

In etcd, quorum is used to ensure consistency and availability in the face of node failures.

The quorum is calculated as:

quorum = floor(n / 2) + 1

Where n is the total number of nodes in the cluster, and floor means rounding down to the nearest whole number.

For instance, to tolerate the failure of one node, a minimum of three etcd nodes is required. To withstand two node failures, you would need at least five nodes, and so on.

The number of nodes in an etcd cluster directly affects its fault tolerance. Here's how it breaks down:

- 3 nodes: Can tolerate 1 node failure (quorum = 2)

- 5 nodes: Can tolerate 2 node failures (quorum = 3)

- 7 nodes: Can tolerate 3 node failures (quorum = 4)

And so on. The general formula for the number of node failures a cluster can tolerate is:

fault tolerance = floor((n - 1) / 2)

Where n is the total number of nodes, and floor means rounding down to the nearest whole number.

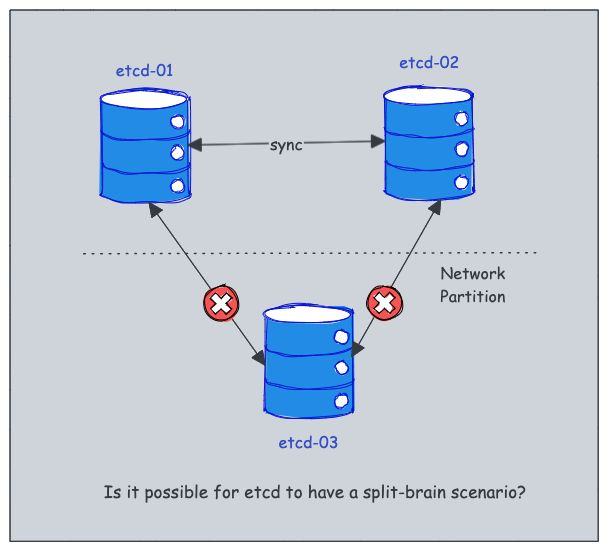
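The quorum and fault tolerance formulas are easy to check with a few lines of Python. This is only a sketch of the arithmetic; the function names `quorum` and `fault_tolerance` are illustrative, and integer division is used so that even cluster sizes round down.

```python
def quorum(n: int) -> int:
    """Minimum number of members that must agree in an n-member cluster."""
    return n // 2 + 1


def fault_tolerance(n: int) -> int:
    """Number of members that can fail while a quorum is still reachable."""
    return (n - 1) // 2


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} nodes: quorum = {quorum(n)}, "
              f"tolerates {fault_tolerance(n)} failure(s)")
```

Running it shows that a 4-node cluster tolerates one failure, the same as a 3-node cluster, and a 6-node cluster tolerates two, the same as a 5-node cluster. Under this arithmetic, an even member count adds no fault tolerance over the odd count just below it.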

Avoiding Split-Brain Scenarios in etcd

In distributed systems, a split-brain scenario occurs when nodes lose communication with each other, typically due to a network partition, and each isolated group keeps operating as if it were the whole cluster.

This can lead to inconsistent or conflicting system states, which is particularly problematic in critical systems like Kubernetes.

However, etcd is specifically designed to prevent split-brain scenarios.

It employs a robust leader election mechanism, ensuring that only one node is actively controlling the cluster at any given time. According to the official documentation, split-brain scenarios are effectively avoided in etcd. Here's how:

- When a network partition occurs, the etcd cluster is divided into two segments: a majority and a minority.

- The majority segment continues to function as the available cluster, while the minority segment becomes unavailable.

- If the leader resides within the majority segment, the system treats the failure as a minority follower failure. The majority segment remains operational with no impact on consistency.

- If the leader is part of the minority segment, it detects that it has lost contact with a majority of the nodes in the cluster and steps down from its leadership role.

- The majority segment then elects a new leader, ensuring continuous availability and consistency.

- Once the network partition is resolved, the minority segment automatically identifies the new leader from the majority segment and synchronizes its state accordingly.

This design ensures that etcd maintains strong consistency and prevents split-brain scenarios, even in the face of network issues.
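The partition behavior above comes down to one check: can a segment still reach a majority of the full cluster? Here is a minimal sketch of that check, assuming a hypothetical helper `partition_outcome` that takes the total member count and the sizes of the isolated segments. It models only the majority rule, not elections or timeouts.

```python
def partition_outcome(cluster_size: int, segments: list[int]) -> list[bool]:
    """For each segment size, report whether that segment can keep a leader.

    A segment stays available only if it contains a majority of the
    full cluster, counted against cluster_size rather than against
    the members that happen to be reachable.
    """
    if any(size < 0 for size in segments) or sum(segments) > cluster_size:
        raise ValueError("segment sizes must fit within the cluster")
    needed = cluster_size // 2 + 1
    return [size >= needed for size in segments]


if __name__ == "__main__":
    # A 5-member cluster split 3/2: only the 3-member side stays available.
    print(partition_outcome(5, [3, 2]))
    # Split 2/2/1: no segment holds a majority, so the cluster is unavailable.
    print(partition_outcome(5, [2, 2, 1]))
```

Two segments can never both contain more than half of the members, so at most one entry in the result is ever true. That is the property that rules out two leaders accepting writes at the same time.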

Production Case Studies
