In this blog, we will look at the basics of Azure AI Foundry and then set up an AI Foundry hub and a project to deploy a large language model (LLM).

By the end of this blog, you will have learned:

- What Azure AI Foundry is and how it works

- How to set up an AI Foundry Hub and Project

- How to deploy a large language model (LLM) in the project

- Key features like model fine-tuning, deployment options, agent services, and playgrounds

- The difference between Azure AI Foundry and Azure OpenAI

- Best practices for setting up access, storage, and security

What is Azure AI Foundry?

Azure AI Foundry is Microsoft's managed platform that handles the entire AI application lifecycle, with a key focus on generative AI and modern AI development.

Think of it as a complete toolkit for building modern AI applications at enterprise scale.

Instead of starting from scratch, you get access to a catalog of more than 1,900 pre-trained models from various providers.

You can also build RAG applications using your company's data, manage prompts and LLM workflows, and handle both traditional MLOps and the newer LLMOps requirements.

In short, if you're looking for a one-stop solution to build, train, deploy, and scale AI applications without stitching together multiple separate services, Azure AI Foundry is the service you need.

When we first started using Azure AI Foundry, it was quite confusing.

There is a separate AI Foundry portal, and then there is also an AI Foundry service inside the Azure portal.

So before you begin, it's important to understand these two key concepts in Azure AI Foundry. In this blog, we will be looking at the AI Foundry service through the Azure portal.

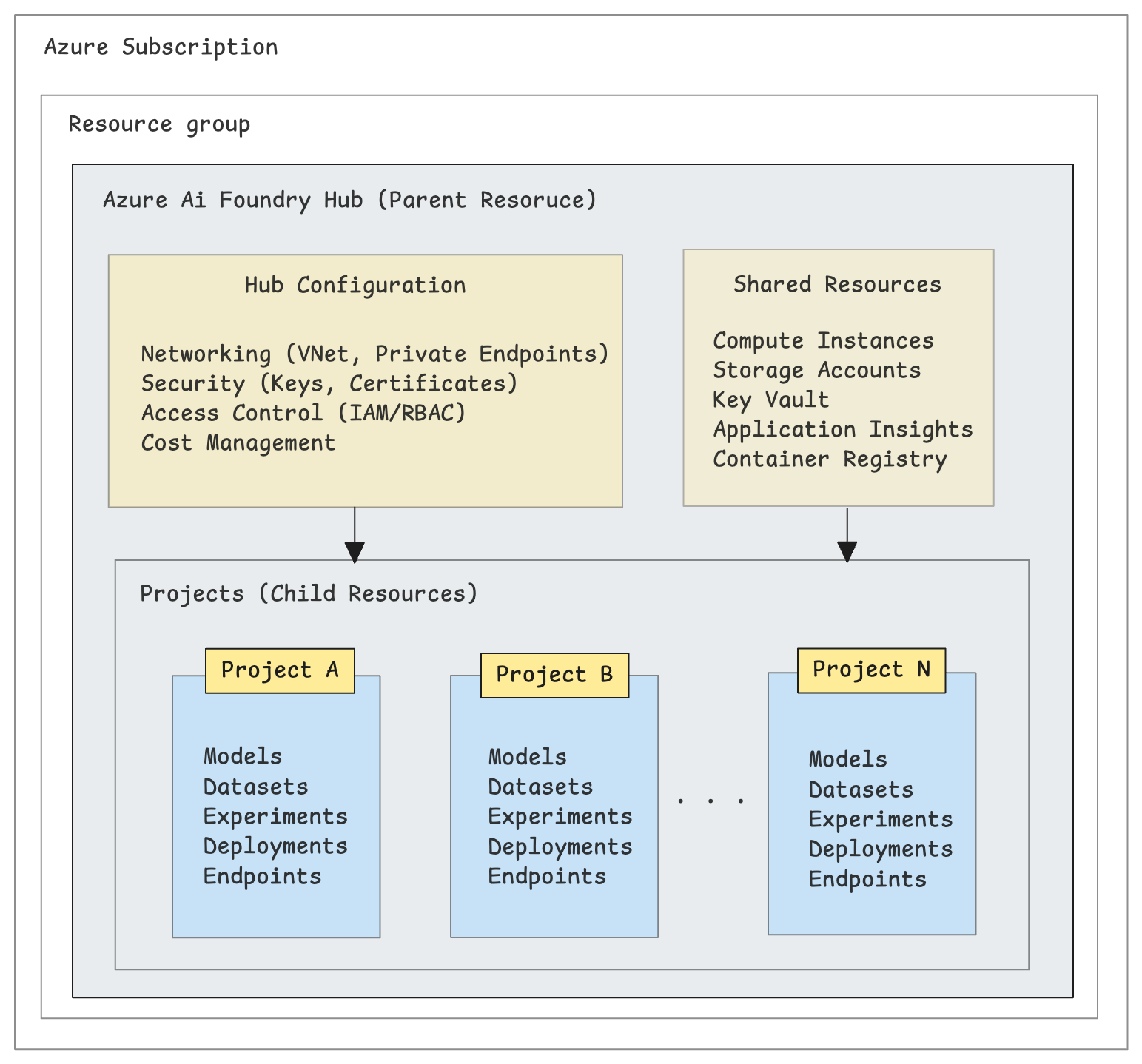

The following image shows the architecture of Azure AI Foundry.

Azure AI Foundry Hub: The hub acts as the parent resource that provides shared infrastructure and centralized management. It centralizes and manages the following.

- Security and networking configuration (Private endpoints, managed virtual networks, Azure Policies)

- Shared compute, storage resources, and connections (e.g., to Azure OpenAI, AI Search, Storage, Key Vault, Container Registry)

- Access control and permissions (Azure RBAC and Azure ABAC)

- Cost management across all child projects

Azure AI Foundry Projects: Projects are individual workspaces within a hub where the actual AI development happens (model training, deployment, etc.). Each project contains the following.

- Models (trained or imported)

- Datasets for training and testing

- Indexes for search and retrieval

- Experiments and deployments

- Endpoints for model serving

And within Projects, you have access to two main categories of AI services.

- Azure OpenAI: For integrating OpenAI's models (GPT, etc.) into applications

- Azure AI Services: Ready-to-use AI services, such as Bot Service, AI Content Safety, Machine Learning, AI Search, AI Speech, Vision, Language, etc.
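To make the hub and project relationship concrete, here is a small sketch in plain Python. It is only a mental model, not an Azure SDK: the `Hub` and `Project` classes and their fields are hypothetical names we made up for illustration. The point it shows is that shared settings live once on the hub, and every project reads them from there.

```python
from dataclasses import dataclass, field


@dataclass
class Hub:
    """Hypothetical model of a hub: holds settings shared by all child projects."""
    name: str
    region: str
    connections: list[str] = field(default_factory=list)
    projects: dict[str, "Project"] = field(default_factory=dict)

    def create_project(self, name: str) -> "Project":
        # A project is always created under a hub and keeps a link back to it.
        project = Project(name=name, hub=self)
        self.projects[name] = project
        return project


@dataclass
class Project:
    """Hypothetical model of a project: owns its assets, inherits hub settings."""
    name: str
    hub: Hub = field(repr=False, compare=False)
    deployments: list[str] = field(default_factory=list)

    @property
    def connections(self) -> list[str]:
        # Read from the hub instead of storing a copy per project.
        return self.hub.connections


hub = Hub(name="demo-ai-hub", region="eastus", connections=["Azure OpenAI", "AI Search"])
project = hub.create_project("demo-project")
project.deployments.append("gpt-4o-mini")
print(project.connections)
```

Because the project only holds a reference to its hub, adding a connection on the hub later is visible to every project right away, which mirrors how hub-level configuration is shared.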

Let's Begin

Here is what we are going to do in this guide:

- Create Azure AI Foundry Hub (Through Azure Portal)

- Create a project inside the Hub

- Deploy a large language model in the project.

If you are setting up AI Foundry and need strong governance, including advanced networking, security configurations, and enterprise-grade controls, you need to create a hub-based project starting from the Azure portal. This guide is based on AI Foundry hub management through the Azure portal.

Create an Azure AI Foundry Hub

The first step is to create a Hub.

Let's get started.

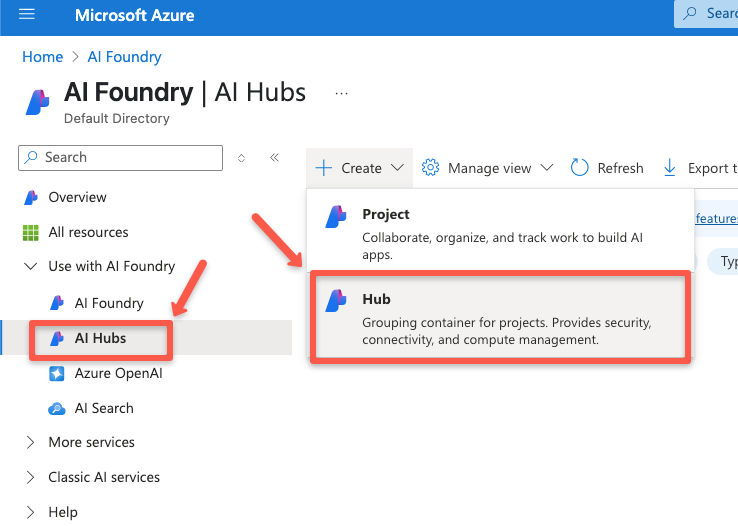

In the search bar, type Azure AI Foundry and open the service.

Navigate to AI Hubs, click the + Create button, and select Hub to start creating the hub.

The resource creation page will open.

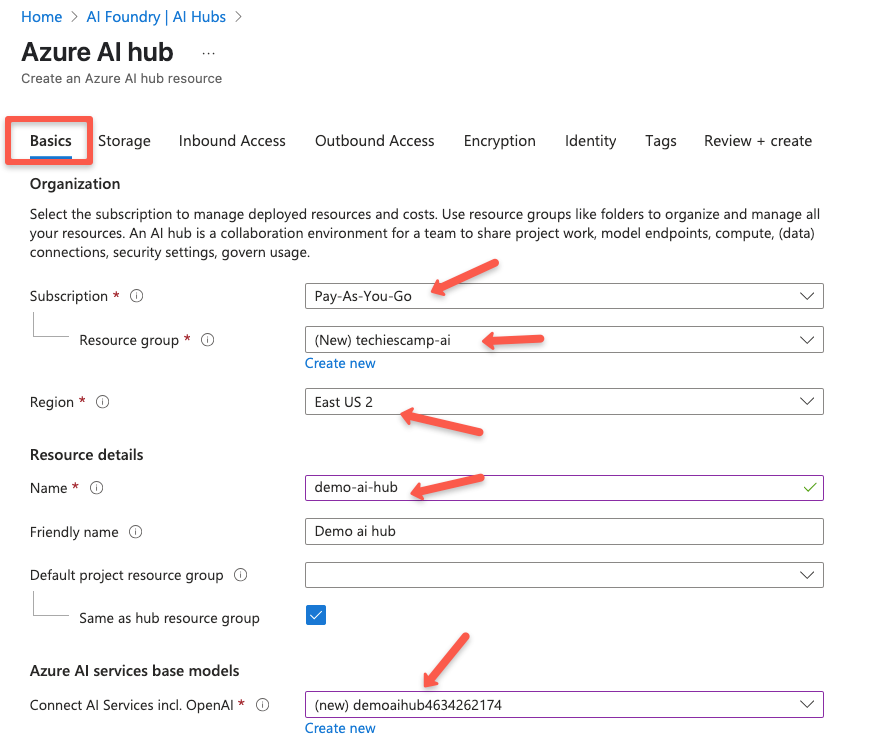

On the Basics page, select the subscription, resource group, region, and name for the Hub.

For the resource group, you can use an existing one or create a new one.

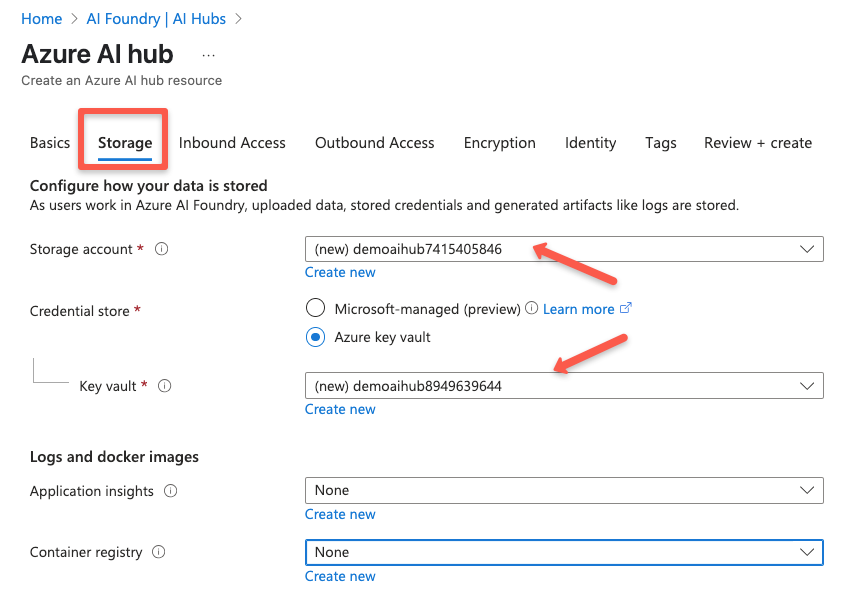

In the Storage section, you need a storage account to store your project artifacts. You can choose an existing one, or a new one will be created for you.

Along with this, you need to select one of the credential store options to hold the storage account and container registry credentials.

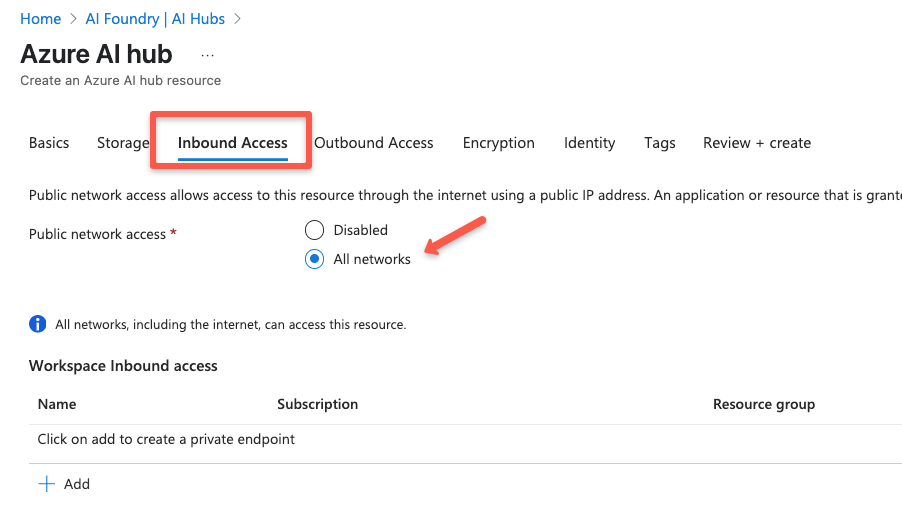

Next is the Inbound Access section, where we can configure how clients reach the Hub over the network.

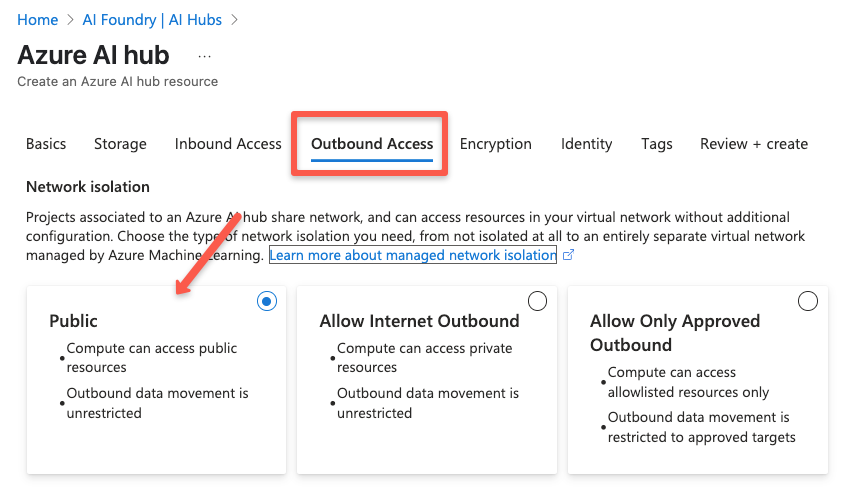

After that comes the Outbound Access section, where we can restrict outbound network access from the Hub.

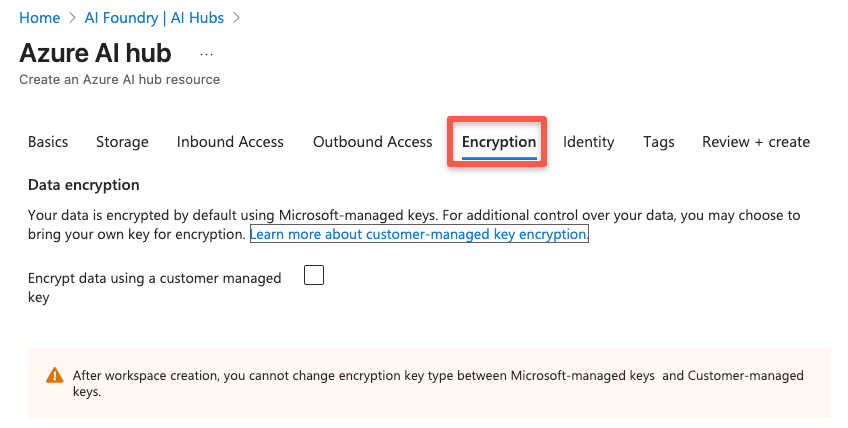

In the Encryption section, we can configure how our data is encrypted, either with Microsoft-managed keys or with customer-managed keys.

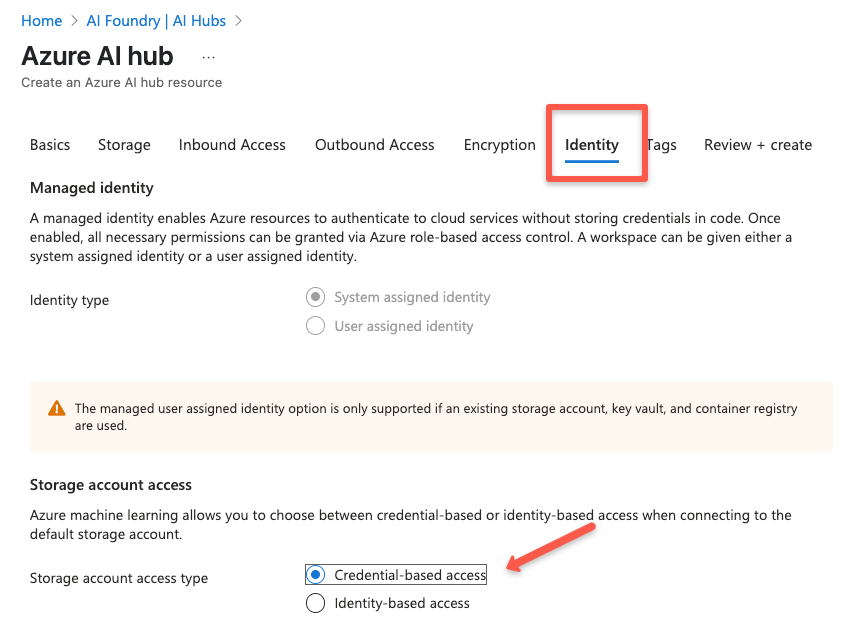

In the Identity section, we define the identity that the Hub uses to access the storage account, key vault, and container registry.

Finally, add the tags and review the given configuration, then click to create the Hub.

The Hub creation will take a few minutes to complete.
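If you prefer scripting over clicking through the portal, the same hub can be created from the Azure CLI. The sketch below only builds and prints the command, so it is safe to run anywhere. It assumes the Azure CLI with the `ml` extension is installed, and the hub name, resource group, and region are placeholder values we picked for this example.

```python
import shlex


def hub_create_command(name: str, resource_group: str, location: str) -> list[str]:
    """Build the Azure CLI arguments that create a hub-kind workspace."""
    return [
        "az", "ml", "workspace", "create",
        "--kind", "hub",
        "--name", name,
        "--resource-group", resource_group,
        "--location", location,
    ]


# Placeholder values (assumptions); replace them with your own.
command = hub_create_command("demo-ai-hub", "demo-rg", "eastus")
print(shlex.join(command))

# To actually create the hub (requires `az login` and the ml extension):
# import subprocess
# subprocess.run(command, check=True)
```

This covers only the Basics page. Networking, encryption, and identity settings from the other pages are not included in this sketch.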

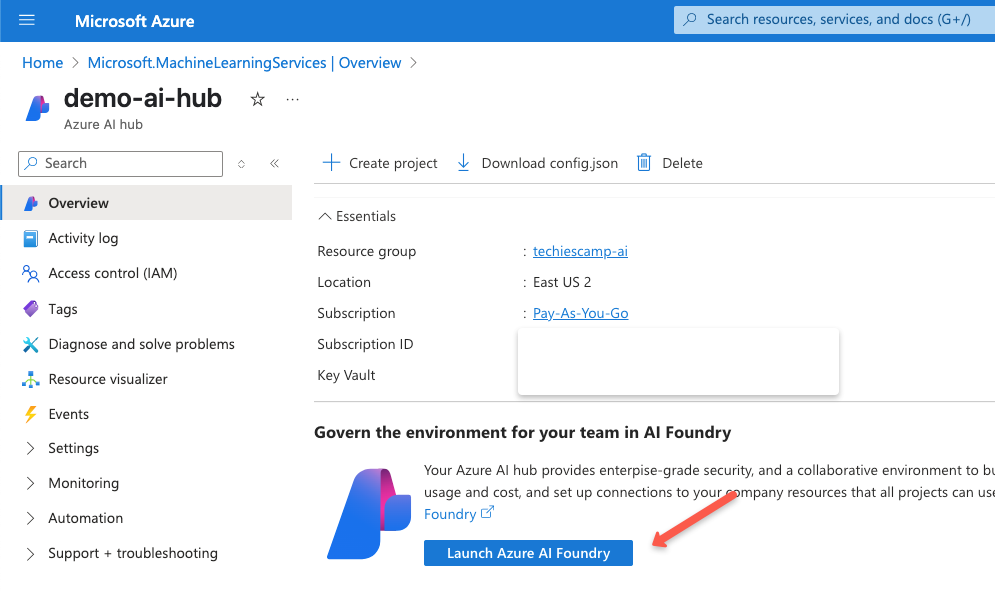

Once the Hub creation is completed, navigate to the Azure AI Hub resource and click Launch Azure AI Foundry to manage the resources.

You will be on a new dedicated page where you can manage your Hubs, Projects, and other services.

Now that we have the hub ready, the next step is to create a project under the hub.

Create an Azure AI Project

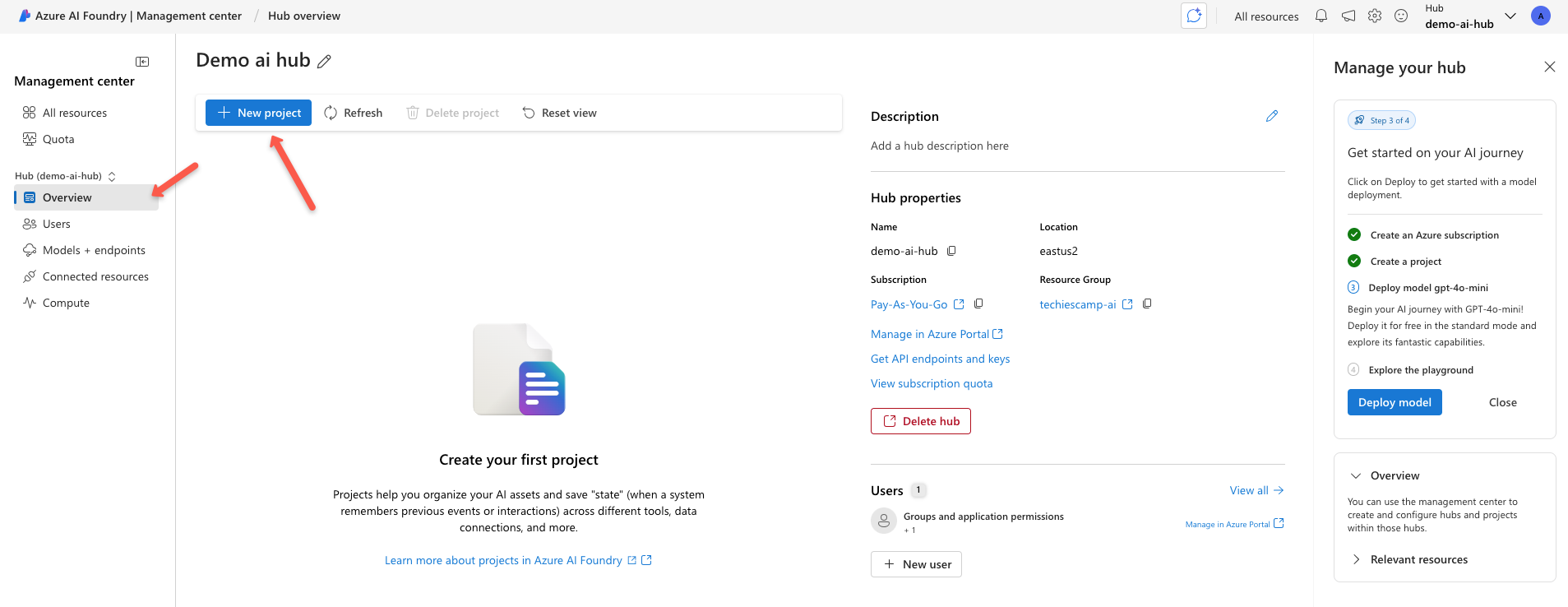

We can create multiple projects under one hub, and the hub-level configurations are inherited by all of them.

For the demo, we are going to create a single project under this hub (Demo ai hub).

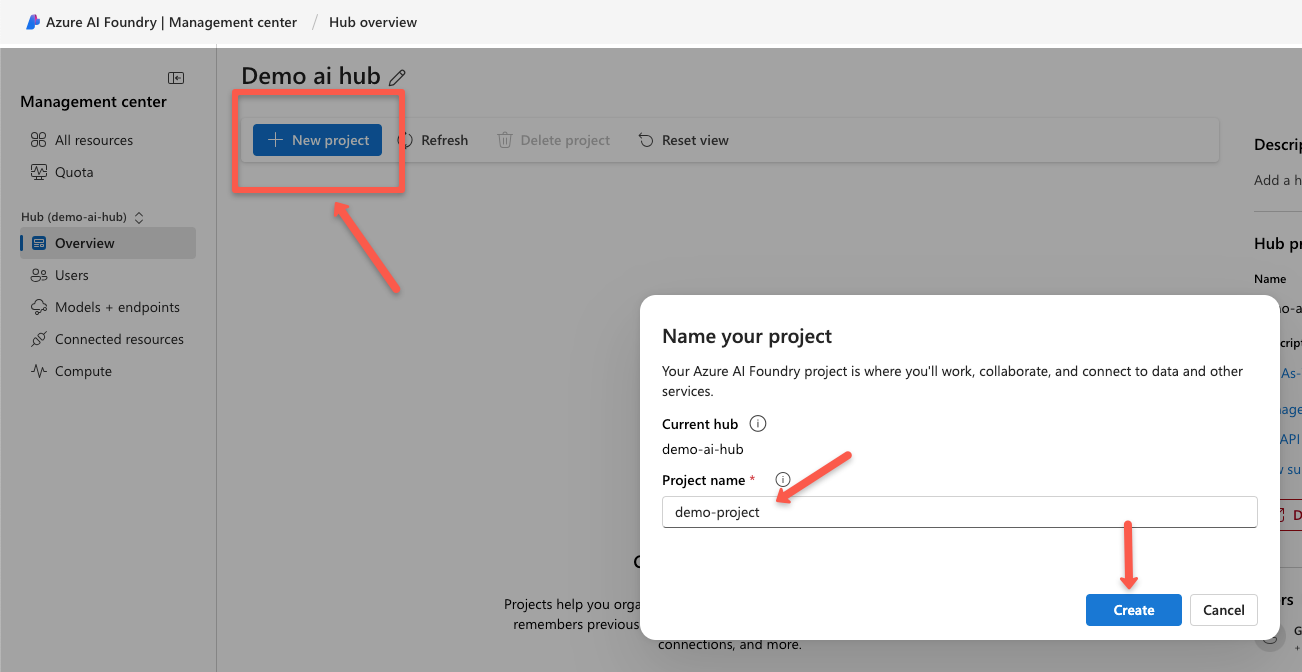

In the overview section of the Hub, we can create a new project by giving a name.
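A project can also be created from the Azure CLI by pointing it at the hub's resource ID. Here is a minimal sketch that builds that ID and the command without running anything. The subscription ID, resource group, and names are placeholders, and it assumes the Azure CLI `ml` extension is available.

```python
import shlex


def hub_resource_id(subscription_id: str, resource_group: str, hub_name: str) -> str:
    """Build the ARM resource ID of a hub (hubs are workspaces of kind 'hub')."""
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.MachineLearningServices/workspaces/{hub_name}"
    )


def project_create_command(name: str, resource_group: str, hub_id: str) -> list[str]:
    """Build the Azure CLI arguments that create a project under a hub."""
    return [
        "az", "ml", "workspace", "create",
        "--kind", "project",
        "--hub-id", hub_id,
        "--resource-group", resource_group,
        "--name", name,
    ]


# Placeholder values (assumptions); replace them with your own.
hub_id = hub_resource_id("00000000-0000-0000-0000-000000000000", "demo-rg", "demo-ai-hub")
print(shlex.join(project_create_command("demo-project", "demo-rg", hub_id)))
```

Passing the hub ID is what ties the project to the hub, so the project picks up the hub's shared configuration.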

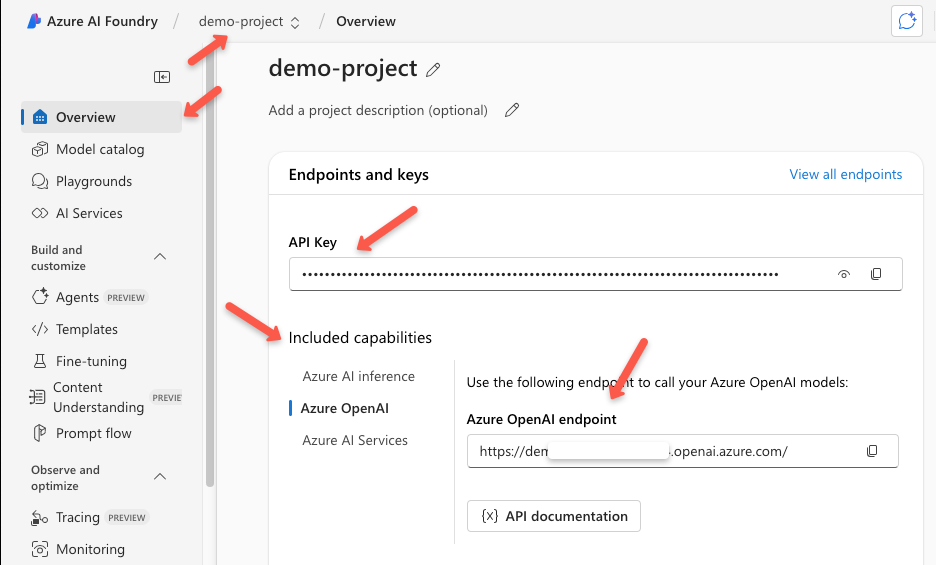

Once the Project creation is complete, you will be redirected to the project page where you can view the endpoints.

On the Project overview page, you will see three endpoints for different use cases:

- Azure AI Inference --> Endpoint for the non-default model

- Azure OpenAI --> Endpoint for the OpenAI service (Default model - gpt-4o-mini and the default embedding model - text-embedding-3-small)

- Azure AI Services --> This endpoint is for Azure's AI services, including vision, speech, and language.

All the projects under the AI Hub can utilize the security, connections, and configurations of the Hub.

Now that the project has been created under the hub, we can start deploying models and using them.

Deploying a Model In the Project

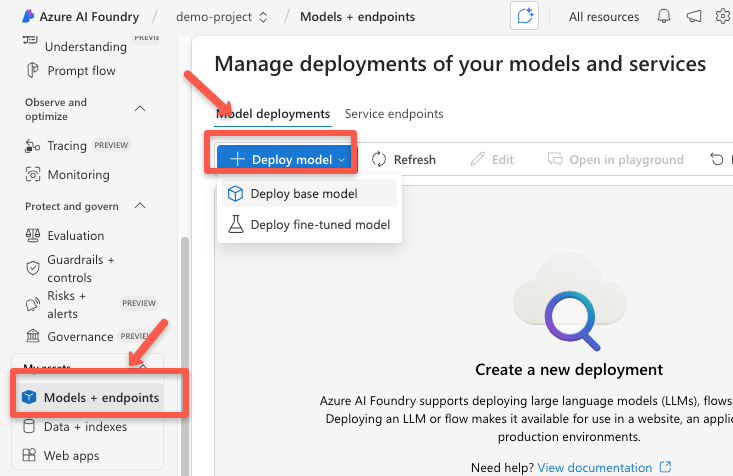

To deploy a model in the project, navigate to the Models + endpoints section in the left-side panel and select + Deploy model to choose either a base model or a fine-tuned model.

For this demo, we will choose one of the base OpenAI models, but you can choose any from the available list.

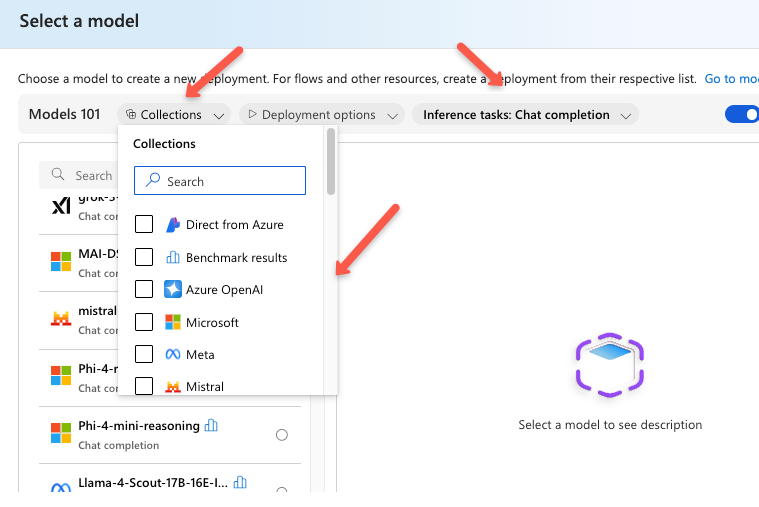

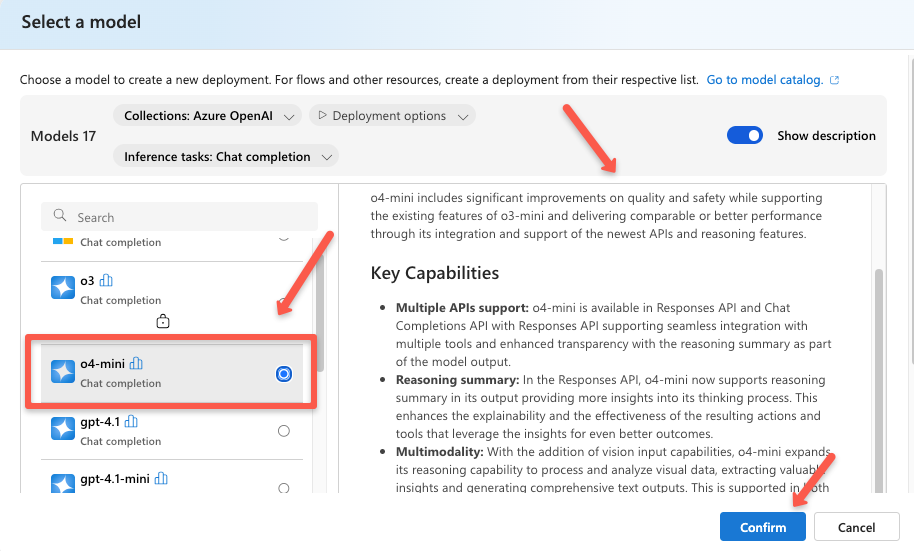

Choosing Deploy base model shows the list of all available models. We can narrow it down with the filters based on our use case.

Once you select a model, its description and details are shown.

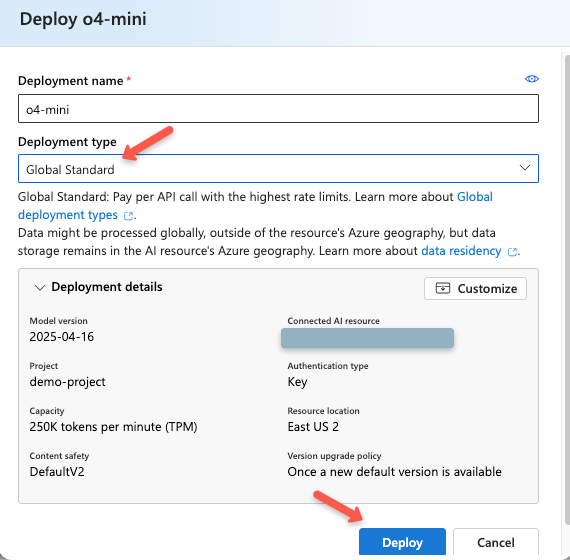

On the next page, the deployment details are shown, and we can customize settings such as the deployment type and tokens per minute as required.
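When picking a tokens-per-minute value, a rough back-of-the-envelope estimate can help. The helper below is our own sketch, not an Azure formula: it multiplies expected requests per minute by the average tokens per request, adds some headroom, and rounds up to the next 1,000. The traffic numbers and the 20% headroom are assumptions, and the service's own token accounting may differ.

```python
def required_tpm(
    requests_per_minute: int,
    avg_prompt_tokens: int,
    avg_completion_tokens: int,
    headroom_percent: int = 20,
) -> int:
    """Estimate a tokens-per-minute setting, rounded up to a multiple of 1,000."""
    tokens_per_minute = requests_per_minute * (avg_prompt_tokens + avg_completion_tokens)
    # Integer math avoids floating-point surprises when adding headroom.
    scaled = tokens_per_minute * (100 + headroom_percent)
    thousands = -(-scaled // (100 * 1000))  # ceiling division
    return thousands * 1000


# Assumed traffic: 30 requests/minute, about 800 prompt and 200 completion tokens each.
print(required_tpm(30, 800, 200))
```

Treat the result as a starting point and adjust it after watching real usage.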

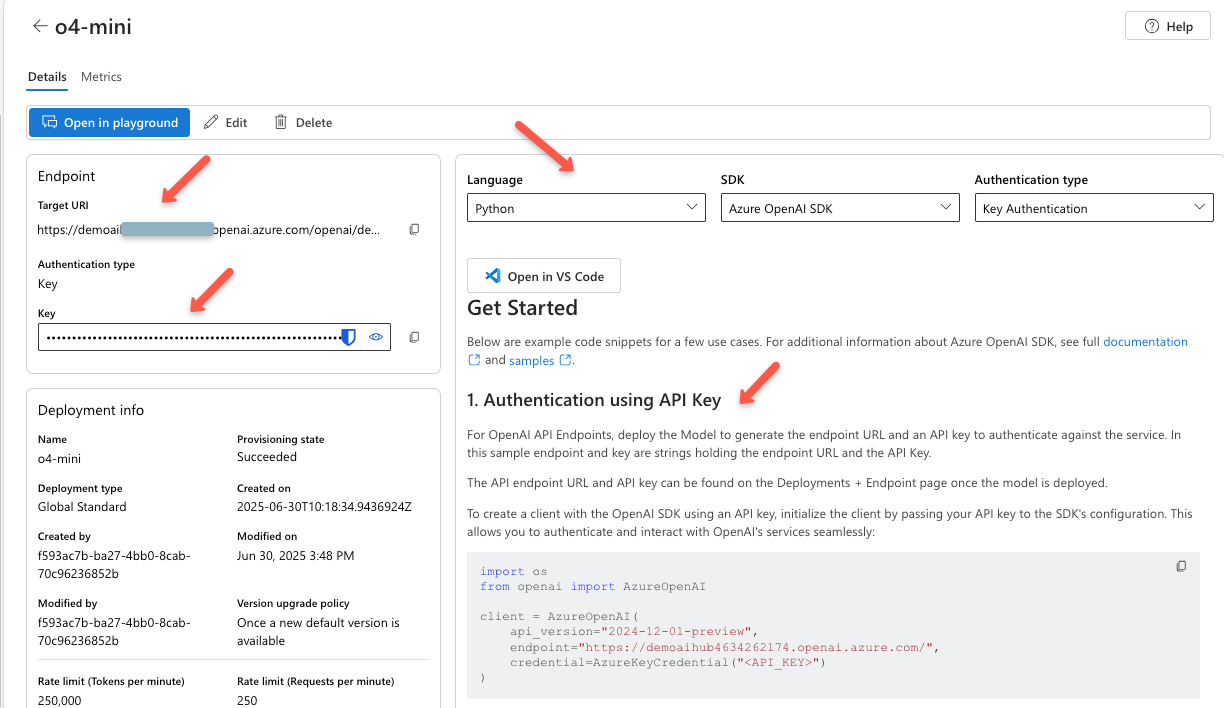

Once the deployment is successfully completed, we can see the API key, endpoint, and sample usage on the deployment page.
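As an illustration of how that endpoint and key are used, here is a minimal sketch that builds a chat completion request against an Azure OpenAI style endpoint using only the Python standard library. The resource name, deployment name, and API version are assumptions for this example, so copy the real values from your deployment page. The request is built but not sent, so nothing is called until you uncomment the last lines.

```python
import json
import urllib.request


def build_chat_request(
    endpoint: str,
    deployment: str,
    api_key: str,
    user_message: str,
    api_version: str = "2024-06-01",  # assumption: use the version your deployment shows
) -> urllib.request.Request:
    """Build (but do not send) a chat completions request for a deployed model."""
    url = (
        f"{endpoint.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    body = {"messages": [{"role": "user", "content": user_message}]}
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "api-key": api_key},
        method="POST",
    )


# Placeholder endpoint, deployment name, and key (assumptions).
request = build_chat_request(
    "https://example-resource.openai.azure.com/",
    "gpt-4o-mini",
    "<your-api-key>",
    "Hello!",
)
print(request.full_url)

# To send it once real values are filled in:
# with urllib.request.urlopen(request) as response:
#     print(json.load(response)["choices"][0]["message"]["content"])
```

In a real application, keep the key out of source code, for example by reading it from an environment variable or Key Vault.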

This is how we deploy a model in an Azure AI project and use it in our AI applications.

To see the pricing details, refer to this official documentation.

In the next section, we will look at some of the key features of AI Foundry.

Features of the Azure AI Foundry

The following are some of the key features of the Azure AI Foundry service.

- You can fine-tune your own models or use Azure’s pre-built models with your own datasets.

- Models can be deployed on virtual machines (managed compute) or as serverless endpoints.

- The Agent Service helps automate and complete different parts of your AI workflows.

- Built-in playgrounds let you test your models or apps directly without setting up any infrastructure.

- It offers Models as a Service (MaaS), so you can consume catalog models through an API without hosting them yourself.

- It supports both LLMOps and MLOps.

Azure AI Foundry vs Azure OpenAI

Azure OpenAI is a service that gives you access to OpenAI’s models like GPT-4, GPT-3.5, DALL·E, and Whisper through Microsoft’s Azure platform. It is a way to use these models with the security and scalability of Azure’s cloud.

Azure AI Foundry is a broader platform designed for building, deploying, and managing AI projects. It supports different AI models and frameworks, not just OpenAI’s.

It also includes tools for MLOps, so teams can handle the full AI development lifecycle. Think of it as Azure’s counterpart to Amazon SageMaker.

Conclusion

If you have made it this far, nice work!

We started with the basics of Azure AI Foundry, then walked through setting up the Hub, creating a project, and deploying a large language model.

The goal was to help you see where Azure AI Foundry fits in the bigger AI/ML picture, especially compared to Azure OpenAI. If you’re just using OpenAI models, Azure OpenAI might be all you need. But if you’re building full-on AI solutions from start to finish, AI Foundry gives you way more room to work.

And this is just the start.

In the next few posts, we will dig into real-world use cases, fine-tuning, version control for models, managing costs, monitoring tools, and tips for working with AI Foundry as a team.

Thanks for following along. If you decide to try out AI Foundry, I’d love to hear how it goes.

Let’s build some cool stuff.