In this blog, we will look at how sandboxed containers improve workload isolation and reduce security risks, and whether they’re right for your infrastructure.

By the end of this blog, you will have learned the following:

- Sandboxed containers

- Container runtime and host kernel access

- Performance trade-offs

- Organizations that use sandboxed containers in production

- Should you use sandboxed containers?

What Are Sandboxed Containers?

Sandboxed containers are containers run by a special type of container runtime that adds an extra layer of security by isolating each container from the host operating system (OS) and from other containers.

They are sometimes also called virtualized containers.

Here is how it works.

Unlike traditional containers (for example, those run by Docker), which share the host OS kernel, sandboxed containers use lightweight virtualization or other isolation mechanisms to separate each container from the host kernel.

This makes container breakout attacks much harder. A breakout is when a compromised container escapes its boundaries and gains access to the host OS kernel or to other containers.

This provides stronger security for multi-tenant environments (where many users or organizations share the same system).

Some popular sandboxed container runtimes include:

- gVisor (Developed by Google) – A user-space kernel that intercepts syscalls to provide extra security.

- Kata Containers – Runs each container inside a lightweight VM, ensuring strong kernel isolation.

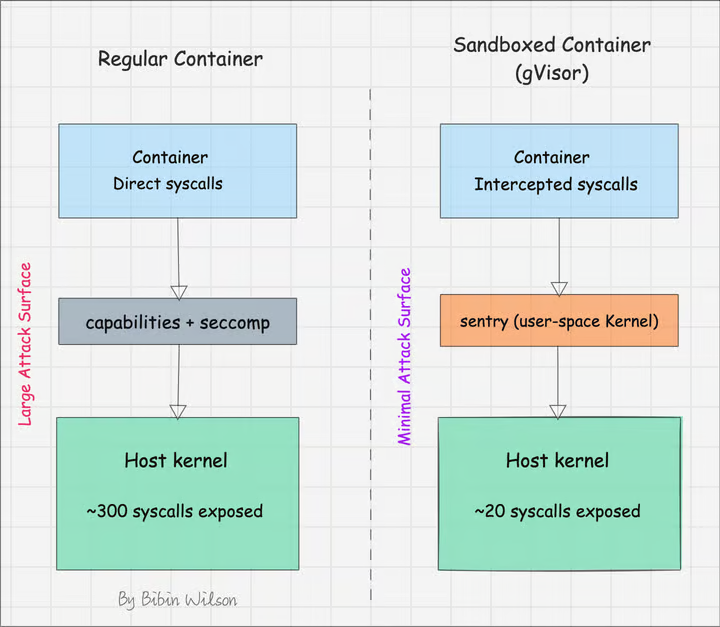

Instead of sharing the host kernel directly, these runtimes introduce an additional layer that limits direct access to system calls.

This isolates workloads much more strongly than standard container runtimes do.

For example,

- gVisor has its own "mini-kernel" (called the Sentry) that runs in user space rather than directly in the host kernel. When an app inside the container makes a syscall, gVisor intercepts it and the Sentry emulates it (implements the call itself) instead of passing it straight to the host kernel. The Sentry itself needs only a much smaller set of host syscalls to do its work.

- Kata runs containers in lightweight VMs, each with its own guest Linux kernel. That guest kernel exposes the usual Linux syscall interface, so the app's syscalls are handled by the VM's kernel, not the host OS kernel.
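To make the gVisor description more concrete, here is a toy Python model of a user-space kernel that intercepts syscalls and services them itself. It is a simplified illustration, not gVisor's actual code: `ToySentry`, its two implemented calls, and the values they return are all hypothetical. Calls the toy kernel does not implement fail with `ENOSYS` instead of being forwarded to the host, which mirrors the idea that the app never talks to the host kernel directly.

```python
import errno
from typing import Callable, Dict


class ToySentry:
    """Hypothetical user-space 'kernel' that services an app's syscalls itself.

    A simplified model of the gVisor idea, not gVisor's implementation.
    """

    def __init__(self) -> None:
        # Syscalls this toy kernel implements. Anything else is rejected
        # rather than forwarded to the host kernel.
        self._table: Dict[str, Callable[[], object]] = {
            "getpid": self._getpid,
            "uname": self._uname,
        }

    def _getpid(self) -> int:
        # The sandbox keeps its own process table, so the app sees a
        # virtual PID (1 for the first process) instead of a host PID.
        return 1

    def _uname(self) -> str:
        # Report the sandbox's own (made-up) kernel identity, not the host's.
        return "toy-sentry 0.1"

    def syscall(self, name: str) -> object:
        """Intercept a syscall by name and emulate it, or fail with -ENOSYS."""
        handler = self._table.get(name)
        if handler is None:
            # Unimplemented: the call never reaches the host kernel.
            return -errno.ENOSYS
        return handler()


if __name__ == "__main__":
    sentry = ToySentry()
    for call in ("getpid", "uname", "init_module"):
        print(call, "->", sentry.syscall(call))
```

For example, `ToySentry().syscall("uname")` returns the sandbox's own version string rather than the host's, and an unimplemented call such as `init_module` comes back as `-errno.ENOSYS`.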

Container Runtime and Host Kernel Access

To understand sandboxed containers, we first need to know how much access a typical container has to the host kernel.

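Most containers today run on container runtimes such as containerd or CRI-O, which typically use a low-level runtime such as runc to start containers directly on the host kernel.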

Let’s consider what happens when you run a basic container:

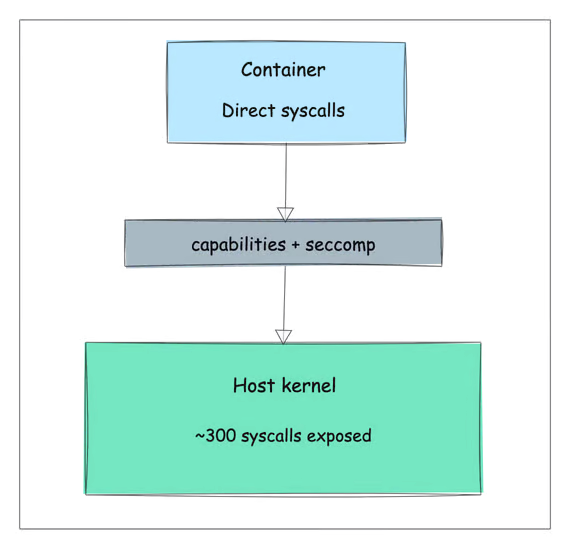

- Its processes make system calls directly to the host kernel.

- It shares the host's kernel, so it sees the same kernel version and features.

- It is controlled through Linux capabilities, which limit privileged operations.

However, even with these controls, containers can still:

- Make direct system calls to the kernel (Linux exposes several hundred of them), any of which could be exploited if it has a vulnerability.

- Read kernel version and system information.

- Access kernel modules and subsystems (though with some restrictions).

This level of access poses a risk when running untrusted workloads, especially in multi-tenant environments like CI/CD platforms.
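One way to see this shared-kernel access for yourself is to ask the kernel to identify itself from inside a container. The minimal Python sketch below reads `os.uname()` and, on Linux, `/proc/version`. Assuming you run it in a regular runc-based container, the release should match the host's `uname -r`; inside a Kata container you would expect the guest VM kernel's release instead, and under gVisor the version string that gVisor itself reports.

```python
import os
from pathlib import Path
from typing import Dict


def kernel_info() -> Dict[str, str]:
    """Collect what an unprivileged process can learn about its kernel."""
    uts = os.uname()  # available on Unix-like systems
    info = {
        "sysname": uts.sysname,
        "release": uts.release,
        "version": uts.version,
        "machine": uts.machine,
    }
    proc_version = Path("/proc/version")
    # /proc/version exists on Linux; it is absent on macOS, for example.
    if proc_version.exists():
        info["proc_version"] = proc_version.read_text().strip()
    return info


if __name__ == "__main__":
    for key, value in kernel_info().items():
        print(f"{key}: {value}")
```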

Performance Trade-offs

The stronger isolation comes with some performance trade-offs.

Because sandboxed containers add an extra layer between the application and the host (a user-space kernel in gVisor, a lightweight VM in Kata), they may consume more CPU and memory than traditional containers, and workloads that make many system calls can run slower.

Standard containers start almost instantly, whereas sandboxed containers may take slightly longer to start because of the added isolation layer.
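A rough way to observe syscall overhead is to time a tight loop of cheap system calls. The Python sketch below is an illustrative micro-benchmark, not a rigorous methodology: it averages the wall-clock cost of `os.stat(".")`, and that figure includes Python interpreter overhead. The idea, assuming you can launch the same image with both a standard runtime and a sandboxed one, is to run it under each and compare the relative numbers rather than trust any single absolute value.

```python
import os
import time


def syscall_cost_ns(iterations: int = 100_000) -> float:
    """Average wall-clock nanoseconds per os.stat('.') call in this environment."""
    if iterations <= 0:
        raise ValueError("iterations must be positive")
    start = time.perf_counter_ns()
    for _ in range(iterations):
        os.stat(".")  # each call issues a stat-family syscall to the kernel
    elapsed = time.perf_counter_ns() - start
    return elapsed / iterations


if __name__ == "__main__":
    print(f"~{syscall_cost_ns():.0f} ns per os.stat() call")
```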

Organizations that Use Sandboxed Containers

The following are some of the organizations that use sandboxed containers in production:

- OpenAI uses the gVisor runtime to run some of its high-risk tasks.

- Cloudflare uses gVisor for its build infrastructure.

- NVIDIA uses Kata Containers to support AI/ML workloads.

- Blink, a DevOps platform, uses gVisor to run its EKS Pods securely.

Use Case

Let's say you are building a SaaS-based CI/CD platform like CircleCI or Buildkite, where other companies run their build pipelines.

This service lets users define their own build steps and run any Docker container they need for their builds.

Ultimately, these build jobs run as containers or pods inside your cluster, and the separation between companies is mostly logical.

While companies are logically separated, they still share the same underlying system kernel.

Now, let’s say someone on your team mistakenly allows privileged mode in pod security settings.

This means a compromised build job could gain access to the host system. If that happens, one company’s build could access another company’s source code, secrets, or sensitive data.

This is a huge security risk!

So how do you avoid this?

To prevent such risks, you need stronger isolation between builds. This is where sandboxed containers come into play.
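In Kubernetes, the usual way to opt a Pod into a sandboxed runtime is a `RuntimeClass` object plus the Pod's `runtimeClassName` field. The Python sketch below builds both manifests as dictionaries and prints them as JSON, which `kubectl apply -f` accepts. It assumes each node's containerd or CRI-O has already been configured with a gVisor runtime whose handler is named `runsc`; the RuntimeClass name `gvisor`, the Pod name, and the `golang:1.22` image are hypothetical placeholders for your own values. The Pod also sets `privileged: false` explicitly so the build container itself does not run in privileged mode.

```python
import json
from typing import Any, Dict, List

Manifest = Dict[str, Any]


def runtime_class(name: str = "gvisor", handler: str = "runsc") -> Manifest:
    """RuntimeClass that maps a name Pods can reference to a node runtime handler."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        # Assumption: "runsc" must match a runtime configured in each node's
        # containerd or CRI-O settings; otherwise the Pod will fail to start.
        "handler": handler,
    }


def build_pod(job_id: str, image: str, runtime_class_name: str = "gvisor") -> Manifest:
    """Pod for a single (hypothetical) tenant build job, run in the sandbox."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": f"build-{job_id}"},
        "spec": {
            # Selects the sandboxed runtime instead of the node's default one.
            "runtimeClassName": runtime_class_name,
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": "build",
                    "image": image,
                    "securityContext": {
                        "privileged": False,
                        "allowPrivilegeEscalation": False,
                    },
                }
            ],
        },
    }


def manifest_list(items: List[Manifest]) -> Manifest:
    """Wrap several objects in a v1 List so one kubectl apply creates them all."""
    return {"apiVersion": "v1", "kind": "List", "items": items}


if __name__ == "__main__":
    doc = manifest_list([runtime_class(), build_pod("1234", "golang:1.22")])
    print(json.dumps(doc, indent=2))
```

Saved as a script (the filename is up to you), its output can be piped to `kubectl apply -f -`. Keeping the runtime choice in a RuntimeClass means the platform, not each tenant, decides which builds run sandboxed.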

Should You Use Sandboxed Containers?

If you're running sensitive, multi-tenant workloads like:

- CI/CD services

- Serverless functions (e.g., AWS Lambda, Cloud Run)

- SaaS platforms where customers execute arbitrary code

Then sandboxed containers are a great way to minimize risk and ensure better isolation.

However, if your workloads run in a trusted environment (such as an internal microservices architecture), the overhead may not be worth it.

Conclusion

Although sandboxed containers have some performance trade-offs, several enterprises use this technology to run their workloads securely.

Some companies that use sandboxed containers have tuned them to run at nearly the same speed as regular containers.

We hope this blog serves as a useful primer on sandboxed containers.
